Software is transforming and accelerating organisations of all kinds, both directly and indirectly. It is a key differentiator that allows organisations to offer value to customers and shareholders. The practices we use, such as sprint planning and stand-up meetings, to pick just two, have been widely adopted outside the IT field. This gives those of us who work in the field, in any capacity, an outsized influence over what the future looks like. That’s a wonderful thing, but we’d better use it wisely.

It can be hard sometimes to realise how far we’ve come. Twenty years ago I was doing my first professional programming job. I didn’t have access to the internet at work. The system I worked on, which ran HR for a mid-sized investment bank, got production releases four times a year. Planning, which seemed to take forever, was based on PRINCE2. Integrating code changes took weeks. Deployments, which required an outage, took days. If HR needed a new feature, it took around 12 months on average to make it into production, and it could take much longer. In my first month in the job I sat in a meeting in which the head of payroll described a change she needed urgently. It was still in testing when I left four years later.

About ten years ago I remember sitting in a software conference, hearing someone who worked at Amazon talk about an automated deployment pipeline that allowed them to make multiple production releases every day. It sounded like science fiction. The firm I worked for at the time was doing a production build every two weeks. We thought we were pretty cutting edge.

Our industry moves fast.

A challenge for all of us within it, whether engineers, marketers, team leads, or senior leaders, is that new ideas, architectural patterns, frameworks and libraries arrive so quickly it can feel impossible to keep up.

Whereas other industries introduce disruptive innovations only rarely, the software industry does so routinely, and often with a high degree of misplaced confidence. Moreover, change takes time, whether that means adopting a new technology or reshaping an organisation of any size. Technology leaders therefore need to bet on likely winners very early to gain any sort of competitive advantage.

One side effect of this is that we need a marketing model that supports this kind of product introduction. The Technology Adoption Lifecycle is the most widely used.

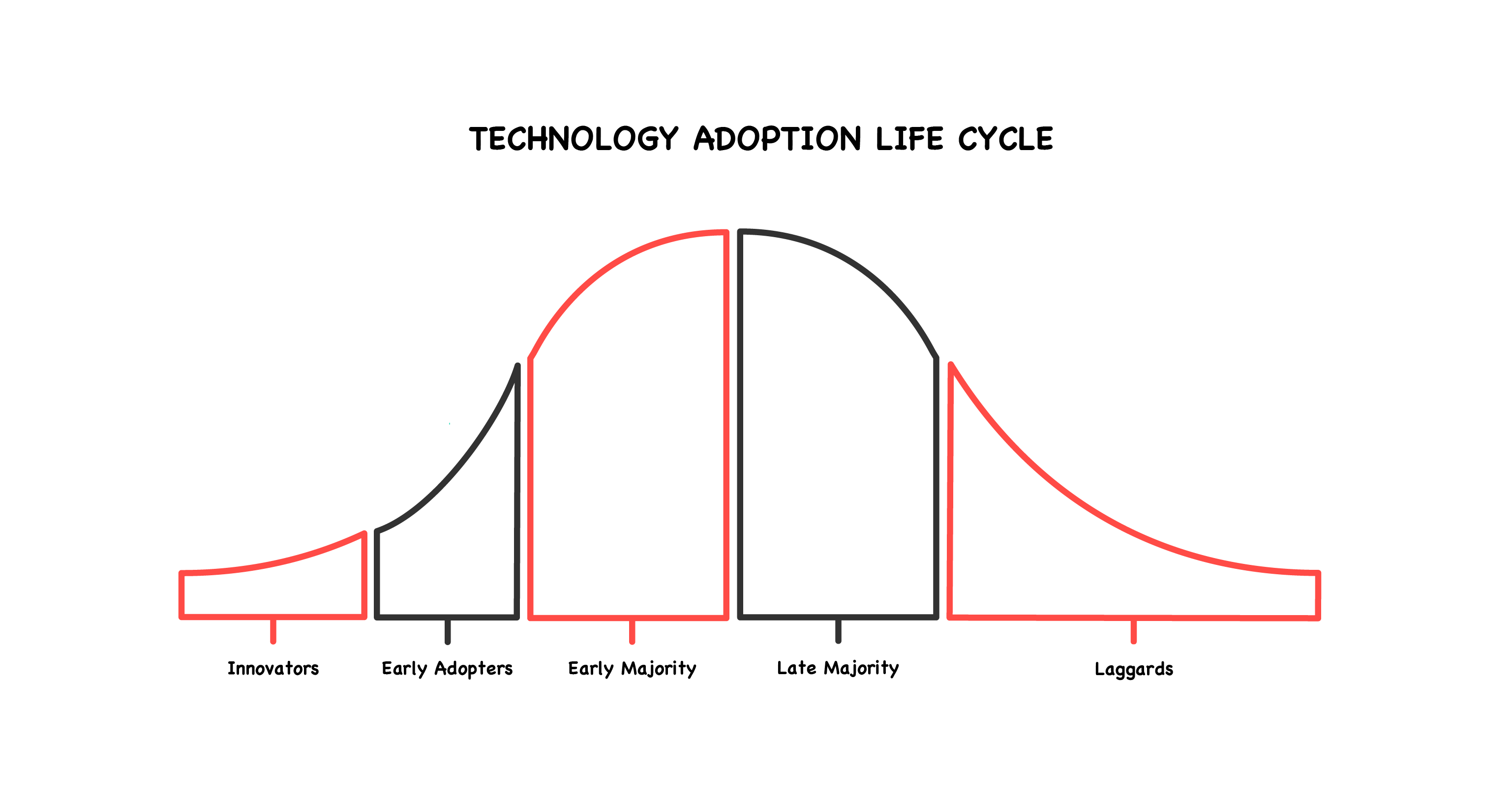

The model describes the market penetration of a new technology product in terms of the progression it makes over its useful life. It is drawn as a bell curve whose divisions fall roughly where the standard deviations would: the early majority and late majority each lie within one standard deviation of the mean, the early adopters between one and two standard deviations before it, the innovators beyond two, and the laggards more than one standard deviation after it.
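Taking the standard-deviation boundaries literally lets you work out what share of the market each group represents. The sketch below assumes a perfectly normal curve and the conventional boundaries (innovators more than two standard deviations below the mean, early adopters between two and one below, the two majorities within one either side, laggards more than one above); the names `normal_cdf`, `BOUNDARIES` and `adopter_shares` are hypothetical, chosen only for illustration.

```python
from math import erf, inf, sqrt


def normal_cdf(z: float) -> float:
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


# Hypothetical table of group boundaries, in standard deviations from the mean.
BOUNDARIES = {
    "innovators": (-inf, -2.0),
    "early adopters": (-2.0, -1.0),
    "early majority": (-1.0, 0.0),
    "late majority": (0.0, 1.0),
    "laggards": (1.0, inf),
}


def adopter_shares() -> dict[str, float]:
    """Share of the whole market falling between each pair of boundaries."""
    return {
        name: normal_cdf(upper) - normal_cdf(lower)
        for name, (lower, upper) in BOUNDARIES.items()
    }


if __name__ == "__main__":
    for name, share in adopter_shares().items():
        print(f"{name:>15}: {share:6.2%}")
```

Running it gives roughly 2.3%, 13.6%, 34.1%, 34.1% and 15.9%, which is where the rounded figures usually quoted for this model come from. Real adoption curves are rarely this tidy, so treat the numbers as a property of the idealised model rather than a forecast.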

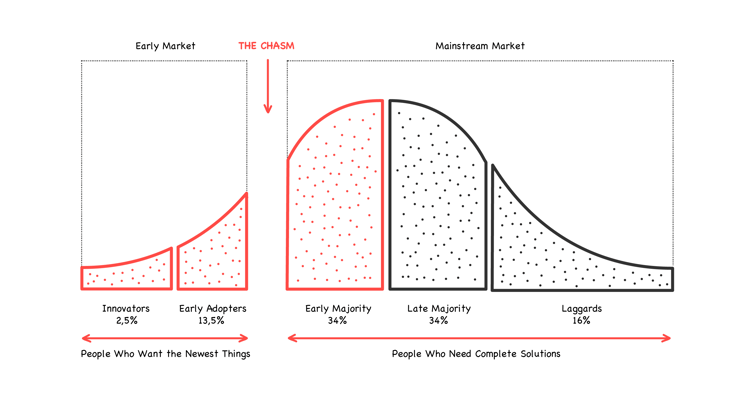

Over time, variations of the standard bell curve have arisen, most famously Geoffrey Moore’s version in “Crossing the Chasm”. Moore observed that there were cracks in the curve between each pair of adjacent groups. The key thing he noticed, however, was a huge chasm between the early adopters and the early majority. Many innovations never reach any level of mass adoption. “This is by far the most formidable and unforgiving transition in the Technology Adoption Life Cycle,” Moore writes, “and it is all the more dangerous because it often goes unnoticed.”

When considering this model from an enterprise software perspective, it is typically the firms seeking competitive advantage that adopt technology at the innovator and early adopter stages. For example, Netflix chose to shift to what we now call microservices so that it could execute faster than the much larger companies it was competing with. Other tech firms, such as Amazon, Google and eBay, independently arrived at more or less the same architectural pattern at roughly the same time, though not always for identical reasons. Many did so to deal with the horizontal scaling challenges of finding themselves operating at planetary scale, with team autonomy and speed of deployment a secondary but useful side effect. In each case the advantages outweighed the additional operational complexity being introduced, at least for a time.

Conversely, those who adopt later, at the early majority stage and beyond, tend to do so for one of two reasons: either they are in a dominant position in their market and are therefore seeking proof that a technology or strategy works before adopting it, or they are responding to a new competitor entering their market with newer technologies. Examples of the latter include the UK banks facing Starling and the other challenger banks, or the Wealth Grid story from our own O'Reilly book.

You might imagine that being first is always an advantage, but adopting later has its own benefits. In the case of microservices, adopting now means you can learn about the common pitfalls from the many case studies available. You also wouldn’t need to develop as much software from scratch: unlike, say, Netflix, you shouldn’t have to build your own monitoring system to get observability into your services. There are also plenty of frameworks designed to help with the basics, including Spring Boot, Quarkus and Helidon on the JVM, Go kit for Go, Flask for Python, Express for Node.js and so on.

Being later can also allow you to skip some steps. The BBC’s cloud migration, for example, saw it go straight from a fairly conventional LAMP stack to serverless/FaaS (specifically AWS Lambda), with fewer intermediate steps than earlier cloud adopters needed.

But regardless of your organisation's tech strategy, picking the right technologies is hard.

If your crystal ball is a bit cloudy and you don’t have access to a reliable fortune teller, one approach is experimentation, guided by tools such as ThoughtWorks’ Technology Radar, CNCF’s End User Technology Radar, our own Container Solutions Radar, and InfoQ’s Trend Reports. Techniques such as Wardley mapping can also be invaluable.

Within this WTF collection we wanted to explore some of the trends we feel are important in the cloud computing space. We’re also considering what the future of work might look like, as we can finally start to imagine life beyond the pandemic. Some key pieces this month:

10 Predictions for the Future of Computing, or the Inane Ramblings of our Chief Scientist: Adrian Mouat, our chief scientist, looks at what he believes to be the most important trends in languages, runtimes, deployment platforms and more.

The Reality of Hybrid Working: Hybrid working is likely to be the main way we work for the next year or two. But it is usually the most difficult of all working models, because it amplifies existing problems.

WTF is Cloud Native Quantum? Quantum computers represent an extraordinary feat of engineering. IBM’s Dr Holly Cummins explains their potential, how they work, why they are such a great fit for the cloud, and what they have to do with cats.

Why I Stopped Using POST and Learned to Love HTTP: internationally known author and speaker Mike Amundsen proposes abandoning POST as a solution to the Lost Response problem in HTTP-based web services.

Is It Time for a More Sustainable Relationship with Tech? In the first of two blog posts, Anne Currie, our chief ethicist, wonders whether we have reached the point where we will finally switch to cleaner energy and, if we have, what that might mean for tech.
