“When railroading time comes you can railroad—but not before.” ― Robert A. Heinlein

In his SF novel ‘The Door Into Summer’, Heinlein reckoned that the time had to be right for particular forms of technological change. Until the requisite foundation of tech and culture was in place, it wouldn’t happen.

The question is, have we reached the point where we will finally transition our energy to something cleaner? And what will that mean for the tech industry?

A Little Less Conversation A Little More Action

At its last re:Invent conference, in the autumn of 2020, Amazon proclaimed that it had finally joined Microsoft and Google in committing to a zero carbon cloud by 2030.

Earlier that year, a zero carbon target was announced by China and, under Joe Biden, the US is making major commitments in that direction. So is the EU.

Has carbon zeroing time come?

It’s Not You, It’s Me

One thing we’ve learned from the Covid pandemic is that staying at home, not taking any flights, and massively curtailing our lifestyles barely moved the dial on climate change. Carbon emission is so baked into our way of life that individual action makes almost no difference. Fixing it will require fundamental change that will affect every industry - including tech.

What Happened To Us?

Stepping back, why does the world even still use fossil fuels!? We’ve known there was a greenhouse gas issue for over half a century! The reason is, they’re hard to leave.

In every other respect, fossil fuels are bloody good and it’s not surprising that our entire modern society is built on them.

The thing about oil, petrol, and gas is they’re cheap, reliable, dense, and predictable. And those are compliments. They’re the reason power is available at the flick of a switch. The problem is, they’re also toxic. We need to walk away, but let’s not kid ourselves. It’s going to be tough.

At least for the next decade or two, we aren’t going to meet a replacement that gives us those benefits as well as fossil fuels do.

- Wind and solar are cheap, but not reliable and only moderately predictable (it isn’t always sunny or windy and weather forecasts aren’t perfect) and the requisite hardware is likely to increase in cost.

- Hydro is great, but only available in a limited number of areas.

- Storage-sized batteries are expensive and likely to become more so as the competition for the metals that go into them hots up. In addition, mining these metals is the cause of significant pollution.

- Physical storage solutions (like pumped water or compressed gas) have astonishingly low energy density compared to petrol, gas, or uranium. Hydrogen might be better but needs a lot of work.

- Electricity grids, which could help counter the unreliability, aren't big enough or good enough to transmit over long enough distances (yet - that will come).

- Nuclear solves some of this, but it’s expensive and the public doesn’t like it. I also have a suspicion that if humanity doesn't love fission it isn’t going to love fusion either. Changing from ‘I’ to ‘U’ might make for a good song on Sesame Street, but I can’t see it winning over anyone who’s watched ‘Chernobyl’ on HBO recently. Yes, we all know it’s totally different and tokamaks aren’t scary at all (!) but even if Big Bird can make that sale, fusion isn’t arriving on your local electricity grid for decades.

Breaking up is hard to do, but we need to accept that and do it anyway. How?

Long Distance Relationship

You might be thinking, “But solar and wind are so cheap!” and you’d be right. Generation, however, isn’t the whole story. You have to get the power to where and when it’s needed.

Oil is extremely energy dense. Not much else competes. Solar energy, for example, has a density of 1.5 microjoules per cubic meter - over twenty quadrillion times less than oil. I think we can all agree that’s a lot - despite having no idea what a quadrillion is or how exactly a comparison like that works. Whatever. The upshot is we aren’t going to be moving solar farms around on trucks. Even if one happens to already be where the power’s needed, it’ll take up a lot of space that could have been used for other pesky stuff like housing or food.
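As a rough check on how a comparison like that works, here is the arithmetic as a short Python sketch. The solar figure is the one quoted above. The oil figure of roughly 35 gigajoules per cubic meter is my own ballpark assumption for crude oil, not a number from this article, so treat the result as an order-of-magnitude check only.

```python
# Rough sanity check of the energy density comparison.
SOLAR_J_PER_M3 = 1.5e-6  # 1.5 microjoules per cubic meter (figure quoted above)
OIL_J_PER_M3 = 35e9      # ASSUMPTION: roughly 35 GJ per cubic meter for crude oil
QUADRILLION = 1e15       # short-scale quadrillion


def density_ratio(dense: float, sparse: float) -> float:
    """How many times more energy per unit volume `dense` holds than `sparse`."""
    return dense / sparse


ratio = density_ratio(OIL_J_PER_M3, SOLAR_J_PER_M3)
print(f"Oil is roughly {ratio / QUADRILLION:.0f} quadrillion times denser than sunlight")
```

Under that assumption the ratio comes out a little above twenty quadrillion, which is consistent with the claim.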

Hopefully, we’ll solve some of this with bigger, better electricity grids, which will mean we won’t need to deliver energy by road in future. Money has already been earmarked for that project in President Biden’s 2021 US economy plans. According to a 2019 report by the Brattle Group, the US will need to increase annual transmission spending from around $15 billion to $40 billion to achieve it. Transformation isn’t cheap.

Even with huge grids, however, the future is inevitably going to hold loads of power at some times and places, very little at others. That’ll probably mean variable electricity costs for consumers. Given that renewables in the UK have already given rise to the concept of negative energy pricing (when people are paid to use power) we have to bet the price variation will be large, which will have an impact on how future electricity-consuming products are designed.

Happily Ever After

Right now, you may be thinking, all this might be a problem if I ran my own data centre, but I’m in the cloud and the whole reason I pay <INSERT YOUR CHOSEN CLOUD SUPPLIER> the big bucks is so I don’t need to worry about this stuff.

That’s not a crazy position. According to Chris Adams of the Green Web Foundation, “energy efficiency has been something of a success story for computing. Over the last 10 years, we've been able to benefit from loads of valuable digital services. And its use has exploded. But when you look at the total energy use by data centers and internet infrastructure, it hasn't really changed all that much.”

As an industry our server use is going up, but our power consumption isn’t. That used to be about Moore’s Law but much of it these days is about the efficiency of cloud hosting (which can be up to 10X better than on prem).

However, the question remains: is the cloud enough?

On Cloud Nine?

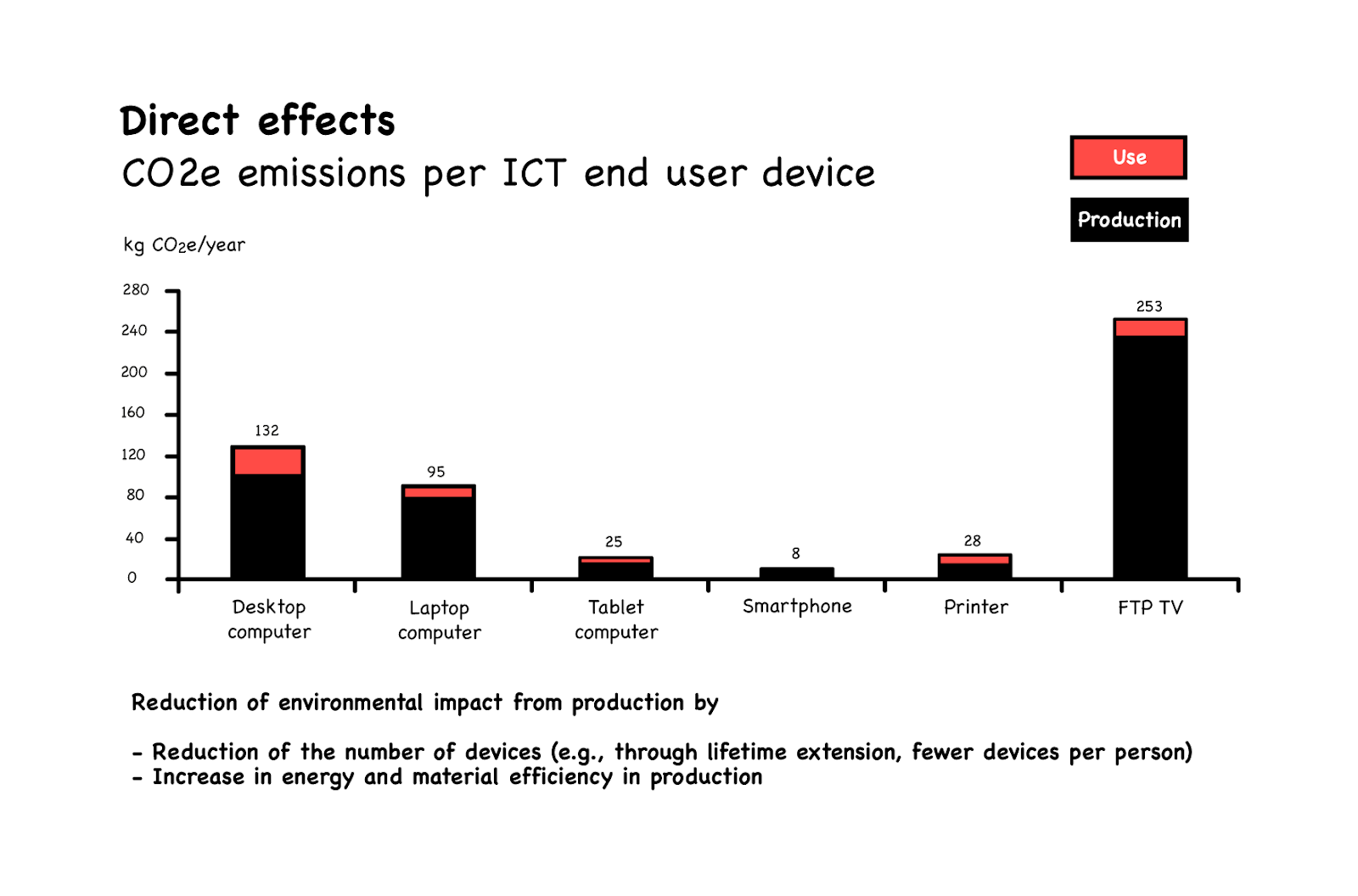

According to the European Commission, the two key climate issues facing the tech industry in the next decade are the electricity use of data centres and our hardware footprint. The good news is we’re making progress on the first through commitments like Amazon’s and the excellent and constantly improving efficiency of the cloud and cloud services.

In their Principles of Sustainable Software Engineering, Microsoft sees a climate-friendly future for tech coming down to:

- Using zero carbon electricity. (This can now be achieved by 2030 by using any reputable cloud - assuming they keep to their public commitments).

- Being software efficient. (Which can be achieved by effectively using most cloud services).

- Shifting work to times or places with available renewable power. (Again, cloud services will have to do this to meet their commitments).

- Being hardware efficient by using fewer machines and maximizing their lifespans. (Ah…)

Unfortunately, that final step is more difficult. The cloud is pretty efficient when it comes to its own hardware, but what about users’ devices?

Trash Talk

The UN reckons there is only one Sustainable Development Goal where the tech industry is having an actively negative impact on the world: sustainable production and consumption.

British households are, per capita, among the largest producers of e-waste. When you bin a product, you are walking away from all the energy that went into producing it and when you buy a new one, you’re pumping out a whole load of new carbon. And that’s not even mentioning all the metals.

A recent study by the University of Zurich suggested the carbon released during the production of end user hardware devices swamps their lifetime electricity emissions.

Where Do We Go From Here?

Our tech businesses are based on hardware, which has to be built (releasing carbon) and then runs on electricity that has to be generated (releasing carbon). It’s what we do. It’s all we do.

I’m not a futurologist, but I predict a couple of things will happen between now and 2030 that will affect us:

- Hardware will get a lot more costly because we’ll all be fighting over metals (hopefully only figuratively). Plus, we’ll have to start paying for those carbon emissions.

- Sometimes electricity will be much cheaper than now and sometimes much costlier, or necessarily fossil fuel based, or perhaps not available at all. Variable electricity pricing will become the norm (as it already is in Spain) making it a new variable to orchestrate systems for. ‘Always on’ services will be expensive and carbon intensive.
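To make "a new variable to orchestrate systems for" a bit more concrete, here is a minimal Python sketch of one way a deferrable job could pick its start time from an hourly price forecast. The function name, the price list, and the idea that a forecast is available in this shape are all assumptions for illustration, not a description of any real tariff or scheduler.

```python
from typing import Sequence


def cheapest_window(prices: Sequence[float], hours_needed: int) -> tuple[int, float]:
    """Return (start_hour, total_cost) of the cheapest contiguous run of hours."""
    if not 0 < hours_needed <= len(prices):
        raise ValueError("hours_needed must be between 1 and len(prices)")
    window_cost = sum(prices[:hours_needed])
    best_start, best_cost = 0, window_cost
    for start in range(1, len(prices) - hours_needed + 1):
        # Slide the window one hour: add the hour that entered, drop the one that left.
        window_cost += prices[start + hours_needed - 1] - prices[start - 1]
        if window_cost < best_cost:
            best_start, best_cost = start, window_cost
    return best_start, best_cost


# HYPOTHETICAL hourly prices; negative means consumers are paid to use power.
hourly_prices = [30, 28, 25, 12, -5, -8, 4, 20, 35, 40, 38, 33]
start, cost = cheapest_window(hourly_prices, hours_needed=3)
print(f"Start at hour {start}, total price {cost}")
```

With these made-up prices the job would wait until hour 4, when the window includes the two negative-price hours. An 'always on' service has no such choice, which is why it ends up paying the peaks.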

Basically, life will get more complicated for the operators of data centres and folk who sell hardware.

There Are Plenty More Data Centres in the Sea

What are the cloud providers doing about this?

- As a first step, they’re building carbon-free generation capacity in the form of wind & solar farms, nuclear, hydro etc. for their DCs. This is the bit that has gone really well over the past few years as solar and wind tech has become better and better.

- They’re also making their DCs more efficient. Microsoft is even doing crazy stuff like sinking them to the seabed to cut costs, energy use, and maintenance.

That’s all great. Unfortunately, however, variability of power remains an issue even for them.

The Last Dance

In theory, the answer is to physically or temporally move workloads to when and where resources are available. Think of it like a game of Tetris.

The leaders in this field are Google, who have been quietly doing something along these lines for nearly 20 years to improve their own DC efficiency. If you want to know the background, read their excellent Omega paper. Tl;dr: they rely on having very encapsulated workloads (they used an early version of container technology) that are extremely well labelled with how and when they need to be run (how important they are, how urgent, and what kind of resources they are likely to require, for example) plus loads of monitoring.
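To give a feel for what "well labelled" could mean in practice, here is a small Python sketch. It is not Google's format or scheduler; the `Job` fields, the job names, and the greedy packing rule are all hypothetical, chosen only to show how labels for importance, urgency, and resource needs let a scheduler decide what runs now and what waits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Job:
    name: str
    importance: int        # higher means more important
    deadline_hours: float  # how long the job can wait before it must start
    cpu_cores: int         # expected resource need


def schedule_order(jobs: list[Job]) -> list[Job]:
    """Most urgent first; ties broken by importance (highest first)."""
    return sorted(jobs, key=lambda j: (j.deadline_hours, -j.importance))


def fit_now(jobs: list[Job], free_cores: int) -> tuple[list[Job], list[Job]]:
    """Greedily pack jobs into the free cores; return (run_now, deferred)."""
    run_now, deferred = [], []
    for job in schedule_order(jobs):
        if job.cpu_cores <= free_cores:
            run_now.append(job)
            free_cores -= job.cpu_cores
        else:
            deferred.append(job)
    return run_now, deferred


# HYPOTHETICAL workloads and capacity, for illustration only.
jobs = [
    Job("video-chat", importance=9, deadline_hours=0, cpu_cores=4),
    Job("transcode", importance=3, deadline_hours=24, cpu_cores=16),
    Job("nightly-report", importance=5, deadline_hours=8, cpu_cores=2),
]
run_now, deferred = fit_now(jobs, free_cores=8)
print([j.name for j in run_now], [j.name for j in deferred])
```

A real system would also need the monitoring half of the story, to learn whether the labels were accurate.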

This approach has achieved resource utilisation rates in their own data centres that are more than four times higher than those in the average private DC. Although Google remains out in front, all the cloud providers now do something similar to get efficiency that kicks the butt of anything achieved on most on-prem servers.

Admittedly, delivering high utilisation is not an identical problem to handling intermittent electricity. However, you might expect folk to be able to use the same techniques to shift workloads to times when there’s available power.

Unfortunately, there’s a problem with that.

Sheer Idleness

There is an issue with only running jobs when the sun is shining (note - other variable sustainable power sources are available). When there’s no sun, your servers are sitting around with their feet up and that’s bad.

Imagine you built a data centre in the Sahara and you had loads of power during the day and none at night. All those lovely servers would only be on 50% of the time. That’s not a good use of the resources that went into making them. Servers have a limited lifespan and they take significant resources to create. You want to get maximum use out of them for the years of operation they have. Leaving them idle because there’s nothing to power them is a significant form of waste.
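The waste is easy to put numbers on. The Python sketch below spreads a server's manufacturing carbon over the hours it actually does work. The 1,000 kg and four-year figures are placeholders I made up for the arithmetic, not measured values.

```python
HOURS_PER_YEAR = 24 * 365


def embodied_kg_per_useful_hour(
    embodied_kg: float, lifespan_years: float, powered_fraction: float
) -> float:
    """Manufacturing carbon spread over the hours the server is actually powered."""
    if not 0 < powered_fraction <= 1:
        raise ValueError("powered_fraction must be in (0, 1]")
    useful_hours = lifespan_years * HOURS_PER_YEAR * powered_fraction
    return embodied_kg / useful_hours


# PLACEHOLDER figures: 1,000 kg CO2e to build, four-year lifespan.
always_on = embodied_kg_per_useful_hour(1000, 4, powered_fraction=1.0)
daylight_only = embodied_kg_per_useful_hour(1000, 4, powered_fraction=0.5)
print(f"Daylight-only costs {daylight_only / always_on:.1f}x the embodied carbon per useful hour")
```

Whatever the real figures are, halving the powered hours doubles the manufacturing carbon attributed to each hour of useful work.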

Stability is Important

And that’s not the only trouble. 4D Tetris is tricky. Optimising for fluctuating power availability is somewhat tough. And, TBH, I’m understating the problem. For most folk it’s too hard. If building it into your system has so many potential failure modes it would destabilise your products, you aren’t going to do it.

Even if your engineering team could cope with the complexity, moving jobs around in time and space only works if you have a mix of job types. You need variety in latency sensitivity (jobs that can wait vs ones that have to execute immediately), plus tasks that can be switched to a lower power consumption mode. Someone like Google, who has a wide variety of well-understood jobs such as real-time video chat (urgent, downgradeable) and YouTube video transcoding (high CPU, non-urgent), is at an advantage vs, let’s face it, you.
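Those two characteristics, urgency and downgradeability, can be read as a tiny decision table. The Python sketch below is one hypothetical policy, not something any provider has published: run everything when clean power is available, defer what can wait when it isn't, and degrade what can't wait if the job supports it.

```python
from enum import Enum


class Action(Enum):
    RUN = "run"
    RUN_DEGRADED = "run in low-power mode"
    DEFER = "defer"


def decide(urgent: bool, downgradeable: bool, clean_power_available: bool) -> Action:
    """HYPOTHETICAL policy for one job at one moment in time."""
    if clean_power_available:
        return Action.RUN
    if not urgent:
        return Action.DEFER  # wait for the sun or wind to come back
    # Urgent work runs regardless; degrade it if the job supports that.
    return Action.RUN_DEGRADED if downgradeable else Action.RUN


# Video chat is urgent and downgradeable; transcoding is neither.
print(decide(urgent=True, downgradeable=True, clean_power_available=False).value)
print(decide(urgent=False, downgradeable=False, clean_power_available=False).value)
```

If every job you run is urgent and can't be degraded, every branch ends in "run", and there is nothing for the scheduler to play with.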

Fundamentally, these problems are ones you want to leave to specialists and right now, that means cloud providers. Especially now that they have all committed to actually doing it in a zero carbon way.

Is It The End?

“If something can’t go on forever, it will stop.” - Herb Stein’s Law

In 2020, the US, China, the EU, and the major cloud providers all finally agreed: the time has come to carbon zero.

By 2030, everything is going to look very different. If the EU is right, surviving tech companies will have made two changes:

- Their data centre fossil fuel energy use will have dropped to nothing (this is already in hand as long as you use the cloud).

- Their hardware waste from phones, tablets, and laptops will have gone (this is a long way behind in terms of progress, but there are some hints for how it will be achieved).

A new world is coming and your products and systems are going to have to live in it.

Everyone already has a cloud strategy, but if your business has no strategy for addressing the hardware issue, I’m afraid you’ll fail. Even if the law doesn’t step in (it will) the current worldwide move to renewable power generation will drive up demand and costs for the metallic components of hardware. Companies whose revenue comes from frequent hardware upgrades from their customers will need to think very hard about their plans.

What might they come up with? Find out in the next post...
