When the White House says something is a threat, many people’s first reaction is to work out precisely what it is that the president and his aides are really trying to distract us from.

President Biden issued an executive order on cyber security last year pledging, amongst other things, to protect software supply chains. This was followed just last month by a White House meeting on securing the open-source software supply chain specifically, by securing development infrastructure and tooling and by identifying key open-source projects.

In this case, Washington doesn’t appear to be taking aim at a cyber-strawman, but responding to a wave of state-backed cyber-attacks, including the SolarWinds attack.

But it’s not just the Feds who are vulnerable. Just consider the havoc caused by the discovery of an almost ten-year-old vulnerability in Log4j last December. Once it was disclosed, hackers sprang into action, scanning for it and releasing exploits, while admins raced to patch systems even as further bugs in the logging tool were uncovered.

Developers were only just recovering from that holiday-season mayhem when two JavaScript packages, Faker.js and Colors.js, started behaving, well, strangely. In that case it was down to self-sabotage by the developer of the two utilities, apparently in response to largely fruitless efforts to attract sponsorship.

So, it’s clear insecure software supply chains are a problem, and one that’s got worse over the last few years. According to Sonatype’s State of the Software Supply Chain report, 29 per cent of popular open-source projects, which make up 10 per cent of the ecosystem, contain at least one known vulnerability, compared with only 6.5 per cent of “non-popular” projects.

These legacy vulnerabilities present a tempting target for malefactors, particularly those aligned with nation states. They also prompt the question, how many more “unknown” vulnerabilities are out there? From there, it’s just a tiny leap of logic for the bad guys to seize the initiative and insert their own vulnerabilities into open-source projects. Sonatype describes these as “next generation software supply chain attacks”.

Unsurprisingly, then, there was a 650 per cent increase in cyber attacks on open-source software supply chains in 2020/2021, according to Sonatype’s research. This was on top of a 430 per cent increase the previous year. The most common attacks are dependency or namespace confusion attacks, followed by typo-squatting attacks. In both cases, the attackers are relying on harried developers downloading a malicious package with a similar name to the one they actually want.

The scariest, but least common, attacks are malicious source injections, where malware is injected directly into an open-source project, whether by hijacking a developer’s account, contributing code, or tampering with tooling, so that it flows into downstream apps.

Time to reflect

Next generation or not, this is not a completely new issue. Once you start asking people about software supply chain attacks, there seems to be a 50 per cent chance they’ll mention “Reflections on Trusting Trust”, Unix pioneer Ken Thompson’s Turing Award acceptance lecture. First published in 1984, the paper asks whether one can trust a statement that code is free of “trojan horses”. Thompson concludes, “You can’t trust code that you did not totally create yourself,” adding “Especially code from companies that employ people like me”.

But thousands of developers do choose to trust other people’s code – without knowing anything about them. As Professor Nishanth Sastry of the Surrey Centre for Cybersecurity at the University of Surrey says, this highlights the tension between “the efficiencies that you derive from relying on other specialists to produce things for you versus the robustness that derives from having multiple different implementations.”

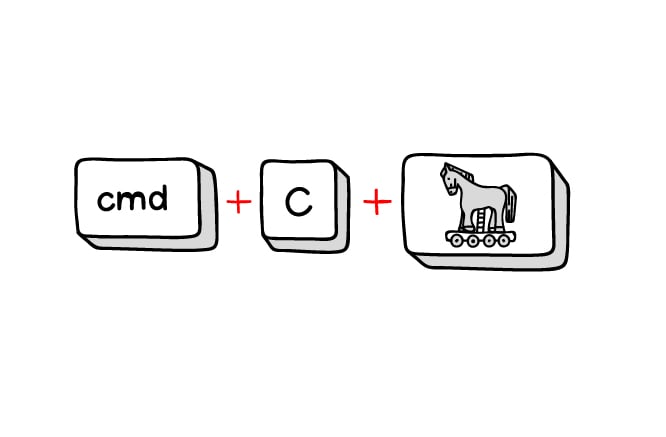

And while the debate often focuses on formal projects and repositories, Sastry points out that “the first stop for most developers today is Stack Overflow… copy-paste coding is very, very common.” So, he continues, “If it turns out that code actually has a flaw in it, then it's quite likely that that'll affect a lot of other people.”

This is a beguilingly simple way to distribute bad code. But as Sonatype’s numbers show, beguilingly simple methods are often all that attackers have needed, to date at least.

So, how complex do the solutions need to be?

David Wheeler of the Open Source Security Foundation (OpenSSF), a Linux Foundation offshoot, says, “There are many things we could do, but let’s start with the simple ones.”

When it comes to vulnerabilities, he says, the biggest risks can come from the smallest projects, where there is minimal review; Faker.js and Colors.js are a case in point. “One of the countermeasures for a developer going haywire is having someone [else] who reviews it.”

As for supply chain attacks, he continues, typosquatting is the most common, but “As a user it’s easy to counter. Double check the name.” Likewise, checking how many times a project has been downloaded is another clue: “If it’s been downloaded ten times, it’s probably not the one you want.”
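Wheeler’s ten-second check can be partly automated. The Python sketch below assumes a hypothetical allowlist of packages a team actually uses, and flags any requested name that closely resembles, but doesn’t exactly match, one of them. It relies on the standard library’s difflib similarity ratio with an arbitrary 0.8 cutoff; it is an illustration rather than a substitute for looking at the name yourself, and it won’t catch dependency confusion, where the malicious package carries exactly the right name.

```python
import difflib

# Hypothetical allowlist: the packages this team actually intends to install.
KNOWN_PACKAGES = ("requests", "numpy", "pandas", "colors", "faker")


def lookalike_names(name, known=KNOWN_PACKAGES, cutoff=0.8):
    """Return known package names that `name` resembles without matching exactly.

    An empty list means `name` is either on the allowlist or not close to
    anything on it; a non-empty list is a hint to double check for a typo-squat.
    """
    candidate = name.strip().lower()
    if candidate in known:
        return []
    # get_close_matches ranks by SequenceMatcher.ratio() and drops scores below cutoff.
    return difflib.get_close_matches(candidate, known, n=3, cutoff=cutoff)


for requested in ("requests", "reqeusts", "colours", "leftpad"):
    print(requested, "->", lookalike_names(requested) or "no lookalikes")
```

The cutoff is a trade-off: set it lower and more genuine packages get flagged as suspects; set it higher and transposed letters may slip through.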

Wheeler adds, “Automation is awesome, but computers are good at fulfilling directions, whether or not that’s what you want. In the end, all the tools are not going to be as good as taking ten seconds to check before you download a package.”

When it comes to malicious injection, he says, things can get slightly overblown. “There’s this idea that people can make a change and it gets worked in. That’s not the case. The issue is can you get it accepted and make sure it’s not noticed later?”

The easiest way to get code in in the first place, he says, is to hijack a legitimate developer’s credentials. So the organisation has been working to counter this by encouraging developers to use multi-factor authentication.

More broadly, he says, developers should ask themselves, “What can I do?” His answer is that they should get involved with and contribute to projects they care about, and support efforts to improve security, like the OpenSSF itself.

That said, Wheeler says it’s a no-brainer that CI/CD pipelines should incorporate automated testing and scanning for vulnerabilities and other flaws. “Looking for known vulnerabilities is a really good idea,” he understates. So is searching for hard-coded secrets, such as passwords.
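Wheeler doesn’t name a particular tool. As a rough illustration of what a hard-coded secrets check in a pipeline does, here is a minimal Python sketch. The three rules (an AWS-style access key prefix, a password-like variable assigned a quoted literal, and a PEM private key header) are illustrative assumptions, and the function names are hypothetical; production scanners use far larger, tuned rule sets and also look through commit history.

```python
import re
from pathlib import Path

# Illustrative rules only; real secret scanners ship far larger, tuned rule sets.
SECRET_PATTERNS = {
    # AWS access key IDs start with "AKIA" followed by 16 upper-case letters/digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # A password-like variable assigned a quoted literal, e.g. db_password = "hunter22".
    "hardcoded_password": re.compile(
        r"""(?i)(password|passwd|pwd)\s*[:=]\s*['"][^'"]{4,}['"]"""
    ),
    # PEM private key headers pasted into source or config files.
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}


def scan_text(text):
    """Return (line_number, rule_name) pairs for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings


def main(paths):
    """Scan files and return a CI-style exit code: 1 if anything was found, else 0."""
    found = False
    for path in paths:
        text = Path(path).read_text(encoding="utf-8", errors="ignore")
        for lineno, rule in scan_text(text):
            print(f"{path}:{lineno}: possible {rule}")
            found = True
    return 1 if found else 0
```

In a pipeline, a step could pass the changed files to `main` and hand its return value to `sys.exit`, so that any finding fails the build. Reading a secret from the environment, as in `password = os.environ["DB_PASSWORD"]`, does not trip the password rule, which is the behaviour the check is meant to encourage.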

Visibility is not enough

But visibility is just part of the equation, says Paul Baird, UK chief technical security officer at Qualys and former global head of cyber security operations at Jaguar Land Rover. Developers and security pros also need to be able to remediate problems quickly when they are spotted or disclosed, and need a game plan for doing so.

Not least, he says, because “Nine times out of 10, that bad actor is using automation, and they have potentially unlimited money, depending what type of bad actor it is.” This means, “You're always up against it. So you've got to have your ducks in a row, and be able to find these vulnerabilities and fix them quickly.”

A broader problem, adds Wheeler, is that secure coding is still not being taught to developers. College courses still do not include enough security-focused content, he says. Moreover, over half of developers don’t actually study their craft at college anyway. Hence the ongoing problem of hard-coded vulnerabilities. “We want people to get these tools, but we want to educate people as well.”

This gets some pushback from Sastry, who says, “I don’t know a computer science programme where people are not taught security.” Some sort of certification could help, he continues, but most big companies will be following best practice anyway.

For its part, the OpenSSF has launched guidelines on secure development and disclosing vulnerabilities, as well as its Security Metrics initiative, which includes a Scorecard on the security posture of open-source projects and a Criticality Score to gauge their influence.

This is the first element of its Alpha Omega initiative. The Omega element targets the “long tail” of OSS projects, aiming to identify vulnerabilities in “at least 10,000 widely-deployed projects” using cloud-scale analysis technology as well as “people and process”.

From the user’s point of view, one anonymous CISO-turned-CRO tells us they see “a huge amount of value in any kind of industry scheme that starts providing standardised due diligence data.”

More practically, they continue, “I can see a world in which there might start to be a sanitized, parallel, GitHub equivalent, that's taking a couple of versions back of software that’s had some months in the field so that you're actually running not quite ‘current [code]’ but stuff that’s had a chance to be tested.”

Turning on thousands of eyes

Even so, the CRO warns, “With this particular challenge… I think it's quite hard to do the preventative stuff. Realistically, you're probably in a space of detect and respond. And that means if you're going to use it safely, you probably want to build systems that have limited failure modes.”

And Sastry says there are limits to the power of the crowd to keep software clean. He points to the Hypocrite Commits debacle, where researchers from the University of Minnesota submitted dubious code to the Linux kernel review process.

“This paper shows that actually, you can get things past them. You need to have defense in depth. And one thousand eyes gives you one thousand layers of depth. But there are going to be a million or a billion users, so there’s an incentive to get something past the thousand eyes.”

The big question, perhaps, is whether supply chain attacks are enough to stop the juggernaut that is open source.

Wheeler, unsurprisingly, says “No. Software supply chain attacks don’t endanger the OSS ecosystem as long as many OSS developers and foundations act – and we are acting.”

Baird says the idea of not using open-source software is “just not practical at the moment.” There may, though, be a limit to how much formal regulation the open-source ecosystem can take, given how much project maintenance is still carried out on a volunteer basis. “It's going to cost them money and I think you're going to start to see open-source projects dying if you go too heavy on regulation and governance.”

But it’s also worth remembering not everyone has fully committed to open-source software yet. Our anonymous CRO says that with a relatively stable, legacy estate, open-source supply chains are not currently a great concern. But they become more of an issue as an organisation moves towards a more digitally agile stance, particularly where this means working with outside agencies.

“If we started an incubator business that was starting with a blank slate, I’d be concerned about it,” they say. “If I was working for a start-up that was building new tech, I’d be really concerned.”

And as Sastry says, “The problem with security is that it’s kind of a negative property. It’s only when it’s broken that you know that it wasn’t secure in the first place. You always think of a particular threat model or a bunch of threat models, and then if something new happens, which you hadn't thought of before, then your threat model is kind of invalid anyway.”
