If you’re looking for an example of how ostensibly smart people can accidentally mess up other people’s lives, look no farther than the case of last year’s UK public exams.

To recap: the government, in recognition of the Covid-19 threat, cancelled public exams for British students. In seeking an alternative grading methodology, the government and its education regulator arguably broke the law, either through ignorance or by choice. They seemed to lose sight of the fact that what they were doing was more than a statistical exercise: it directly and damagingly affected individuals.

How on earth could that happen? The story of the “Ofqual Algorithm” and how it went wrong holds practical and ethical lessons for organisations in every industry.

Predicting grades

In March 2020, Gavin Williamson, the UK government’s education secretary, announced that all 2020 public exams would be cancelled to avoid accelerating the Covid pandemic. Instead, students would be awarded grades based on teacher assessment.

These exams, which include GCSEs taken at 16 (the end of compulsory education), and A-levels (used as university entrance exams), really matter.

In place of the exams, the Office of Qualifications and Examinations Regulation—the English regulator known as Ofqual—asked teachers to provide predicted grades, referred to as Centre Assessment Grades, or CAGs. Williamson’s instructions asked the regulator to calculate students’ grades based on their teachers’ “judgements of their ability in the relevant subjects, supplemented by a range of other evidence”.

However, Ofqual was concerned that teachers tend to be overly optimistic about their students’ attainment, and that this would lead to higher grades than the students deserved. It also worried about a return to a previous era of widespread grade inflation. Last summer, it published an interim report warning that relying solely on CAGs would be “likely to lead to overall national results that were implausibly high. If we had awarded grades based on CAGs we would have seen overall results increase by far more than we have ever seen in a single year.”

To try to overcome this, teachers were also asked to assign a ranking for each student. The ranking was a simple number, from best performing to least, with no overlap. The rankings would be used, Ofqual stated, “to standardise judgements—allowing fine tuning of the standard applied across schools and colleges”.

In reality, as we’ll see, for the majority of students the final awarded grades were based solely on these rankings, with the teacher grades entirely ignored.

How the algorithm worked

The regulator developed a mathematical model, described in detail in the Ofqual report. The model is fairly complex, but has been broken down by Jeni Tennison, vice president of the Open Data Institute.

For example, this is how it worked for A-levels: for each subject in each school where more than 15 students were entered, Ofqual took information about the past three years’ performance and worked out a distribution of grades from A* at the top to U, for unclassified, at the bottom. That predicted distribution was adjusted up or down if the students seemed more or less accomplished than previous years’ students, based on their GCSE results.

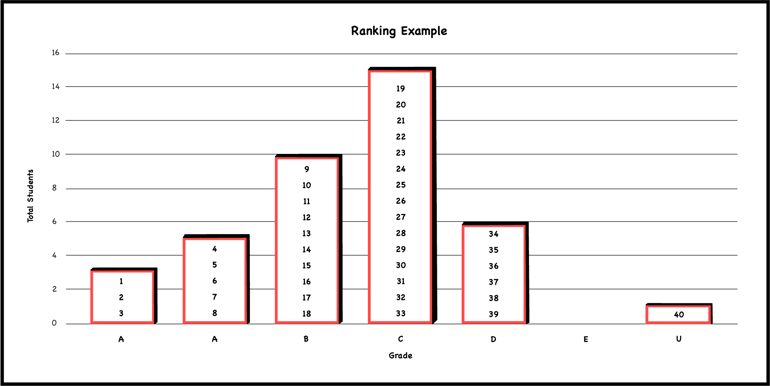

Ofqual then determined that, for example, a particular school would get three A*s, five As, 10 Bs, 15 Cs, six Ds, two Es, and one U in a given subject, and used the teacher ranking to distribute those grades. In this hypothetical example, if you ranked at the top you’d get an A*, and if you ranked at the bottom you’d get the U, irrespective of the grade your teacher assigned you.
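To make the allocation step concrete, here is a minimal Python sketch of handing out a fixed set of grade slots in rank order. It is an illustration of the idea as described above, not Ofqual's actual code: the function name, the student identifiers, and the data layout are all hypothetical, and the grade counts are the ones from the hypothetical school in this article.

```python
from typing import Dict, List

# Grades from best to worst, as used for A-levels.
GRADE_ORDER = ["A*", "A", "B", "C", "D", "E", "U"]


def assign_grades_by_rank(
    ranked_students: List[str], allocation: Dict[str, int]
) -> Dict[str, str]:
    """Give each student a grade purely from rank position.

    ranked_students is ordered best first; allocation maps a grade to the
    number of students who will receive it. Teacher-assessed grades play
    no part in this step.
    """
    slots = [g for g in GRADE_ORDER for _ in range(allocation.get(g, 0))]
    if len(slots) != len(ranked_students):
        raise ValueError("allocation must provide exactly one slot per student")
    return dict(zip(ranked_students, slots))


# Hypothetical school from the article: 3 A*, 5 A, 10 B, 15 C, 6 D, 2 E, 1 U.
allocation = {"A*": 3, "A": 5, "B": 10, "C": 15, "D": 6, "E": 2, "U": 1}
students = [f"student_{i:02d}" for i in range(1, 43)]  # 42 made-up IDs, best first
grades = assign_grades_by_rank(students, allocation)

print(grades["student_01"], grades["student_42"])
```

Note that nothing in the function looks at what a teacher predicted for an individual: once the counts are fixed, rank position alone decides the outcome.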

For cohorts of between five and 15 students, a combination of the teacher-assessed grading and algorithmic moderation was used. Finally, where there were fewer than five students sitting a given exam subject, Ofqual simply used the CAGs unchanged, despite knowing that in at least some cases, the teachers’ predictions were optimistic.
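The three cohort-size regimes can be summarised in a few lines of Python. This is a sketch under the thresholds stated above; the function name and the regime labels are my own, and the way the middle band actually weighted teacher grades against the model is not described here, so it is deliberately left out.

```python
def moderation_regime(cohort_size: int) -> str:
    """Return which grading approach applied to a subject cohort of this size.

    Thresholds follow the description in this article; the labels are
    hypothetical names chosen for illustration.
    """
    if cohort_size < 5:
        return "cag_only"      # teacher grades used unchanged
    if cohort_size <= 15:
        return "blend"         # teacher grades combined with the model
    return "model_only"        # grades driven by the model and rankings


for size in (4, 6, 15, 16):
    print(size, moderation_regime(size))
```

One consequence worth noticing is that a single extra student in a class could move the whole cohort from one regime to another.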

The plan was to apply essentially the same methodology to GCSEs. One difference was that, due to a recent overhaul of these exams, there was no prior public exam data to use to correct for differences in cohort ability. That said, with larger entries for GCSE, differences would tend to be smaller anyway. The algorithmically generated results for GCSEs were never published, because the government changed course before that could happen.

What went wrong?

There are at least three significant problems with the approach that seem quite obvious in retrospect:

The formula didn't take outliers into account.

If you were, say, a brilliant mathematician in a school that had not produced a brilliant maths student in the last three years, you literally couldn’t get the top grade, because the algorithm wouldn’t allow it. This is particularly unfair for the more academically able students in poorly performing schools across the country, but the effect is broader.

Rounding the student rankings unfairly punished some students.

A second, related issue is how the rounding was done. In the same way that it was impossible for some students to get the top grades even if they would have earned them, you could have a scenario where the algorithm determined that the second-lowest ranking in the school would be a D, and the lowest a U. In that hypothetical case, you could be one ranking position lower than another pupil and drop from a D to a U, even though you were basically at the same level of achievement. You can see how this works in the following graph:

All the teachers I spoke to for this article also made the point that assigning rankings could be arbitrary. “How you are supposed to tell the difference between candidate 222 and candidate 223 and put them in an order that is meaningful, I’m really not sure,” said Tristan Marshall, a science teacher and examinations officer at London’s Francis Holland School Sloane Square.

It wasn’t just difficult for large cohorts, either. Lian Downes, head of drama at St Bede’s School in Redhill, told me, “There seemed to be no awareness of the impact that would have on people’s lives. For my A-level cohort, I only had six students. I put in an A, two Bs and three Cs, and I ended up with two Bs, three Cs and an E. So they moved my lowest ranked student from a C grade to an E grade based solely on the fact that I had to make a largely arbitrary decision.”

Tessa Richardson, head of art at Culford School, offered a similar example: “In that particular year I didn’t have any candidates below a B grade. But because for the last three years I’d always had one D grade, the algorithm said I had to have a D grade, and therefore gave my bottom ranking student, who would actually have got a low B, a D.”

Small datasets generated statistically invalid results.

With smaller sample sizes, the process would not generate statistically valid results, as Marshall pointed out.

“You have say 15 to 20 students in total, but you are then subdividing that into smaller categories for each of the different grades,” he said. “And in addition, by design, the algorithm rounds down each time—so if you have 0.99 students getting an A, the algorithm translates that to zero As, not one. I think that was particularly pernicious. It’s heartless, frankly.”
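Marshall's point about rounding down is easy to see with a few lines of Python. This is only an illustration of truncation as he describes it, not a reproduction of Ofqual's rounding procedure; the function name and the expected counts for an 18-student cohort are invented for the example.

```python
import math
from typing import Dict


def floor_counts(expected_counts: Dict[str, float]) -> Dict[str, int]:
    """Turn fractional 'expected students per grade' into whole students
    by always rounding down."""
    return {grade: math.floor(n) for grade, n in expected_counts.items()}


# Hypothetical cohort of 18: the model expects 0.99 of a student at grade A.
cohort_size = 18
expected = {"A": 0.99, "B": 8.01, "C": 9.0}

awarded = floor_counts(expected)
unallocated = cohort_size - sum(awarded.values())

print(awarded)       # how many students get each grade after truncation
print(unallocated)   # students the truncated counts no longer cover
```

In this made-up case the A disappears entirely, and one student is left without a slot and has to be placed somewhere else in the distribution.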

In the end, according to a report in the Guardian, about 82% of A-level grades were computed using the algorithm, and more than 4.6 million GCSEs in England (about 97% of the total) were assigned solely by it. We can’t, however, independently verify the latter statement, as the algorithm-generated GCSE grades were never actually published.

The A-level grades were announced on 13 August 2020. According to the BBC, nearly 36% were lower than teachers' assessments (the CAGs); 3.3% were down two grades, and 0.2% were down three.

Legal challenges

Curtis Parfitt-Ford, an 18-year-old from Ealing in West London, approached Foxglove Legal, a small advocacy non-profit, because he was unhappy about the exam results—even though he got his predicted grades of two A*s and two As from Elthorne Park High School in Hanwell.

“The algorithm didn’t touch my grades,” Parfitt-Ford told me, “but having gone into my school on the day I saw plenty of students who were very deeply affected. I saw students in tears because they had been marked down several grades. And there wasn’t anyone around who could explain that. Worse, on results day there was still a lack of clarity around the appeals process.”

Alongside being a student, Parfitt-Ford is the founder and director of Loudspeak, a digital lobbying system for UK progressive campaigns. “Having worked in this area I have a pretty solid understanding of data protection rules, and I suspected this wasn’t in compliance with [the EU's General Data Protection Regulation], because GDPR gives you a right to opt out of any automated profiling that has legal or similarly significant effects on you.”

GDPR formed part of the case brought by Foxglove Legal, but there were other grounds for legal challenges. Parfitt-Ford told me that another cornerstone was that Ofqual “went outside of what they were legally mandated to do, which was to provide individualised assessment”. There were also grounds to argue that the algorithm was discriminatory.

The Foxglove team got as far as sending a pre-action letter, but the case didn’t come before a judge. A mere four days after announcing the A-level grades, the regulator reversed course, accepting that students should be awarded the CAG grade instead of the grade predicted by the algorithm.

The Universities and Colleges Admissions Service (UCAS) later said that a total of 15,000 pupils were rejected by their first-choice university on the basis of algorithm-generated grades. While the decision to reverse helped, many still missed out on their university places. “One of my students should have got an A, but was moved to a B,” Downes said.

“When the decision was made to reverse course it moved back up to an A but it was too late for her and she didn’t get any of her university places,” the teacher said. “By the time the grade was corrected, all the courses were full. She’s now working in Marks and Spencer waiting to reapply. This will stay with me. This is a young person who has had her struggles and has missed out on a place she was entitled to, because of an algorithm.”

Broad lessons

The Ofqual Algorithm story is interesting in part because of what it illustrates about the dangers of automating a process that has a big impact on the lives of real people. Here are some broad lessons that can be drawn from this whole experience.

Peer review can help identify flawed assumptions.

One thing I found striking when reading the Ofqual paper was that each step in the process made sense on its own, in that you could see how to get from one step to the next, yet no one seems to have looked at the algorithm as a whole and spotted its problems.

Techniques like code reviews in programming go in and out of fashion, but I’m personally a huge fan of them, at least in their less formal, change-based form. When a review is done well, someone with a fresh perspective who isn’t acquainted with the code and the reasoning behind it can spot things that someone who has worked on the problem probably won’t. An internal (or, better yet, external) peer review would likely have caught some of the issues in the Ofqual case.

On software projects I’ve managed, some of the most helpful advice has occurred when we’ve brought external people in—for example, to do security reviews on the architecture and early code before too much software has been written. It seems likely, in this case, that had someone been brought in and walked through the algorithm, they would have spotted some of the edge cases and potential issues.

Bring the right people into the room where decisions are made.

I don’t know for sure, but it seems likely to me that had the team working on the algorithm been discussing it with teachers, some of the flaws would have been apparent much sooner. As Richardson said, “Why would you not take this through a teacher panel first, even before coming up with a design?”

Parfitt-Ford went further: “I am not a lawyer, and I’m certainly not an education specialist or an ethics specialist. The government should have had all three of those, and all three should have been engaged in this process. I don’t know if they were, but if they were why did someone not ask if this was in the scope of what Ofqual can do? Is this compliant with GDPR? I don’t think those questions were asked in the first place, and that is arguably more worrying than the result that came out of it.”

Ofqual could have drawn on the expertise of statistics specialists, but didn’t. It emerged later that the Royal Statistical Society had offered to help with the construction of the algorithm, but withdrew that offer when it saw the nature of the non-disclosure agreement its members would have been required to sign. It seems extraordinary, given the importance of getting it right, that Ofqual's response was not to consider changing the NDA.

Verify the results.

When working on an algorithm, whether something machine-learning based or, as in this case, something much simpler, it is essential to be able to verify the results. Ideally, you need to be able to reference some sort of ground truth to validate the reliability of the model or algorithm you are using. Thinking about how a system will be tested from the outset also shapes how it is designed.

In a perfect world you’d want to be able to compare the Ofqual algorithm results against historical data to see how accurate they were at an individual-student level. The problem here is that the ranking system hadn’t been used before, and that meant that the results it produced couldn’t be validated ahead of time.

In this circumstance, another approach is to look for outliers, because they can often flag problems in data or reporting methodology. There should have been huge red warnings going off when the algorithm moved students up or down by more than a single grade. “Something has gone very wrong if students are dropping more than one grade,” Marshall said. “You don’t get it two grades wrong.”
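A check of this kind is cheap to build. The Python sketch below flags any student whose awarded grade differs from the teacher-assessed grade by more than one step. It is my own illustration rather than anything Ofqual ran: the function name, the record layout, and the student IDs are hypothetical, and the sample moves are modelled on the examples teachers gave earlier in this article.

```python
from typing import List, Tuple

GRADE_ORDER = ["A*", "A", "B", "C", "D", "E", "U"]
GRADE_INDEX = {grade: i for i, grade in enumerate(GRADE_ORDER)}


def flag_large_moves(
    records: List[Tuple[str, str, str]], max_shift: int = 1
) -> List[Tuple[str, str, str, int]]:
    """Return records where the awarded grade is more than max_shift steps
    away from the teacher-assessed grade (CAG).

    Each record is (student_id, cag, awarded). The shift is positive when
    the student was moved down and negative when moved up.
    """
    flagged = []
    for student_id, cag, awarded in records:
        shift = GRADE_INDEX[awarded] - GRADE_INDEX[cag]
        if abs(shift) > max_shift:
            flagged.append((student_id, cag, awarded, shift))
    return flagged


# Hypothetical records echoing the cases described by teachers above.
records = [
    ("art_01", "B", "D"),    # low B moved to a D
    ("drama_06", "C", "E"),  # C moved to an E
    ("drama_01", "A", "B"),  # one-grade move, within tolerance
]
flagged = flag_large_moves(records)

for row in flagged:
    print(row)
```

A report like this does not say who is right, the teacher or the model, but it does produce a short list of cases that a human ought to look at before results go out.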

Create and maintain psychological safety.

In addition, you need to think about whether the environment you are creating allows people to speak up. If you are a manager, then psychological safety is part of this.

In your one-on-one meetings you can, and should, create space for your direct reports to talk and you should be willing to ask questions. (“Do you have any concerns about this?”) It seems improbable to me that no-one working on this algorithm had doubts about its effectiveness. I don’t know if they were raised and dismissed, or if they were never raised. But clearly any concerns were not acted upon.

As a manager, always listen to people who are telling you things you don’t want to hear, take the time to reflect, and take action. If someone has been brave enough to raise a real issue with you, it is extremely likely that other people also have concerns but haven’t been confident enough or certain enough to speak out.

“As technology professionals,” Parfitt-Ford said, “I think it is really important to take time in the development process to think about whether what you are doing is right and equitable, and how it might be misused”.

Consider the impact on individuals.

And finally, never lose sight of the fact that your work impacts real people. “It's great to use computer technology to supplement education or any other sector,” Marshall told me, “but if you do it without regard for the individuals who you are impacting, I really think you’ve got a problem.”
