Imagine a world where you describe an auto-scalable, fault-tolerant computer cluster in a simple declarative language. Then imagine that you can create the cluster with a single command, and that you can change the setup and apply it to the cluster with just one more. Sounds appealing, doesn’t it? But what does setting up a computer cluster really involve these days? Take a cluster based on Mesos, a distributed systems kernel: it can be a tedious job full of manual steps, single-purpose scripts and headaches. In this blog post, we'll explain how we automated the setup of a Mesos cluster with Terraform. We made it reproducible and even fun (we think).

Technologies Used

- Terraform for dynamic infrastructure configuration.

- Mesos for abstraction of resources.

- Docker for running containerized applications.

- Zookeeper for improved fault tolerance and availability.

- The Marathon framework for optimal resource utilization and task scheduling.

- HAProxy for load balancing and scaling.

We will use standard Ubuntu 14.04 as the operating system on all virtual machines. We run everything on Google Cloud.

Terraform

Terraform lets you manage your infrastructure as if it were code: you describe resources in its own declarative language and apply them with a well-documented command-line tool. See the full documentation for details.

Installation

Install Terraform by following the official instructions.

Terraform Mesos Module

Modules in Terraform are self-contained packages of Terraform configurations that are managed as a group. Modules are used to create reusable components in Terraform as well as for organisation of code. We have created a Terraform Mesos Module, which you can use to easily create, update and delete a Mesos cluster.

In order to use the module, create a file called mesos.tf in your project. Copy and paste the following content:

module "mesos" {
  source                   = "github.com/ContainerSolutions/terraform-mesos"
  account_file             = "/path/to/your.key.json"
  project                  = "your google project"
  region                   = "europe-west1"
  zone                     = "europe-west1-d"
  gce_ssh_user             = "user"
  gce_ssh_private_key_file = "/path/to/private.key"
  name                     = "mymesoscluster"
  masters                  = "3"
  slaves                   = "5"
  network                  = "10.20.30.0/24"
  localaddress             = "92.111.228.8/32"
  domain                   = "example.com"
}

The source attribute refers to the module repository. This is where the heavy lifting is done by Terraform and a few shell scripts. Feel free to explore the project codebase, fork and play with it.

For the account_file, project, region, zone, gce_ssh_user and gce_ssh_private_key_file attributes, you need a Google Cloud account. Visit the Google Developer Console, create a new project and set its name as project in the module configuration. Now go to 'Credentials' under 'APIs & Auth' in the Developer Console and generate a new JSON key. Save it and set its path as account_file.

Next, you need to install the Google Cloud SDK and authenticate it with your Google Account (follow the instructions on the Google Cloud SDK homepage). As a result of the authentication, you will receive an SSH keypair. Enter the username you created it for as gce_ssh_user and the path to the private key as gce_ssh_private_key_file.

For region and zone, choose the ones that suit you best. All the available zones are listed in 'Compute > Compute Engine > Zones' in the Google Developer Console.

There are still a few attributes waiting to be filled in. Pick a unique name for your new cluster and set it as the name attribute. Choose the number of masters and slaves - these are the Mesos nodes. To achieve fault tolerance, we recommend three master nodes. The number of slave nodes depends on the scale of your application. For demonstration or testing purposes, you can start with two or three.

The network attribute is the address of the subnet in CIDR notation.

The localaddress attribute defines the IP range for which ports other than 80, 443 and 22 are open. Once the cluster is set up and running, you can visit, for example, port 5050 and manage Mesos from your browser, but only from the specified IP range.
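To make the two CIDR values more concrete, here is an annotated fragment of the module block. It is only a sketch: the commented-out alternative uses 203.0.113.0/24, a range reserved for documentation that stands in for a hypothetical office network, and it assumes the module accepts any valid CIDR range for localaddress, not just a single /32 address.

```hcl
module "mesos" {
  source = "github.com/ContainerSolutions/terraform-mesos"

  # ... all other attributes as in the full configuration ...

  # Subnet in CIDR notation: a /24 spans the 256 addresses
  # from 10.20.30.0 to 10.20.30.255.
  network = "10.20.30.0/24"

  # A /32 matches exactly one address, so only this single machine
  # can reach ports other than 80, 443 and 22 (for example 5050).
  localaddress = "92.111.228.8/32"

  # Hypothetical alternative (assumption, not taken from the module docs):
  # open those ports to a whole office range instead of one address.
  # localaddress = "203.0.113.0/24"
}
```

Keeping localaddress as narrow as possible seems the safer default, since it limits who can reach the management interfaces.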

The domain attribute tells the Marathon HAProxy Subdomain Bridge on which domain the services in our cluster run.

Mesos setup overview

The Mesos cluster setup we use is inspired by the great article from Justin Ellingwood: How To Configure a Production-Ready Mesosphere Cluster on Ubuntu 14.04. Justin's article helps you set up a Marathon framework running on Mesos, which is ready to run Docker containers.

Launching a cluster using Terraform

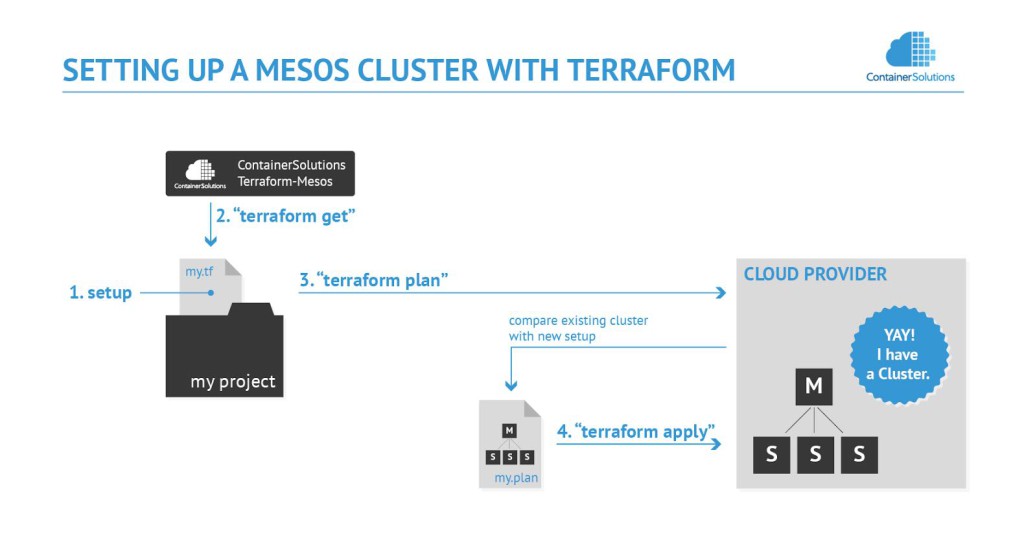

Before we can launch our cluster, we need to update the sources for the module. We do this by running terraform get in our working directory.

When our configuration is in place and our module is up to date, we can go ahead and launch our cluster. It is a two-step process. First we create the plan. By running terraform plan -module-depth=1, Terraform refreshes information about the actual state of the infrastructure and resources and spits out a graph of changes that need to be executed to get the cluster to the state described in our setup.

As soon as we review the plan, we can see that some values are already present, whilst others are to be computed during the execution. That’s a normal feature of Terraform: some values can only be determined after other parts of the plan have been executed.

If we like the plan as it is, we can run terraform plan -module-depth=1 -out my.plan (give it any name you like), which will record the plan to a file. Now we can execute the plan using: terraform apply my.plan.

Note: if we don’t record the plan to a file, we can still run terraform apply, and if nothing has changed on the actual cluster since we ran terraform plan, we will get the same end result. Creating the file fixes the plan, so we know for sure what is going to be executed regardless of the state of the cluster.

While waiting for the plan to be executed, we can see another interesting thing. Some actions are run in parallel. This is a nice feature if we manage five nodes but a killer feature if we manage five thousand. This is technically possible because the plan is stored as a graph. The graph clearly captures dependencies of actions and safely lets us speed things up with no additional costs.

Alright. As we pondered graphs, our cluster was created in Google data centers. The cluster is now ready to run applications on Marathon. The cluster can also be scaled or destroyed - and these actions can be done from the very same command line we just used to build it. Awesome.
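As a rough illustration of scaling, the sketch below assumes that the cluster size is driven by the slaves attribute in mesos.tf and that raising it is all the module needs. The value "8" is purely illustrative.

```hcl
# mesos.tf (fragment): only the changed attribute is shown.
module "mesos" {
  source = "github.com/ContainerSolutions/terraform-mesos"

  # ... all other attributes stay exactly as they were ...

  # Illustrative new size; the configuration used earlier had "5".
  slaves = "8"
}
```

After saving the change, running terraform plan -module-depth=1 and then terraform apply again should bring the cluster to the new size, and reviewing the plan first shows what Terraform intends to add. To tear the whole cluster down, Terraform provides the terraform destroy command.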

Image 1 - Setting up a Mesos cluster with Terraform

Next steps

We are currently working on adding VPN support to the cluster and replacing the HAProxy Marathon Bridge with Mesos DNS. Additionally, we are finishing an article that describes the implementation details of the Terraform Mesos module.

We are also looking forward to feedback from you, so please let us know your thoughts about this project, and feel free to contribute or report any issues to our GitHub repository.

Further Reading

In one of our previous blog posts, we showed how to deploy a web container on Mesos.
