In Part 1, we discussed what can be done at the individual and team level to commit to a certain level of quality and to ensure that quality practices become part of the daily routine and are not seen as a separate procedure or someone else's problem.

It's easy to recognise when someone comes to you and tries to convince you to ignore the quality aspect of your software delivery. You can raise your shield and defend your stance when you are attacked this openly. But there's a more insidious enemy just waiting to sneak in and work its mischief on your product: the corporate culture that views quality as a varnish on the product rather than part of the foundation. What Conway's Law dictates for the communication structure also applies to the quality of the product: the level of quality will settle at the organisation's understanding of quality. And, rather like the proverbial boiling frog, people within the organisation find it very difficult to see a gradual deterioration in overall software quality.

It’s simple human psychology, but it’s not that simple

I have always had a soft spot for articles on human psychology, even though the field is far from my formal education. I find it quite fascinating to gain some insight into the reasons for the behaviours I observe in my profession. I am likewise always trying to figure out what characteristics of the organisation make people behave a certain way, voluntarily or involuntarily.

Henri Tajfel, a Polish social psychologist, suggested in his work on social identity theory (1979) that the groups (e.g., social class, family, football team, etc.) to which people belong are an important source of pride and self-esteem. Groups give us a sense of social identity—a sense of belonging to the social world—and we divide the world into "them" and "us" based on a process of social categorization.

Irving L. Janis coined the term "Groupthink" and published his research in his 1972 book Victims of Groupthink. Janis was trying to understand why a team that reaches an excellent decision one time can make a catastrophic one the next. He found that a lack of conflict or opposing ideas within a group leads to decisions that are not supported by enough information. He then named this lack of opposition within a group “Groupthink” and pointed out that it happens in an environment where there is:

- A strong, persuasive leadership style.

- A high level of group cohesion.

- Intense pressure from outside to make a good decision.

It is not difficult to see examples of these three characteristics in a product development organisation. Janis also described 8 symptoms of Groupthink, which are also quite common in development teams. Looking at these symptoms, one can see a pattern between them, one of them leading to another, until the team becomes a unified entity which does not question its own decisions whilst judging every decision of other teams:

Rationalisation: A member rationalises the group's decision despite evidence to the contrary.

Peer Pressure: If a person expresses a contrary opinion or questions the reasons for the group's decision, the other team members pressure or punish that person into complying.

Complacency: The team begins to feel invincible after a series of successful decisions. Their previous decisions were unchallenged and successful, so what's to prove that their future decisions will not be too?

Censorship: Individuals begin to censor their own thoughts to conform to the group's decision. Especially if they do not see other contrary thoughts, they tend to think that everyone else agrees and their idea must be wrong.

Illusion of Unanimity: When no one speaks up, everyone thinks that the decision is unanimous, whilst in reality everyone censors their contrary ideas.

Moral High Ground: Each member of the group sees themselves as moral. Therefore, it is assumed that the combination of morally minded people will not make bad or immoral decisions. When morality is used as the basis for decision making, the pressure to conform is even greater because no individual wants to be perceived as immoral.

Stereotyping: As the group becomes more uniform in its views, its members begin to view outsiders as people whose morals and characteristics are different from, and inferior to, their own.

Mind Guards: Group members may appoint themselves to protect the group and the group leader from information that might be problematic or contradict the group's views, decisions, or cohesion.

Groupthink tends to be more prevalent in organisations that lack diversity (or have diversity but poor psychological safety).

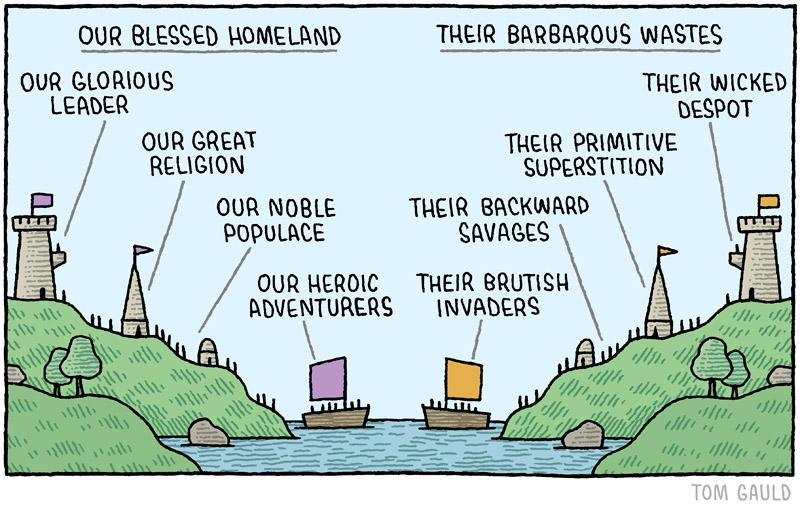

In organisations with silos, such as separate teams for analysis, development, test/QA, and operations, each team tends to behave like an individual with a hive mind of its own, and to view the other teams the same way. This means that the personal behaviours and cognitive biases arising from the social identities of the team members also apply at the team level, as wonderfully illustrated in this glorious cartoon from Tom Gauld for the Guardian (and used with permission):

People perceive the reasons for certain behaviours of themselves and others differently, and perceptions may also be based on the effects of the behaviour on themselves. These are commonly referred to as "attribution errors" or "attribution biases". Remember that biases are results of people's System 1 thinking, which is our brain's quick, automatic, unconscious, and emotional response to situations and stimuli. We do not even realise whether or not our perceptions are influenced by these biases.

Self-Serving Bias

People tend to attribute success to their own abilities and efforts, but failures to external factors. In a work environment, it is easiest to blame external factors, namely the other teams. How many times have you heard software engineers blame a poor system on poor management or bad marketing rather than poor engineering? The problem is further exacerbated in a company with silos, such as where you have a separate analysis team, development team, testing (or quality assurance) team, operations, DBAs, and so on. In such circumstances resentment can quickly escalate.

An incident in a production environment is rarely the fault of a single team. A bug in the code is there because the development team did not do its job properly, but it was the testing team that let that bug leak into the production environment. With a stolen database credential, the network operations team should have taken precautions on the firewall to prevent attackers from getting into the network so easily; but then the development team should have known better than to keep the database connection information in an unencrypted configuration file; the database administrators should have implemented a mechanism to rotate passwords; and the cybersecurity team should have made sure that the other teams were taking these precautions in their areas of responsibility.

The point, really, is that there is no shortage of "others" to shift the blame to.

Correspondent Inference Theory

In their Correspondent Inference Theory (1965), Edward E. Jones and Keith E. Davis claim that an individual tends to "take it personally" when someone inadvertently does something that may negatively affect them. We tend to think that the behaviour was personal and intentional, when in fact it was just an accident or consequence of an external event.

When things go wrong and the finger pointing game begins, teams begin to see unrealistic but personal reasons for the other team's mistakes. The testing team's failure to detect the bug that made it to the production environment can be understood as a tendency to prioritise the work of the "other development team" over "our" work, when in reality there are not enough people on the testing team to properly test both applications.

Fundamental Attribution Error

Fundamental attribution error (FAE), also known as correspondence bias or attribution effect, is probably the most common of all attribution errors, and no one is exempt from it. When judging someone else's behaviour, people tend to underemphasise situational and environmental explanations whilst overemphasising dispositional and personality explanations. When judging their own behaviour, in contrast, they tend to overemphasise situational and environmental factors over dispositional ones, such as character traits and beliefs.

This bias leads people to easily forgive themselves for a bad decision by justifying it with an external excuse. However, when someone else makes the same bad decision, even in the same context, people find it difficult to forgive them and conclude that the bad decision is the result of a flawed personality.

So what?

At this point you might be wondering what good knowing about these attribution biases does you.

On a personal level, you may benefit from rethinking your decisions in light of these biases and figuring out whether it is your unconscious System 1 that is making that decision or whether it is your logical System 2.

At the organisational level, it is more difficult to deal with prejudice. When an incident occurs you set out to find the root cause to ensure that something like this does not happen again in the future. Your plan is to update the company's processes that led to the incident. No matter how good your intentions are, all you will get from the teams you interviewed about the incident is their skewed and twisted opinion of what happened, not objective data.

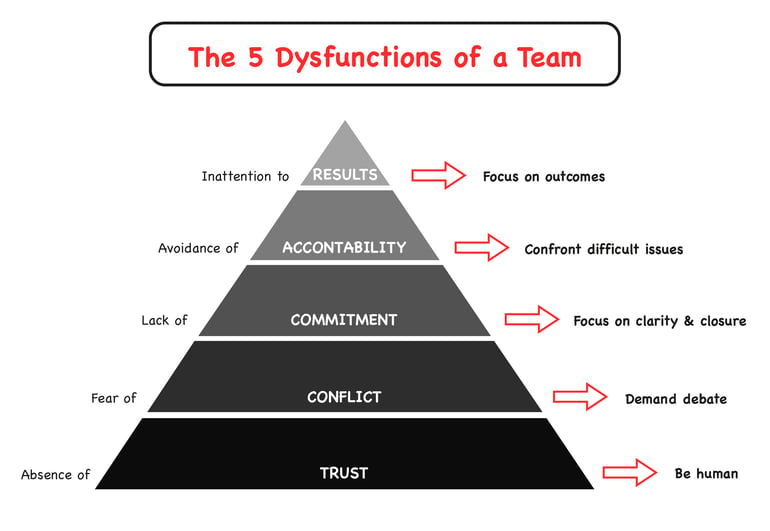

Patrick Lencioni's book The Five Dysfunctions of a Team places the "lack of trust" at the bottom of its pyramid, making it the most important dysfunction an organisation must solve above all others.

In a product organisation where everyone's actions affect the others, "blame culture" quickly creeps in. If left unaddressed, these disputes harden over time into callous views of each other that can no longer be repaired. The organisation must take preventive and proactive measures, early and continuously, to maintain mutual trust among all employees.

What is a “company”—an inert entity—capable of doing?

On paper a corporation is a legal entity, nothing more. But as we have seen in the examples above, a group of people who rally around a common understanding, goal, or passion begins to act as a single body. That's what we mean when we talk about "corporate culture". Although corporate culture is the collective culture of people at all levels, it usually flows from the top down: to teams and then to individual employees. As a leader, your behaviour (that is, what you do) has a much greater influence on how other employees behave than what you say. Behaviours by top management that contradict the advertised company culture weaken the beliefs of all other employees.

The Oracle Cloud incident

I worked for a company as the head of architecture. This company developed extensively with Microsoft .NET and used SQL Server as the backend database. We had some workloads running on Microsoft Azure (which was the obvious choice) and specifically used PaaS App Service for our application and DBaaS MSSQL service for our databases. It was a bad time for the Turkish economy, and the Turkish Lira (the currency the company earned) was depreciating against the US Dollar (the currency our cloud services were billed in), meaning that bills were increasing month over month.

Those were the good old days when Larry Ellison was reputedly saying "Maybe I am an idiot, but I have no idea what anyone is talking about. What is it? It's complete gibberish. It's insane. When is this idiocy going to stop?" about cloud computing.

Nonetheless, Oracle approached us and offered to fix the exchange rate for 12 months if we guaranteed a certain amount for the whole year. We, the architecture team, ran a PoC with Oracle Cloud and found that it lacked a PaaS application service that could handle .NET workloads, as well as a DBaaS service for MSSQL Server.

We reported back to management that Oracle Cloud was not an option for us because we already had our own data centre and the main advantage of the cloud for us was the use of platform services and the associated easy scaling of resources based on expected utilisation. Nevertheless, upper management decided to settle for Oracle's offer because their goal for the year was to reduce expenses. The result was, predictably, a disaster, as Oracle struggled to deliver our cloud account for several months, after which teams struggled to move their applications to the new platform.

Alongside my work as architect, I also acted as an agile coach. The company was undergoing an agile transformation, and I was selected as the Scrum master of the team leading it, which consisted of senior and mid-level managers. We ran sprints in which we made organisational changes, but the impediments we faced were difficult to remove without C-level intervention. I cannot say that the CEO was not supportive; after all, he was the one who signed off on all the expenses, and he was present at the first training session for the entire two days. But he never attended our sprint reviews, to which the entire company was invited, even though the CEO is the most important stakeholder of all.

My team asked me to coach him and to make him more visible. I spoke with him and he readily agreed to hold a weekly session for us.

In our first session, we talked about commitment, and I told him how important it was that he be present at our events to encourage the entire company to commit to the transformation efforts. He attended our next review meeting, but it backfired: everyone who would normally ask questions and criticise our efforts was very quiet because he was there. When I later interviewed some of the attendees, they all told me that they had been hesitant to criticise or ask questions because they thought we would not want to be shown up in front of the CEO.

In our second session, we talked about openness, and I told him to be bold and open in front of everyone and to expect them to be open in return. I suggested that the CEO publicly admit that upper management had made a mistake by opting for Oracle Cloud, show everyone that he had learned from it, and take remedial action. I also admitted my own guilt, as the head of architecture, for letting this happen, and promised to back him up. But he vehemently rejected my suggestion and continued to assert that the decision was correct, accusing me of not knowing the company's finances. To this day, I find it hard to understand how paying any amount, however small, for a service we did not use could be a good decision.

At the following review, the main subject of the meeting was openness and courage, and I talked about a plan we had devised to reinforce these two of the five Scrum values. We introduced a badge (gamification was an important instrument we used to get people on board more easily) that people who came forward and admitted their failures would receive and wear with pride. I naively waited for our CEO to come forward and claim the first badge, to no avail.

A few months later, the company consulting for us on agile transformation left, citing our lack of commitment, and the transformation effort stalled at that point, leaving the zombie Scrum teams with no real agile mindset. The contract with Oracle was also not renewed at the end of the initial 1-year period. And no one got the courage and openness badge at least for the time I kept working for them.

At that review meeting, I decided not to come forward and admit my own failure to stop the decision I deemed wrong, because it would eventually have turned into blaming the others involved, without their consent. By that time, I also more or less knew that my time with the company was coming to an end. To this day, I still wonder what would have happened if I had not hesitated, and I somewhat regret that I may have missed the chance to actually transform the company.

Even though this story is not directly about quality, it shows how the behaviour of upper management affects the corporate culture and the behaviour of all employees in the company.

To unit test or not to unit test

At the next company I worked for as an architect, my primary role was to lead the Cloud Native transformation of the financial software suite. As an avid advocate of quality and testing, one of the first things I did was review unit test coverage. I was taken aback by the results, as there was virtually none. I then interviewed the lead developers on the various development teams and got their opinion on this situation. Every single one of them told me that they would like to develop tests because the manual test cycle was taking too long, there were a lot of incidents in production, and they were not happy with the current situation. But the code was quite old, and there were methods with two thousand lines of code that were not testable at all.

I approached the CEO of the company and told him that neither the Cloud Native transformation nor the quality efforts would achieve anything on their own in the short term, and that there needed to be a long-term plan to overhaul the entire system. I advised him that we could use the strangler pattern: taking some code out of the legacy system, rewriting it as separate services using .NET Core (the legacy code was in .NET Framework, which does not run on Linux and therefore is not suitable for containerization unless you are crazy enough to use Windows containers!), and adding unit tests and integration tests to make sure the pieces fit correctly into the overall system.

This approach would take time, but would end up solving all of our problems at once. I even presented him with a plan of where to start, when we would see the first results, and so on, and I thought I had convinced him.
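To make the idea concrete, here is a minimal sketch of the strangler pattern in Python rather than .NET. Every name in it (`StranglerFacade`, `legacy_monolith`, `new_invoice_service`, the `/invoices` path) is hypothetical and chosen only for illustration; it is not the design we actually proposed. A facade sits in front of the legacy system and forwards each request either to a newly extracted service, if one has taken over that path, or to the legacy code otherwise.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


def legacy_monolith(path: str) -> str:
    """Stand-in for the untested legacy system (hypothetical)."""
    return f"legacy handled {path}"


def new_invoice_service(path: str) -> str:
    """Stand-in for a capability rewritten as a separate, tested service (hypothetical)."""
    return f"invoice service handled {path}"


class StranglerFacade:
    """Routes a request to a migrated service if one owns the path, else to the legacy system."""

    def __init__(self, legacy: Handler) -> None:
        self._legacy = legacy
        self._migrated: Dict[str, Handler] = {}

    def migrate(self, prefix: str, handler: Handler) -> None:
        """Register a new service as the owner of all paths starting with `prefix`."""
        self._migrated[prefix] = handler

    def handle(self, path: str) -> str:
        # Longest matching prefix wins, so a more specific migration
        # overrides a broader one.
        for prefix in sorted(self._migrated, key=len, reverse=True):
            if path.startswith(prefix):
                return self._migrated[prefix](path)
        # Anything not yet migrated still goes to the legacy code.
        return self._legacy(path)


if __name__ == "__main__":
    facade = StranglerFacade(legacy_monolith)
    print(facade.handle("/invoices/42"))   # served by the legacy system
    facade.migrate("/invoices", new_invoice_service)
    print(facade.handle("/invoices/42"))   # now served by the new service
    print(facade.handle("/payments/7"))    # still served by the legacy system
```

Each call to `migrate` moves one slice of behaviour behind tested code while the rest of the system keeps running unchanged, which is why the approach takes time but carries little risk per step.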

Later in the week, at the all-hands meeting, the CEO announced my findings and stated that he expected each developer to develop at least one unit test function per week, and that at every weekly all-hands meeting I should present a report showing who had and who had not done so. He also mentioned that this metric would be used in performance reviews.

This was exactly the opposite of what I was advocating. Pointing fingers at people, or bribing them to do something they do not even think they need to do, never leads to a solution. It also showed all the developers that the CEO knew nothing about unit testing, as he measured it by the number of functions, not by coverage.

What happened next blew my mind. Over the next week, every single developer committed multiple unit test methods to the code base. So the all-hands report shone brightly and was a perfect example of a company committed to quality. But the coverage metric did not move one bit. When I looked, I found that many of these test methods were blank and did not in fact test anything.
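A small sketch shows why the report and the coverage metric could disagree so completely. It is written in Python with the standard `unittest` module rather than in .NET, and everything in it is hypothetical: `apply_discount` is an invented production function, and the `calls` counter is only a crude stand-in for a real coverage tool.

```python
import unittest

# Crude stand-in for a coverage tool: counts how often production code runs.
calls = {"apply_discount": 0}


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical production function, used only for illustration."""
    calls["apply_discount"] += 1
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class BlankTests(unittest.TestCase):
    # Counts as one "unit test function" in a weekly report,
    # but never calls the production code and asserts nothing.
    def test_apply_discount(self):
        pass


class RealTests(unittest.TestCase):
    def test_applies_percentage(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_rejects_invalid_percentage(self):
        with self.assertRaises(ValueError):
            apply_discount(200.0, 150)


def run(case: type) -> unittest.TestResult:
    """Run one TestCase class and return its result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(case)
    result = unittest.TestResult()
    suite.run(result)
    return result


if __name__ == "__main__":
    for case in (BlankTests, RealTests):
        calls["apply_discount"] = 0
        result = run(case)
        print(case.__name__, "tests run:", result.testsRun,
              "all passed:", result.wasSuccessful(),
              "production calls:", calls["apply_discount"])
```

Both suites are green and both add to the count of test functions, but only the second one ever executes the code under test. A metric that counts functions cannot tell the two apart; a metric that looks at what the tests exercise can.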

Culture trickles down from the top, and in this case what the CEO was asking for was not real; he had no patience for a real solution. He patched up the acute problem instead of curing the chronic one. And the staff delivered exactly what he asked for.

It’s Not Just The Money We’re Talking About

The examples I gave above resulted in a waste of money and time, hurting only the company and its shareholders. But unfortunately, a lack of corporate ethics or of commitment to product quality often leads to even more serious problems. In Part 3, we’ll look at some cases where a lack of ethics had wider implications.
