This article is the conclusion of a three-part series.

In Part 1 of this blog series, we explored the bare-metal world and the tools that bring its provisioning closer to the cloud experience. We introduced a tool from Packet called Tinkerbell, which takes the best DevOps practices and applies them to server bootstrapping.

Part 2 focused on building our own homelab/mini data centre using Tinkerbell. We showed how easy it is to build a personal Kubernetes cluster and connect it to GitLab, the managed DevOps lifecycle platform.

In this last part of the series, we will focus on the paradigm that connects both worlds, cloud and on-premises: the hybrid cloud. We will finish by integrating our homelab with Google Cloud Platform (GCP).

One of the unfulfilled dreams of cloud computing is seamless integration between on-premises and cloud resources. The hybrid cloud idea has been around for some time already. Even though the term was invented by vendors rather than users, it stuck. The idea of managing resources hosted in both the private data centre and the public cloud through a single interface is very tempting and attracts a lot of attention from enterprises.

There are multiple reasons why anyone would consider spreading their infrastructure across on-premises and cloud environments:

- Theoretically infinite scaling possibilities. If we go ‘hybrid cloud’, we can benefit not only from on-premises data centre resources, but we can also use the resources hosted in the cloud and scale the computing power according to our needs, without looking at physical limitations of our data centre or the hardware vendor’s time constraints on delivering new machines.

- Increased availability. By extending our on-premises data centre with the cloud, we gain another site, or even multiple sites, which can be used in the event of a data centre outage.

- Reduction in expenses. By going hybrid cloud and rearchitecting business applications, we can benefit from the cloud offerings: managed services that in turn can be used to reduce and optimise costs.

Of course, in order to fully benefit from such an architecture, a lot of organisational and business changes are required.

GCP Anthos

At the moment, each of the big cloud players has its own solution for building a hybrid cloud. Last year, Google Cloud Platform announced Anthos, a platform dedicated to hybrid cloud. Its main goal is to provide a consistent experience, whether you are deploying your application to on-premises or cloud environments.

Importantly, Anthos is able to work with any cloud, not only GCP. It adds an abstraction layer (Anthos GKE) for managing the Kubernetes installations where applications are to be deployed. Anthos GKE can be used either as a managed service in GCP or installed directly on-premises.

The Homelab in the Cloud

Since we have already provisioned an on-premises cluster, I thought it might be a fun challenge to actually connect the RPi homelab to managed Anthos GKE and try to administer it from there. Even though it sounded challenging, it was not. Connecting an on-premises cluster to the cloud is pretty straightforward and does not require any special skills. It is enough to follow the instructions from the documentation.

The only concern is that the software used for connecting clusters to Anthos is built for the x86_64 architecture. Since this software is not open source, it is not possible to recompile it for arm64. So the only way to attach our cluster to GKE was to add a new node to the RPi cluster, this time a virtual machine with the x86_64 CPU architecture.

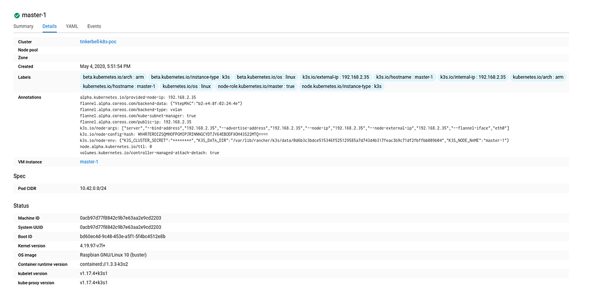

Luckily, it was a no-brainer with Tinkerbell and its workflows. Once we added this node to the cluster and forced the gke-connect pod to be deployed on the x86_64 node, we could see within a matter of minutes that the cluster was visible in the Google console. (If you are reading this on mobile, you can download a bigger version of this image here.)
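One way to force a pod onto a node of a particular architecture is a `nodeSelector` on the well-known `kubernetes.io/arch` node label, where x86_64 nodes are reported as `amd64`. The following is a minimal sketch of that idea rather than the exact steps we used: it only builds a strategic-merge patch and the matching `kubectl patch` command line. The namespace and deployment names are placeholders (assumptions), so substitute whatever the connect agent is called in your cluster.

```python
import json
import shlex

# Well-known label that the kubelet sets on every node.
ARCH_LABEL = "kubernetes.io/arch"


def arch_node_selector_patch(arch="amd64"):
    """Build a strategic-merge patch that pins a Deployment's pods
    to nodes with the given CPU architecture."""
    return {
        "spec": {
            "template": {
                "spec": {
                    "nodeSelector": {ARCH_LABEL: arch},
                }
            }
        }
    }


def kubectl_patch_command(namespace, deployment, arch="amd64"):
    """Return the argument list for a `kubectl patch` call that applies
    the node selector. Nothing is executed here."""
    patch = json.dumps(arch_node_selector_patch(arch), separators=(",", ":"))
    return [
        "kubectl", "-n", namespace,
        "patch", "deployment", deployment,
        "--type", "strategic",
        "--patch", patch,
    ]


if __name__ == "__main__":
    # Placeholder names (assumptions): replace them with the namespace and
    # deployment of the connect agent in your own cluster.
    command = kubectl_patch_command("example-namespace", "example-connect-agent")
    print(shlex.join(command))
```

Running the script prints a command that can be reviewed and then pasted into a shell. Once the patch is applied, the scheduler only places the agent's pods on nodes that carry the matching architecture label.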

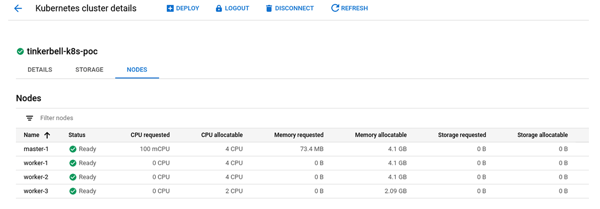

All the worker nodes were visible there as well (download a bigger version here):

Once the cluster was added to Anthos GKE, we could see metrics for all the nodes in our cluster. We could deploy Kubernetes resources using the standard GKE web interface. It was also possible to deploy products from the marketplace after installing it in the cluster. Unfortunately, none of the applications in the marketplace offered support for arm64, so it was not of much use.

Open Sourcing Sysadmin Knowledge

Bare metal has always been here and always will be. It didn’t go anywhere, even though the cloud vendors would like us to think it did. The only problem is that it never seemed to be a suitable friend for developers. Only a privileged caste of sysadmins in their caves had the secret knowledge of how to tame those machines.

Luckily, the world has moved forward, and tools like Tinkerbell shed some light on the secret knowledge of bare-metal provisioning. Even though the entry barrier for Tinkerbell is relatively high, we can imagine that in the coming months, as the tool gains visibility and popularity and builds a community around it, more generic workflows will become available and there won’t be a need to write a custom one unless there is a truly special requirement.

And who knows? Maybe at some point Packet will add a hub of workflows so that developers can share their work with each other, like Docker Hub.

End of the Journey

In this three-part series, we learned about the past and future methods of provisioning bare-metal servers. We discussed Tinkerbell, a tool for bare-metal provisioning that follows the best practices of the DevOps world. We put this tool into practice by building our own homelab based on Raspberry Pis and creating a workflow that deployed a Kubernetes cluster within a matter of minutes of powering it on.

Next, we made practical use of this cluster by installing a GitLab runner and executing a pipeline from GitLab.

We finished our series by introducing the concept of hybrid cloud and actually connecting our homelab to one of the big cloud providers, GCP. We touched on so many different concepts and ideas during this journey that I really hope you didn’t get lost in the middle and enjoyed it.

All the code used to create a homelab from this series of articles is available here.
