In the last two months, I've worked together with Chef (the company) to evaluate Habitat from a cloud native developer perspective. This is the first blog in a series of three where I'll share my experiences. The other two blogs will be about how to run the Habitat supervisor on top of Kubernetes, and how to create a Highly Available Redis cluster with automatic failover on top of Kubernetes.

What is Habitat?

Habitat is a new product by Chef that focuses on Application Automation. Its goal is to cover both building software from source and running it, by providing a supervisor that can perform basic runtime (re)configuration. The artifacts produced by Habitat contain both the packaged software and a supervisor. Chef's analysis is that runtime automation is critical to running a distributed application, and therefore must be part of how the application is packaged. Separating configuration management from packaging makes it harder to get right.

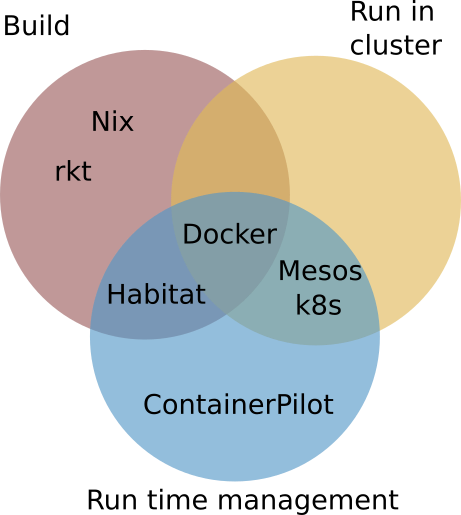

As I wrote in my previous blogpost about Habitat, this makes them target a unique segment of the market that no other project is attempting to fill.

In this blog, I'll explain the model behind the build system in Habitat and share my experiences of how it holds up from a cloud native developer's perspective.

Graphs: the model behind Habitat

In traditional Linux deployments, people would create .deb files (or some other deployable artifact) for their own applications and use a configuration management system such as Chef, Puppet or Ansible to install them. This limits which versions of installed libraries can be easily used to what is provided by the operating system. Software that uses older or newer versions of libraries must be patched to work with the provided version, or the packager must take extra steps to make the non-standard version available to this snowflake program. This introduces friction to the process of moving application code from a developer's machine to a production environment.

Container runtimes, such as Docker, provide an escape hatch for developers to solve these dependencies themselves. However, this often results in build artifacts that are very hard to audit for security purposes. There are several (commercial) offerings that try to remedy this problem by scanning the container images for security issues after they are built, but just like anti-virus software that scans for known viruses, this is a stop-gap measure that does not fundamentally solve the problem. Furthermore, several container images on the same machine may contain exactly the same versions of the same packages, but because of a limitation of the layered image model used by most current container runtimes, each copy is stored separately.

Habitat takes a different approach. Instead of forcing the whole ecosystem of applications to use one globally installed version of a library, it introduces choice. An application developer can specify exactly which dependencies, including their versions, she needs to make the application work properly. Versions can be specified for all dependencies, even for glibc, the main interface between the kernel and user programs.

Each Habitat package has a list of its dependencies that is stored in a plain text DEPS file. Not only that, but it also has a TDEPS file, which lists dependencies of dependencies (of dependencies, etc); the transitive closure of dependencies.
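To make the relation between the two files concrete, here is a minimal shell sketch that derives a TDEPS-style list from direct dependencies. The dependency graph inside direct_deps is hypothetical, the identifiers are shortened to origin/name, and this is not how Habitat itself generates the file; it only illustrates what "transitive closure" means here.

```bash
# Hypothetical direct dependencies (what a DEPS file would list per package).
direct_deps() {
  case "$1" in
    example/app)  echo "core/ruby core/glibc" ;;
    core/ruby)    echo "core/openssl core/glibc" ;;
    core/openssl) echo "core/glibc" ;;
    *)            echo "" ;;
  esac
}

# Print the transitive closure of a package's dependencies, one per line,
# sorted and without duplicates (the idea behind a TDEPS file).
transitive_deps() {
  seen=""
  queue="$(direct_deps "$1")"
  while [ -n "$queue" ]; do
    next=""
    for dep in $queue; do
      case " $seen " in
        *" $dep "*) continue ;;   # already visited, skip it
      esac
      seen="$seen $dep"
      next="$next $(direct_deps "$dep")"
    done
    queue="$(echo $next)"         # unquoted on purpose: trims whitespace
  done
  printf '%s\n' $seen | sort
}

transitive_deps example/app
```

The application only names core/ruby and core/glibc directly, yet core/openssl shows up in the result because core/ruby depends on it.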

The effective result is that applications have a dependency graph that can be inspected by external tools. Nix uses the same model. The build tools Buck (by Facebook) and Bazel (by Google) share it as well, but focus only on building software. The potential of a graph model is enormous: for Nix there is a tool called Vulnix that automatically downloads the Common Vulnerabilities and Exposures (CVE) database and checks whether an application depends on a package with a published CVE.
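A check in this spirit could be sketched in a few lines of shell. This is not Vulnix and not a Habitat feature: the function name, the file layout (one package identifier per line) and every identifier below are assumptions made up for illustration, and no real advisory data is involved.

```bash
# Print every entry of a TDEPS-style file that also appears in an advisory list.
# Both files are assumed to hold one package identifier per line.
vulnerable_deps() {
  tdeps_file="$1"
  advisories_file="$2"
  # -F: fixed strings, -x: whole-line matches only, -f: read patterns from file
  grep -Fx -f "$advisories_file" "$tdeps_file"
}

# Hypothetical input files for the demonstration.
workdir="$(mktemp -d)"
printf '%s\n' core/glibc/2.22 core/openssl/1.0.2j core/ruby/2.3.1 > "$workdir/TDEPS"
printf '%s\n' core/openssl/1.0.2j core/bash/4.3.42 > "$workdir/advisories"

vulnerable_deps "$workdir/TDEPS" "$workdir/advisories"
```

Because the full dependency list is plain text, a check like this needs no knowledge of how the package was built.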

When you install a Habitat application, Habitat first downloads the application artifact and then reads the TDEPS file in the artifact to see which dependencies it needs to install, reusing any that are already present on your machine. This often results in fewer bytes being transferred between your artifact repository and your production machines, which makes deployments faster.
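The reuse step can be pictured as a simple set difference. The sketch below assumes that an installed package is recognisable by a directory named after its identifier under some package root; the function name, the demo paths, and the version and release strings are all hypothetical, and the real installer does more than this.

```bash
# Print the TDEPS entries that still have to be downloaded: those without a
# matching directory under the package root.
deps_to_download() {
  tdeps_file="$1"
  pkg_root="$2"
  while IFS= read -r ident; do
    [ -n "$ident" ] || continue                     # skip blank lines
    [ -d "$pkg_root/$ident" ] || printf '%s\n' "$ident"
  done < "$tdeps_file"
}

# Hypothetical machine state: glibc is already installed, ruby is not.
demo="$(mktemp -d)"
mkdir -p "$demo/pkgs/core/glibc/2.22/20160612063629"
printf '%s\n' core/glibc/2.22/20160612063629 core/ruby/2.3.1/20161214004839 > "$demo/TDEPS"

deps_to_download "$demo/TDEPS" "$demo/pkgs"
```

Only the ruby entry is printed, so only that package would need to cross the network.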

Experiences with building software with Habitat

Packaging an application in Habitat involves writing instructions for building the software and for managing its configuration at runtime. I'll only touch on the build instructions in this blog post.

From a cloud native developer's perspective, Habitat packaging must be at least comparable to the ease of development that Docker provides. I think that Habitat is not exactly there yet, but has the potential to be a lot easier to use than Docker.

The build instructions are stored in a plaintext file called plan.sh, a shell script which must expose some shell variables and functions to interact with Habitat's build system.

Although for most ops people bash is their natural habitat (pun intended), a lot of developers only have a basic understanding of shell scripting. Personally, I know enough bash to write simple programs, but feel it's way too easy to shoot oneself in the foot. Therefore I believe that Habitat should provide sufficient build environments for most programming languages in the form of build packs.

Development of packages happens in a Studio, which is a chroot that only contains software installed by Habitat. This Studio is an environment that is fully isolated from your host operating system.

Building a package with Habitat consists of executing several callbacks, which make sense for C/C++ based applications, but less so for, e.g., a Java, Ruby or JavaScript application. I won't name all of these callbacks, but I often needed to override several of them with empty functions.

For a Ruby application, the steps needed to build it are basically set in stone: just run bundle install --deployment. At the moment, it requires 120 lines of bash to install a Rails application with Habitat. To be fair, the first half contains metadata, whilst the rest contains the callbacks that make Ruby and Rails work from within Habitat. An equivalent Dockerfile has 8 lines of code.
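To give an impression of what such callbacks look like, here is a heavily trimmed sketch of a plan.sh for a Ruby application. It is not the actual 120-line plan: the package name and origin are made up, dependency declarations are left out, and the choice of which callbacks to stub out is an assumption for illustration. The do_* callback names and the pkg_prefix variable follow Habitat's plan conventions.

```bash
# plan.sh (sketch): hypothetical package, dependencies omitted for brevity
pkg_name=my-ruby-app      # hypothetical name
pkg_origin=example        # hypothetical origin
pkg_version=0.1.0

# Callbacks designed around "fetch a tarball, verify it, unpack it" are
# replaced with no-ops so that the default behaviour does not run.
do_download() { return 0; }
do_verify()   { return 0; }
do_unpack()   { return 0; }

do_build() {
  # The one step a Ruby application really needs.
  bundle install --deployment
}

do_install() {
  # Copy the application into the package's install prefix.
  cp -R . "${pkg_prefix}/"
}
```

Even in this reduced form, most of the file describes how to build rather than what to build.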

I've discussed this with Chef, and we concluded that a build-pack-like solution is needed to reduce this overhead. These build packs should be smart enough to reduce the boilerplate to zero for all practical purposes.

Ideally, we'd end up in the following situation, which also takes 8 lines of code, but where each line is declarative, telling Habitat what to build, not how to build it, as the Dockerfile does.

    pkg_name=ruby-rails-sample
    pkg_version=0.0.2
    pkg_origin=delivery-example
    pkg_maintainer="The Habitat Maintainers <humans@habitat.sh>"
    pkg_license=('mit')
    pkg_source=https://github.com/jtimberman/ruby-rails-sample/archive/${pkg_version}.tar.gz
    pkg_shasum=b8a75cdea714a69a13c429a1529452e47544da7c10f313930b453becd0d2ef20
    pkg_build_pack=core/buildpack-ruby

Once development is finished, a package can be built in a clean Studio with hab pkg build, which creates a .hart file: the build artifact. This artifact can then be uploaded to a Depot, which stores artifacts, to make it available for installation in the production environment.

Final remarks

There are quite a few rough edges in Habitat. One of the more trivially fixed ones is that it insists on re-downloading packages over and over again during hab pkg build to ensure that the created Studio is not contaminated. This easily adds 30+ seconds to the build time, which in my opinion is a bit silly. It's fine to try to contact the network to see if a package has updates, but in 99% of cases a global cache of .hart artifacts would reduce the hab pkg build time significantly.
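The kind of cache meant here can be sketched as follows. Nothing in this block is Habitat code: fetch_cached, fake_download and the artifact names are hypothetical, and the download is replaced by a stand-in that writes a placeholder file so the example runs without a network.

```bash
# Stand-in for a real network download (hypothetical): writes a placeholder.
fake_download() {
  printf 'artifact bytes\n' > "$1"
}

# Reuse an artifact from the cache directory when present; otherwise fetch it
# once and keep the result for later builds.
fetch_cached() {
  cache_dir="$1"
  artifact="$2"
  if [ -f "$cache_dir/$artifact" ]; then
    echo "cache hit: $artifact"
  else
    mkdir -p "$cache_dir"
    fake_download "$cache_dir/$artifact"
    echo "downloaded: $artifact"
  fi
}

cache="$(mktemp -d)"
fetch_cached "$cache" core-glibc-artifact   # first build: fetches the artifact
fetch_cached "$cache" core-glibc-artifact   # later builds: reuse the cached copy
```

Since the cache sits outside the Studio, each Studio can still be created from scratch while only the first build pays for the download.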

A more complex issue is the fact that a plan.sh file and the final package have a one-to-one correspondence, much like a spec file corresponds to just one RPM package.

In other systems like Nix and Bazel, this relationship is n-to-m: one file can generate multiple packages, or even none at all. In those systems, packages can also be used to create partial results that are easily reused. For example, this allows them to fetch source files or git repositories just once, reducing build times significantly.

I really like the graph model behind Habitat, and its supervisors. The build system can be made better than Dockerfiles by having build packs that help people declare what they want Habitat to build instead of how it should be built. The constraint of one plan.sh per package is a bit unfortunate. On the positive side, it makes the whole system easy to understand and reason about.
