I recently came across a fun and interesting challenge. In the latest project I joined, a team wanted to do a "lift & shift to the cloud". As a Cloud Native engineer I've seen this pattern fairly often, although it never means quite the same thing.

Set up

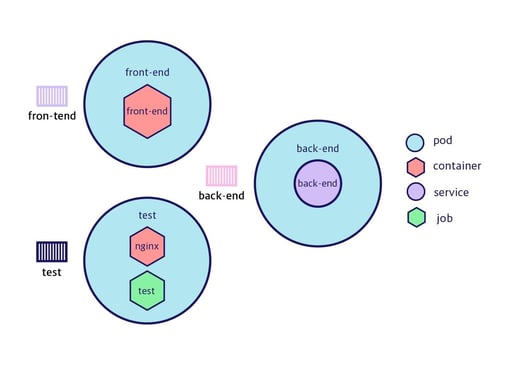

In this particular case, the team had 3 smaller, specialist, sub-teams building and testing the app: one took care of the front-end, another the back-end, with a third in charge of writing the tests for both layers.

If you're familiar with Conway's law, you've probably already figured out the architecture of the app: a thin front-end app built using React/Redux and cool UI controls; a back-end built in Java using Spring Boot; and a third app built in C# (.NET Framework 4.8) that executed the tests against a test environment through a browser, using Selenium as the driver. They each had their own code repository and used Azure Pipelines to build their code. The applications were running on an on-prem server (a physical machine), where the team would copy the binaries using a manually executed script. They had been experimenting with containerising their front- and back-end applications, and they planned to start running them in containers since they were also planning to upgrade the Java runtime version of the back-end.

Another regularly seen pattern is "since we're here...". The client wanted to bring their Docker images into a managed Kubernetes cluster. The IT department already provided a multi-tenant (namespace-based) managed Kubernetes cluster via Amazon Elastic Kubernetes Service (EKS), so we could easily deploy what we had so far into that cluster.

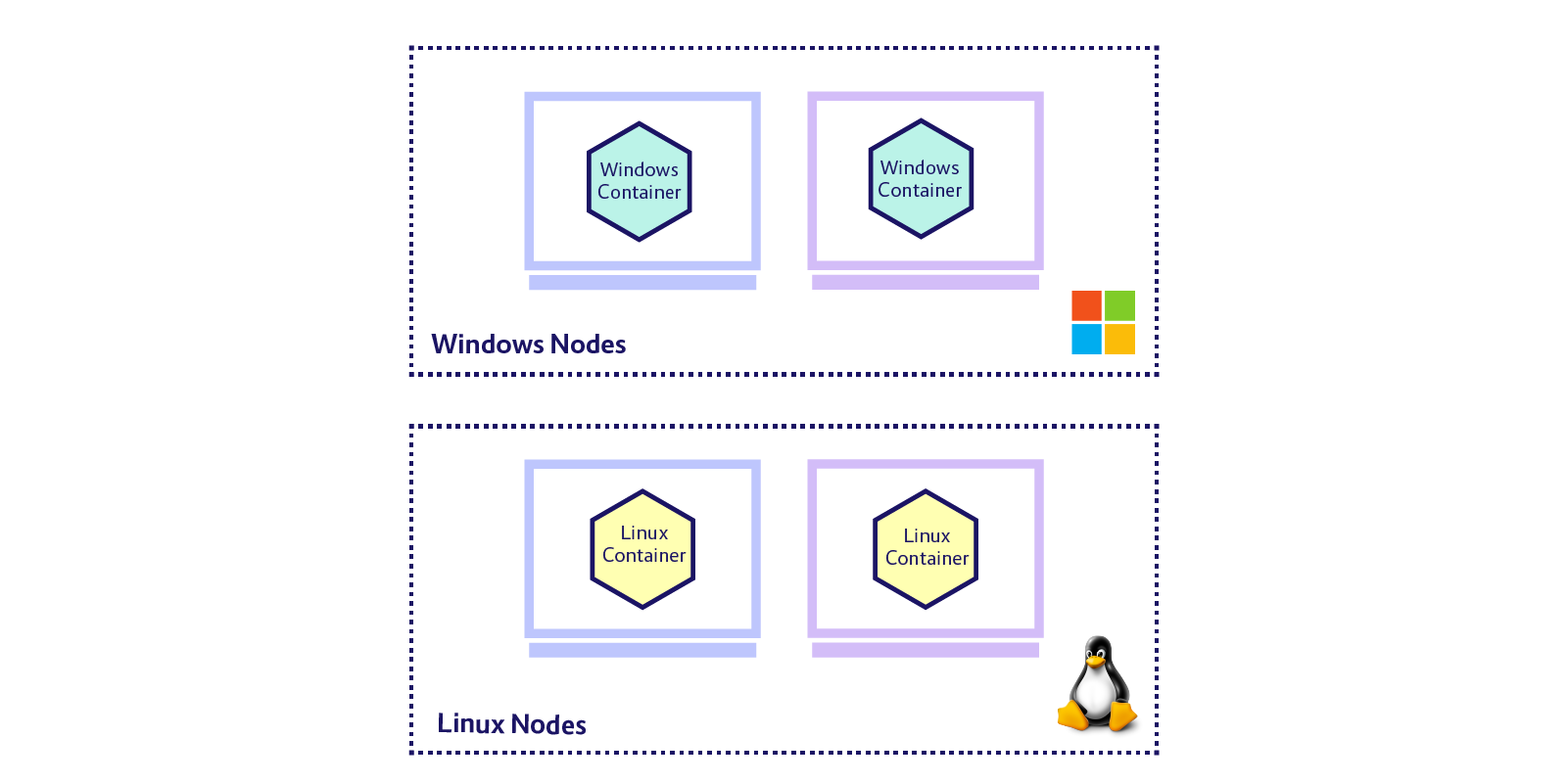

But what to do with the testing app? Keep in mind they were using Microsoft .NET Framework 4.8, the last living version of the Windows-only flavour of the platform. I had done some work with Windows Containers, but the team hadn't, and one of the challenges was that the UI testing app needed to start a browser in order to test the application.

Buckle up

So, first things first, we needed to build the application inside a container. To do that, we started from Microsoft's base image mcr.microsoft.com/dotnet/framework/sdk:4.8. I personally prefer working with PowerShell inside Windows, so I also changed the default shell to PowerShell: SHELL ["powershell", "-command"]. We then copied the application into the image and ran the build commands. The base image already has all the build tools, so I knew that nuget (.NET's package manager) and msbuild (Microsoft Build Engine) were there.

With the test app built inside our Windows Container, I needed to use a multi-stage Dockerfile in order to also run the app. Again, keep in mind I had to find an image where our app could spin up Microsoft Edge so that Selenium could test the front-end application. Was that even possible? To my pleasant surprise, it was. Microsoft provides an image with Edge and an msedgedriver installation to be used with Selenium WebDriver, which is exactly what we needed. So now all I had to do was copy the compiled binaries into this new image and that'd be it, right?

Wrong (of course). The image didn't have the runtime the test application required to run. I tried installing it with Chocolatey, but even that wasn't there. Luckily, Chocolatey is easy enough to install and very helpful when you need to install software on Windows environments. So, after installing Choco, I used it to install both the .NET Framework 4.8 runtime and .NET 5.0. Why .NET 5.0? Because it's a lot easier to run tests with .NET 5.0: just run the dotnet test command and .NET will magically find your test library and pick the right target environment, which in my case was the good ol' .NET Framework 4.8.

OK, so the Docker image was ready, but could we run it? Of course! Amazon EKS provides the option to have Windows nodes in your cluster, so it's just a matter of using the right well-known labels, annotations and taints.
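To make the scheduling part concrete, here is a minimal sketch of a Job pinned to Windows nodes. The label kubernetes.io/os is one of the well-known labels; everything else (the job name, the image and the os=windows taint) is an assumption for illustration, since the actual taint depends on how the Windows node group was configured.

```yaml
# Sketch only: names, image and the taint key/value are assumptions, not taken from the project.
apiVersion: batch/v1
kind: Job
metadata:
  name: ui-tests                    # hypothetical name
spec:
  backoffLimit: 0                   # do not automatically retry a failed test run
  template:
    spec:
      restartPolicy: Never
      nodeSelector:
        kubernetes.io/os: windows   # well-known label set on every node by the kubelet
      tolerations:
        - key: "os"                 # assumed taint on the Windows nodes
          operator: "Equal"
          value: "windows"
          effect: "NoSchedule"
      containers:
        - name: ui-tests
          image: registry.example.com/ui-tests:latest   # hypothetical image
```

The node selector sends the pod to a Windows node, and the toleration lets it land there if those nodes are tainted to keep Linux workloads away.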

We now had the right container running our tests, but we were facing another issue. The container had been prepared to run as a job: when it started, it would run the tests, and it would be terminated once they finished executing. That led to a problem, since the test results are written by the dotnet command to a file in the local file system, which was impossible to retrieve after the container had been terminated.

To solve this, we created a job that runs the test container with a shared volume in the cluster, alongside an Nginx container that serves the generated test results from that volume. A simple yet effective solution.
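One way the shared-volume idea could look is sketched below, under stated assumptions: a claim named test-results, a hypothetical UiTests.dll test library, the HTML logger of dotnet test writing index.html, and Nginx running as its own workload behind a service. It also assumes the storage behind the claim can be mounted from every node the two pods land on, which the setup above does not spell out.

```yaml
# Sketch only: every name, image, path and size below is an assumption.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-results
spec:
  accessModes: ["ReadWriteMany"]    # requires a storage class that supports shared access
  resources:
    requests:
      storage: 1Gi
---
apiVersion: batch/v1
kind: Job
metadata:
  name: ui-tests
spec:
  template:
    spec:
      restartPolicy: Never
      nodeSelector:
        kubernetes.io/os: windows
      containers:
        - name: ui-tests
          image: registry.example.com/ui-tests:latest   # hypothetical image
          # Write the report into the mounted volume rather than the container's own disk.
          command: ["dotnet", "test", "UiTests.dll",
                    "--logger", "html;LogFileName=index.html",
                    "--results-directory", "C:\\results"]
          volumeMounts:
            - name: results
              mountPath: 'C:\results'
      volumes:
        - name: results
          persistentVolumeClaim:
            claimName: test-results
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-results
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test-results
  template:
    metadata:
      labels:
        app: test-results
    spec:
      nodeSelector:
        kubernetes.io/os: linux     # assumes the stock Linux Nginx image
      containers:
        - name: nginx
          image: nginx:stable
          ports:
            - containerPort: 80
          volumeMounts:
            - name: results
              mountPath: /usr/share/nginx/html   # default Nginx document root
              readOnly: true
      volumes:
        - name: results
          persistentVolumeClaim:
            claimName: test-results
---
apiVersion: v1
kind: Service
metadata:
  name: test-results
spec:
  selector:
    app: test-results
  ports:
    - port: 80
      targetPort: 80
```

Because the report lives on the volume and not in the job's container, it survives the container being terminated, and each new run simply overwrites index.html with the latest results.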

Wrap up

If you're old enough, you probably recognize the phrase "I love it when a plan comes together". We all love it when things work as expected, even better when the path was not what we had initially planned.

By the end of the iteration with this customer, the results were very satisfying. We had a managed cluster (EKS), which is great when you don't want to worry about some things (and decide to worry about others), with both Linux and Windows nodes. There we could successfully deploy their application and run the tests when needed (triggered manually or by a Jenkins pipeline), with the latest test results always available as a static web app.

There's a beauty in solving hard problems with simple solutions, right?

In a single cluster with some Linux and Windows nodes: our application with its two deployments; their respective services; and our testing job and the Nginx pod with a service that exposes it.
