Managing secrets in Kubernetes can be a cumbersome job. In a multi-service, multi-environment setup, you can end up with hundreds of secrets without even noticing. It is hard to keep track of everything while also rotating secrets, onboarding new services, and onboarding new people with the correct access permissions.

There are a lot of existing solutions to these problems, like AWS Secrets Manager and HashiCorp Vault. These solutions typically have features far beyond what stock Kubernetes offers, and there's a good chance your organisation already uses one of them and is happy with it. So a lot of people have thought, "Why can't I use this with Kubernetes?"

This has led to an abundance of projects for syncing secrets from cloud services and injecting them into Kubernetes secrets.

With this blog post, we want to show you the popular projects in this space and explain how a community has formed around the new external-secrets project.

TL;DR:

Brief history of our new External Secrets Operator:

- GoDaddy has the popular kid in this space, with 1.5k stars

- For some reason, a lot of similar solutions are popping up everywhere https://t.co/9Aq1A7Vvg5

- We got together and considered merging

🧵👇

— Lucas Severo Alves (@canelasevero) February 26, 2021

Repo link: https://github.com/external-secrets/external-secrets

The most popular kid in school

GoDaddy's kubernetes-external-secrets (KES for short) is the most popular external secrets sync solution, with more than 1,700 stars on GitHub and a vast array of users. It is stable, with great community engagement and active maintainers pushing improvements frequently. A big reason it has gained traction is that it can easily be extended to support any external secrets provider you might want to use.

Currently it supports the following providers:

- Alibaba Cloud Secrets Manager

- Azure Key Vault

- Google Cloud Secret Manager

- IBM Cloud Secrets Manager

- AWS Secrets Manager

- AWS Systems Manager Parameter Store

- And HashiCorp Vault

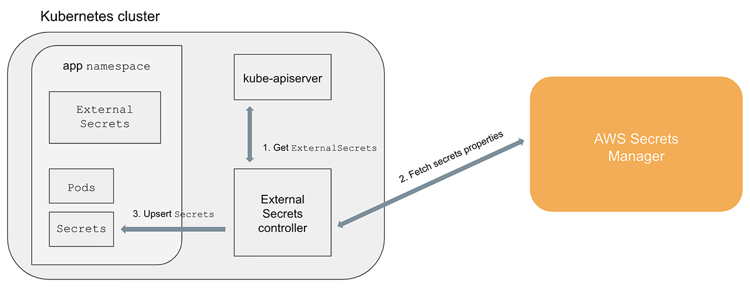

To get more insight into how KES works, you can have a look at the blog post “Kubernetes External Secrets”. But in a nutshell, like all the solutions described here, it reads secrets from external providers and syncs them into native Kubernetes secrets.

KES seems like the perfect candidate for being the de facto way of dealing with external secrets, considering that it supports all the important providers, has a great community, and is actively maintained, right? But it has a problem: it was developed in JavaScript.

There is nothing inherently bad about JavaScript, but in this use case there are some big advantages to using Go instead. The main one is Kubernetes' first-class SDK support for Go: Kubernetes itself and its reference client library, client-go, are written in Go. Another is tooling: with Go, we can use Kubebuilder or the Operator SDK to bootstrap the project and follow Kubernetes Operator best practices and standards.

Some of the other kids

The interesting thing about working on a problem that annoys you is that it often annoys a lot of other people too. But sometimes people start new projects to solve their problems instead of improving an existing one, either because of a very specific requirement or simply because they are not aware of another project with similar goals. In this section, we describe some of these similar projects.

Container Solutions External Secrets Operator

This project has 182 stars, is mentioned in the Argo CD documentation, and was created by Riccardo M. Cefala to meet a specific client need at Container Solutions. It is written in Go, and it was one of the candidates that could have been the base of the new central solution. It supports GCP Secret Manager, AWS Secrets Manager, Credstash, GitLab CI Variables, and Azure Key Vault. You can find more about the project in its README. Container Solutions is also helping kick off the new central effort with both engineering time and borrowed infrastructure to enable e2e testing.

Itscontained Secret Manager

This project has 36 stars and was created by Kellin McAvoy and Nicholas St. Germain. It is especially interesting because it was created to comply with the new common CRD being discussed (more on that later). It supports AWS Secrets Manager, GCP Secret Manager, and HashiCorp Vault, and it was also one of the candidates that could have been the base of the new central solution. You can find more about this project in its README.

AWS Secret Operator

Another relatively popular project, with 220 stars, created by Yusuke Kuoka. This one is provider specific: it supports only AWS and is not extensible to other providers. It is one of the easier-to-use options available, and being provider specific keeps its code simple. You can find more about the project in its README. This is also the project that sparked the initial discussion we talk about in the next section.

The get together

In the previous section we talked about how similar projects can start popping up all over the place. In our case, we noticed this when this issue started to fill up with other similar solutions.

An issue that was created about a single project kept growing as people pointed out that many other projects do basically the same thing. To list a few (sorry if we missed one of you; contact us and we can give you credit here 😀):

- https://github.com/ContainerSolutions/externalsecret-operator

- https://github.com/itscontained/secret-manager

- https://github.com/mumoshu/aws-secret-operator

- https://github.com/cmattoon/aws-ssm

- https://github.com/tuenti/secrets-manager

- https://github.com/kubernetes-sigs/k8s-gsm-tools

- https://github.com/godaddy/kubernetes-external-secrets

Having a lot of similar solutions popping up everywhere is a great indicator that this is a problem worth solving, with a lot of interest from the community. With that in mind, we considered merging efforts, and for the reasons mentioned earlier, we decided to write the new project in Go using Kubebuilder.

Container Solutions and Itscontained both had Go projects (externalsecret-operator and secret-manager) that could have been great initial code bases for the rewrite. In the end, we decided to start from scratch and copy and adapt only the code that made sense for the new central solution.

Getting the party started

The initial step in the merged effort initiative was to come up with a common Custom Resource Definition (CRD) that aligned with every use case that we could think of. Moritz Johner took the lead and came up with an initial draft CRD proposal; with reviews from the community we adapted the CRD to comply with our requirements.

Here’s the first version of the SecretStore CRD after the discussion:

```yaml
apiVersion: external-secrets.k8s.io/v1alpha1
kind: SecretStore
```

Here’s the first version of the ExternalSecret CRD after the discussion:

```yaml
apiVersion: external-secrets.k8s.io/v1alpha1
kind: ExternalSecret
```

With the CRD proposal finished, we created a new company-neutral organisation and started a new project under it. Here we started bootstrapping the project using Kubebuilder and Jonatas Baldin started implementing the initial reconciliation loop logic. We also moved the CRD spec to a new repo.

GoDaddy agreed to transfer the original KES project to the new company-neutral organisation, where it now lives. This is nice because, when External Secrets Operator (ESO) is ready, we can use the stars on external-secrets/kubernetes-external-secrets to make the new rewrite as popular as its sibling.

Introducing External Secrets Operator

With the CRD, language choice, and initial reconciliation loop out of the way, the new central solution was born. The first pre-release ships with support for the AWS Secrets Manager, AWS Parameter Store, and HashiCorp Vault providers. We also launched a simple website with documentation at external-secrets.io. This release was made possible by the great work of Moritz Johner, Kellin McAvoy, Jonatas Baldin, Markus Maga, Silas Boyd-Wickizer, yours truly, and other contributors (along with the contributors to the previous solutions, of course, from whom we copied, and plan to keep copying, a lot of code and ideas!).

The new central solution works in a very similar way to the original ones: it synchronises secrets from external third-party services into Kubernetes secrets.

Explaining inner workings by adding a new provider

A very good way of explaining how ESO works would be to just walk you through how to make a new secrets provider contribution, explaining the abstractions and where you would insert new implementations. Let's do that!

If you are not familiar with operators, let's recap really quickly. Very much like Kubernetes controllers, operators are applications running on your cluster that are configured via Kubernetes resources, in this case Custom Resource Definitions. They watch other resources in your cluster to see if they are following the configured state in the CRDs. If they are not, the operator will act on it, and correct it to the declared state. This is a very simple control approach, but very effective, and the foundation of everything Kubernetes.
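To make the idea concrete, here is a toy, single-pass version of that control loop in plain Go. It is a sketch under simplifying assumptions: the "cluster" is just an in-memory map, and the names `desiredState`, `cluster`, and `reconcile` are hypothetical. A real operator reads resources from the Kubernetes API and is triggered by watch events rather than called by hand.

```go
package main

import (
	"fmt"
	"reflect"
)

// desiredState stands in for what a custom resource declares (hypothetical).
type desiredState map[string]string

// cluster stands in for the state actually observed in the cluster (hypothetical).
type cluster struct {
	secrets map[string]map[string]string
}

// reconcile compares declared and observed state for one object and, if they
// differ, overwrites the observed state. It reports whether it changed anything.
func reconcile(name string, want desiredState, c *cluster) bool {
	got, ok := c.secrets[name]
	if ok && reflect.DeepEqual(map[string]string(want), got) {
		return false // already converged: nothing to do
	}
	// Copy the declaration so cluster state does not alias it.
	updated := make(map[string]string, len(want))
	for k, v := range want {
		updated[k] = v
	}
	c.secrets[name] = updated
	return true
}

func main() {
	c := &cluster{secrets: map[string]map[string]string{}}
	want := desiredState{"password": "s3cr3t"}

	fmt.Println(reconcile("db-credentials", want, c)) // true: object created
	fmt.Println(reconcile("db-credentials", want, c)) // false: already in sync

	c.secrets["db-credentials"]["password"] = "tampered" // simulate drift
	fmt.Println(reconcile("db-credentials", want, c))  // true: drift corrected
}
```

Because `reconcile` only acts when the observed state differs from the declared one, calling it repeatedly is safe, which is the property that lets a controller re-run it on every event.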

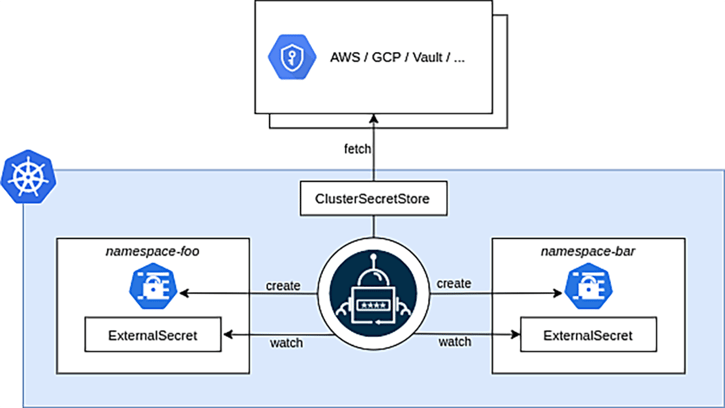

You may have noticed earlier that we have one CRD for the SecretStore and another for the ExternalSecret. The SecretStore tells the operator where to find the external provider, which credentials to use when calling its API, what type of provider it is, and other provider-specific information. The ExternalSecret tells the operator which secrets to sync, which secret version to use, how often to refresh, and so on.

You can find our controllers in external-secrets/pkg/controllers, which holds the main reconciliation logic used to sync secrets. We have a controller for each of the base Custom Resources, and we use these Custom Resources to configure the state of the secrets we want in the cluster. The ExternalSecret controller, for example, first reads the ExternalSecret and the SecretStore it references, then creates a client to interact with the external provider's API, and finally creates or updates a Kubernetes secret in your cluster. It is very simple.

Let's say you want to contribute a new external secrets provider. First, look over our generic Provider interface to understand what your provider struct has to implement.

```go
package provider
```

Here you can see that our Provider interface has only one method, which returns a SecretsClient. A SecretsClient has just two methods: one to get a single secret, and another to get a secret map (a secret whose value is a JSON string with multiple key-value pairs). So implementing a struct that follows this specification is pretty simple.

```go
// You may need to instead create a client from scratch with the methods needed depending on how you are importing the client functionality
```

To understand how ESO knows which provider to use in each case, check out the pkg/provider/schema/schema.go implementation. We register our supported stores in an in-memory store map, and we simply get the right one by its name in the controller reconciliation loop.
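A name-keyed registry like the one described can be sketched with a map guarded by a mutex. The `Register`, `GetProvider`, and `Registered` functions here are hypothetical; the actual schema.go signatures may differ (for example, by deriving the provider name from the SecretStore spec rather than taking a plain string).

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// Provider is a placeholder for the provider interface; only a name is needed here.
type Provider interface{ Name() string }

// The registry maps a store type name to its provider, guarded for concurrent access.
var (
	mu       sync.RWMutex
	builders = map[string]Provider{}
)

// Register adds a provider; registering the same name twice is a programming error.
func Register(name string, p Provider) {
	mu.Lock()
	defer mu.Unlock()
	if _, exists := builders[name]; exists {
		panic(fmt.Sprintf("provider %q already registered", name))
	}
	builders[name] = p
}

// GetProvider looks up the provider the reconciliation loop should use.
func GetProvider(name string) (Provider, bool) {
	mu.RLock()
	defer mu.RUnlock()
	p, ok := builders[name]
	return p, ok
}

// Registered lists provider names, sorted for stable output.
func Registered() []string {
	mu.RLock()
	defer mu.RUnlock()
	names := make([]string, 0, len(builders))
	for n := range builders {
		names = append(names, n)
	}
	sort.Strings(names)
	return names
}

type namedProvider string

func (n namedProvider) Name() string { return string(n) }

func init() {
	// Each provider package would typically register itself from an init function.
	Register("aws", namedProvider("aws"))
	Register("vault", namedProvider("vault"))
}

func main() {
	fmt.Println(Registered()) // [aws vault]
	if p, ok := GetProvider("vault"); ok {
		fmt.Println(p.Name()) // vault
	}
}
```

Registering from `init` means a provider only has to be imported to become available, and rejecting duplicate names surfaces two providers claiming the same key at startup rather than at reconcile time.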

Other places to look at are:

- apis/externalsecrets/v1alpha1, so you can declare the new store types;

- deploy/crds, so you can update the SecretStore and ClusterSecretStore to include your new provider objects;

- and, of course, your pkg/provider/your_provider/your_provider_test.go, since tests will be really important for us to include new providers!

Get involved!

We welcome and encourage all types of contributions to our project, technical or not! Help with manually testing the solution is also much appreciated, especially in these initial sprints, so we can validate it and prepare for stable releases.

Right now we are also focusing on automated e2e testing, which is only possible because Container Solutions provided some infrastructure for us to use! Any similar donations would immensely help the project going forward, so keep them coming.

We would also love you to join our bi-weekly community meeting and learn more about how this project could fit your needs. You can also join if you have a pull request open with pending challenges; if you think it would be easier to sync over audio and video, we are totally up for sharing a screen and going over code. Come help us make this solution the de facto way of dealing with secrets stored outside a Kubernetes cluster!

- The repo

- Open issues

- Our website

- Our community meeting notes file

- Videos of our community meetings

- Our channel inside K8S Slack
