As a company that depends heavily on cloud infrastructure, we pretty much depend on being able to connect to that cloud.

Our internet connection is our lifeline, you could argue. We all use laptops so we sort of expect a wireless connection to be available. Some people would say we have a lousy WiFi connection, although it works on my machine (because I set it up myself ;-). To be honest it’s really something we never set up properly, and which has been a source of frustration for a long time.

We started out small, like we always do, with a single Unifi AP, then two, and after people (mainly Mac users) started complaining, we decided to add an Airport to our setup. It solved some of the problems, but it broke two modems subsequently. It worked fine though while we were all in a single office room.

After we moved to a bigger office, however, roaming became a really annoying problem: devices would move from one AP to another, but the Macs preferred to stay connected to the Airport, leaving them connected but unable to actually transfer any data.

It was time to do something about it so we decided to remove the Airport and go all in on the Unifi gear, adding a router and a gateway to our local network setup.

I had been running the Unifi Controller app on my laptop which was less than ideal because it would only manage the APs when I was in the office and remembered to turn it on. We thought of installing the controller on a Raspberry Pi, but then we would have another device running in the office that we would need to maintain.

Now the Unifi Controller can be run remotely in the cloud and connect to your local network.

That’s nice, but it would mean I’d still have to set up and maintain an OS on an instance, even if I ran the controller in a container. It would be nice if I could just run a container without worrying about the infrastructure.

Luckily such a thing exists: it’s called Fargate. So I decided to give that a shot. One of the drawbacks of Fargate is that it does not offer any persistent storage, so I needed to see if I could work around that somehow.

Get it running using the AWS Console

Getting the controller to run on Fargate turned out to be pretty easy. First I backed up the configuration of the controller I had running locally, so I would not have to go through the whole setup again.

Then I started looking for, and found, a suitable Docker image with a controller. It turns out lots of people put the controller in a Docker container already. I decided to go with Jacob Alberty’s version because it seems popular and up to date.

To get it running using the AWS console, go to the ECS service in a region that supports Fargate (check the Region Table to see which services are supported in which regions). Click on the ‘Create Cluster’ button and choose the ‘Networking only’ option. It should mention ‘Powered by AWS Fargate’.

Click next, provide a name and create a separate VPC so it won’t interfere with any existing resources. The default CIDR block and subnets are fine. Click create and after a minute your cluster should be ready to go.

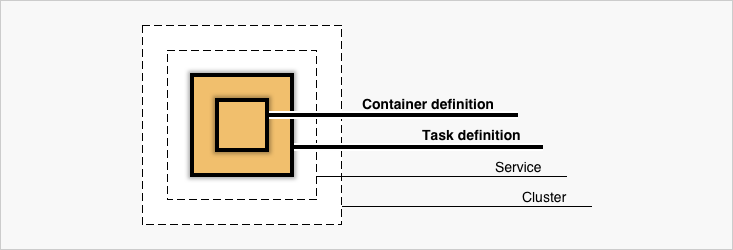

Next step is to create a Task Definition, which in turn will be used for running services on the cluster. So go to Task Definitions and click the button. Select the Fargate launch type compatibility. Provide a name for the task on the next screen, and select awsvpc as the Network Mode. Don’t mess with the Role fields because you don’t need them.

Regarding Task size: I ran the container for a while on my machine and checked the amount of RAM it used. It stayed below half a gigabyte, so I decided to go with a rather conservative memory setting.

It lasted for three days until a GC spike rendered the container unresponsive. I had to kill it and start anew. This time I gave it 1GB of RAM, and I have not encountered any problems in the few weeks since. I also gave it half a CPU, which seemed fine.
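Fargate only accepts certain pairings of CPU and memory at the task level, so it can help to check a size before entering it. The sketch below encodes the basic combinations as I understand them from the AWS documentation (an assumption: AWS may have added sizes since), with CPU in units where 1024 equals one vCPU and memory in MiB. The table and the function name are illustrative, not part of any AWS library.

```python
# Basic Fargate task sizes: CPU units (1024 = 1 vCPU) -> allowed memory in MiB.
# Assumption: taken from the AWS documentation as remembered; verify before relying on it.
FARGATE_SIZES = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
}


def is_valid_task_size(cpu_units: int, memory_mib: int) -> bool:
    """Return True if the CPU/memory pair appears in the table above."""
    return memory_mib in FARGATE_SIZES.get(cpu_units, [])


if __name__ == "__main__":
    # Half a vCPU with 1 GB, the size that ended up working in this post.
    print(is_valid_task_size(512, 1024))
    # Half a vCPU with only 0.5 GB is not an accepted pairing in this table.
    print(is_valid_task_size(512, 512))
```

Under this table, half a vCPU needs at least 1GB of memory, so the half-gigabyte setting is only available together with a quarter vCPU.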

Then you need to specify a container definition. Provide a name and an image (I used jacobalberty/unifi:5.6.37, which was what I had running locally at the time). I provided the same memory limits as I used for the task, because I planned to run only one container in this task.

The ports I used in the port mappings section were 8080, 8443, 3478 and 6789. There are more ports you can map, but I didn’t consider them necessary for my use case. You can find an overview of the ports the controller uses here. I didn’t change any of the other settings in the container definition.
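For readers who prefer to see the console choices as data, here is a minimal sketch of roughly what the task definition amounts to, written as a Python dictionary using the ECS task definition field names. The family and container names are made up for illustration, and the protocols are an assumption: the text above does not state them, so 3478 is treated as UDP (STUN) and the other ports as TCP.

```python
import json


def unifi_task_definition(image="jacobalberty/unifi:5.6.37", cpu="512", memory="1024"):
    """Build a Fargate task definition document for a single controller container."""
    # Assumption: 8080, 8443 and 6789 are TCP, 3478 is UDP (STUN).
    port_mappings = [{"containerPort": p, "protocol": "tcp"} for p in (8080, 8443, 6789)]
    port_mappings.append({"containerPort": 3478, "protocol": "udp"})
    return {
        "family": "unifi-controller",          # hypothetical name
        "networkMode": "awsvpc",               # required for Fargate
        "requiresCompatibilities": ["FARGATE"],
        "cpu": cpu,                            # task-level CPU units, as a string
        "memory": memory,                      # task-level memory in MiB, as a string
        "containerDefinitions": [
            {
                "name": "unifi",               # hypothetical name
                "image": image,
                "memory": int(memory),         # same limit as the task: one container only
                "essential": True,
                "portMappings": port_mappings,
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(unifi_task_definition(), indent=2))
```

The printed JSON has the shape that the ECS API expects when registering a task definition, but treat it as a starting point rather than a record of the exact settings used here.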

The last step is to create a service on the cluster. For this, navigate to the cluster page and under the Services tab click the create button. Select Fargate as the launch type, select the Task definition you created before and provide a name for the service. Select ‘replica’ as the service type, and specify 1 as the number of tasks to run. Leave the rest as defaults.

On the next screen, select the VPC and subnets you created before. I also used the auto-assign public IP option and chose not to use a load balancer. If you want to connect to the controller using a fixed IP or a DNS name, you need to take the load balancer route. I left the auto scaling option disabled for now.
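The service settings from these two screens can be sketched the same way. The dictionary below uses the parameter names of the ECS `CreateService` API; the cluster, service, subnet and security group values are placeholders that you would replace with your own.

```python
def unifi_service_request(cluster, task_definition, subnets, security_groups):
    """Build the parameters for a single-task Fargate service with a public IP."""
    return {
        "cluster": cluster,
        "serviceName": "unifi",                # hypothetical name
        "taskDefinition": task_definition,
        "launchType": "FARGATE",
        "schedulingStrategy": "REPLICA",       # the 'replica' service type
        "desiredCount": 1,                     # number of tasks to run
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": list(subnets),
                "securityGroups": list(security_groups),
                "assignPublicIp": "ENABLED",   # auto-assign public IP, no load balancer
            }
        },
    }


if __name__ == "__main__":
    request = unifi_service_request(
        cluster="unifi-cluster",               # placeholder
        task_definition="unifi-controller",    # placeholder
        subnets=["subnet-aaaa1111"],           # placeholder ID
        security_groups=["sg-bbbb2222"],       # placeholder ID
    )
    print(request["networkConfiguration"])
```

Leaving out a `loadBalancers` entry mirrors the choice above, and it is also why the public IP changes every time the task is replaced.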

After you create the service it should start running after a while. It takes some time to pull the image from the Docker Hub. I found it not really intuitive to find out what is going on when the service does not start. Because there are a lot of tabs with states, events and logs you end up clicking and clicking until you finally find a message that actually explains what is wrong.

If you’re lucky however, the task will just show RUNNING after a while and when you click on the Task ID it will show a Public IP. Navigate to this public IP on port 8443 (remember to use HTTPS) and you should be greeted by the Unifi Setup Wizard.
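If the page does not load, a quick way to tell "the controller is not up yet" from "the port is not reachable" is a plain TCP connection test. This is a small sketch using only the Python standard library; the function name is made up for this example, and it checks reachability only, not whether the controller itself is healthy.

```python
import socket


def port_is_open(host: str, port: int = 8443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False
```

Calling `port_is_open("<public IP of the task>")` returns `False` when the security group blocks the port or the container has not started listening yet.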

Configuring

Now comes the tricky part.

On the wizard page there’s an option to restore from a previous backup. If you do that, however, I found the application will upload the backup and restart.

Once it terminates, Fargate will start another container with a fresh image, and because there is no persistence you will be presented with the Setup Wizard again.

What worked for me was to pick the default options and only provide the minimum (like admin credentials) you need to get to the main controller screen. From there you can select Settings, Maintenance and restore the backup. That worked, as far as I recall. I cannot seem to reproduce it anymore in later versions of the controller, so that might have changed.

Next is to have the controller adopt your APs. I solved this in the end by ssh-ing to each AP and using the `set-inform` command. You do have to wait a bit, refresh the controller webpage and issue the command a second time, but then it works.
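Since the public IP changes with every new task, it helps to generate the command instead of typing it. The sketch below assumes the usual inform URL format of `http://<controller>:8080/inform` (8080 being one of the ports mapped earlier); the AP addresses and the controller IP are placeholders.

```python
def inform_command(controller_host: str, port: int = 8080) -> str:
    """Return the set-inform command that points an AP at the given controller."""
    return f"set-inform http://{controller_host}:{port}/inform"


if __name__ == "__main__":
    controller_ip = "203.0.113.10"             # placeholder public IP of the task
    access_points = ["192.168.1.20", "192.168.1.21"]  # placeholder AP addresses
    for ap in access_points:
        # Log in to each AP over SSH and run the printed command there, twice if needed.
        print(f"{ap}: {inform_command(controller_ip)}")
```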

Costs

I’ve heard concerns that Fargate is pretty costly. I ran the cluster for a month and burned about $30, of which ⅔ went to CPU and the rest to memory. For comparison, a t2.small instance in the Dublin region sets you back about $20 a month, and you could easily run the setup there. So it depends on whether you are prepared to spend the extra money to save time on maintenance.
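To make the comparison concrete, the arithmetic can be written out using only the rounded figures quoted above. These are approximate amounts from one month of use, not official prices.

```python
from fractions import Fraction

FARGATE_MONTHLY = 30        # USD, approximate monthly bill from the text
CPU_SHARE = Fraction(2, 3)  # share of the bill attributed to CPU
T2_SMALL_MONTHLY = 20       # USD, approximate t2.small cost from the text


def cost_breakdown(total, cpu_share):
    """Split a monthly total into its CPU and memory parts."""
    cpu = total * cpu_share
    return {"cpu": cpu, "memory": total - cpu}


breakdown = cost_breakdown(FARGATE_MONTHLY, CPU_SHARE)
premium = FARGATE_MONTHLY - T2_SMALL_MONTHLY

print(f"CPU: ${float(breakdown['cpu']):.2f}, memory: ${float(breakdown['memory']):.2f}")
print(f"Fargate premium over t2.small: ${premium}/month (${premium * 12}/year)")
```

That works out to about $20 for CPU and $10 for memory, so the premium for not maintaining an instance is roughly $10 a month.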

Improvements

Although the setup works, it’s not very convenient to have to restore a backup every time the server goes down. Also, the lack of a DNS name means you have to log in to each AP and adopt it manually every time you have a new server.

So I decided to redo the setup, and make it reproducible by using Terraform. This also serves as a form of documentation, so other people can see how I set it up.

I introduced a load balancer, and decided to run MongoDB in a separate container in order to have at least some form of persistence in my setup. The DB container should not have to restart each time the controller does, and this might just be all the persistence I need.

The Terraform code is rather complicated. There’s a lot of stuff you need to take care of to get it running, so it makes sense to put it all into scripts so you can reliably recreate it. Apart from the cluster, service and tasks there’s a VPC with subnets, gateway and routing tables.

You need a public DNS zone, a private DNS zone for service discovery, discovery services for each service, a load balancer with a target group and listener, a certificate for your load balancer, security groups for handling access and an IAM role to allow your service to call other AWS services. All of the Terraform files are available in this GitHub repo. I won’t go over them here; they are pretty self-explanatory.

I did have some trouble discovering the Mongo service from the Unifi service though. I could not get name resolution to work, and as a result the Controller would not start. In the end I tried with the IP I got from the Mongo task and I edited the Unifi container definition to use that IP in the environment variables. That worked, so it proves communication is possible. I think the Unifi container just has a problem talking to Route53.
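One way to narrow down a problem like this is to test name resolution separately from the application. The following sketch, using only the Python standard library, tries to resolve a service discovery name and falls back to a known IP when that fails. The host name, the fallback IP and the database name are hypothetical, and this is a debugging aid, not what the Unifi container does internally.

```python
import socket


def resolve_or_fallback(name: str, fallback_ip: str, port: int = 27017) -> str:
    """Return the first IPv4 address for name, or fallback_ip if it does not resolve."""
    try:
        infos = socket.getaddrinfo(name, port, family=socket.AF_INET, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        return fallback_ip
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr is (ip, port).
    return infos[0][4][0]


def mongo_uri(host: str, database: str = "unifi", port: int = 27017) -> str:
    """Build a MongoDB connection URI for the given host."""
    return f"mongodb://{host}:{port}/{database}"


if __name__ == "__main__":
    # Hypothetical service discovery name and the IP taken from the Mongo task.
    host = resolve_or_fallback("mongo.unifi.local", "10.0.1.23")
    print(mongo_uri(host))
```

Running this from a container in the same VPC shows whether the private zone answers at all, which separates a Route53 problem from a problem in the controller image.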

However, this did not solve my persistence problems at all. When restoring from a backup, everything is dropped from the database and then the controller restarts, showing the Setup Wizard again.

I suspect that the controller detects some files on start that cause it to repopulate its database. However, because it starts with a clean container every time, it doesn’t detect anything and just launches the Wizard.

Final thoughts

I am sad to say in the end I gave up on running the controller on Fargate. The absence of persistent storage turned out to be too much of a problem. I went and bought a Raspberry Pi instead and got the container up and running within an hour.

I’m prepared to revisit though, because I think Fargate is still a nice solution. Maybe I can share a volume between containers somehow and achieve some sort of persistence that way. What would be really helpful though is EBS support. EFS would also be helpful, but since you can't use `host` or `sourcePath` parameters in the volume definition when using Fargate, this just doesn't work. Still, I hope someday some sort of persistence will be offered so I get a chance to improve on this setup.

