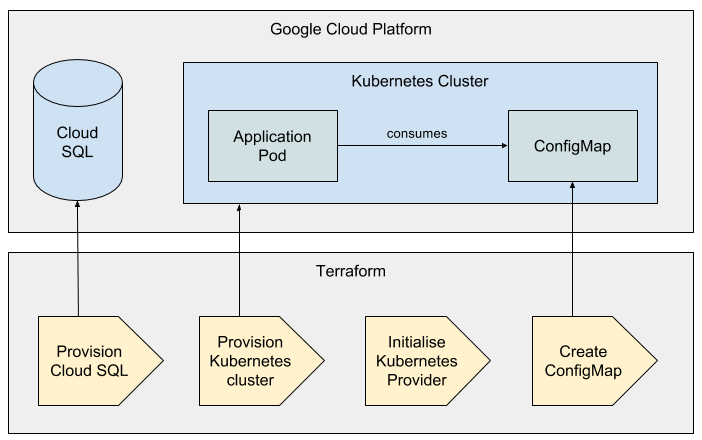

I recently encountered an interesting problem while terraforming Kubernetes clusters on Google Cloud. Our Terraform configurations have some important information which is needed by applications running on the cluster. These are either values for which our Terraform resources are the primary source, or values which are outputs from them.

Here are some examples of the types of values we want to propagate from Terraform resources to application Pods running on Kubernetes:

- Name of the GKE (Google Kubernetes Engine) cluster: specified in Terraform.
- Endpoint of the Cloud SQL database: generated by Terraform.
- Static client IP address: generated by GCP (Google Cloud Platform).

These values are all available while Terraform is running, but they are hard or impossible to access from a Pod running on the Kubernetes cluster. Most surprisingly, the cluster name is not available in any kind of metadata on Google Cloud, yet it has to be supplied when submitting custom container metrics to Google Stackdriver.

To propagate these values conveniently to our application Pods we can use the Terraform Kubernetes provider to create ConfigMaps or Secrets (depending on the sensitivity of the information) in Kubernetes. Applications running on the cluster can then have the values injected as environment variables or files.

The Kubernetes provider is quite new, and in a general sense it’s questionable whether you should be provisioning Kubernetes objects using Terraform. You can read more on this from Harshal Shah in this post: http://blog.infracloud.io/terraform-helm-kubernetes/. For our use case, however, the Kubernetes provider is a perfect fit.

I was worried that we have to provision the Kubernetes cluster itself (using the Google Cloud provider's google_container_cluster resource) and then use the credentials this resource outputs to set up the Kubernetes provider. This kind of inter-provider dependency is not clearly defined in Terraform, but it turns out to work perfectly.

Here are snippets from the code used to do all this.

Google Cloud provider setup and the Kubernetes cluster resource definition:

```hcl
provider "google" {
  region      = "europe-west3"
  credentials = "itsasecret"
  project     = "adamsproject"
}

resource "google_container_cluster" "mycluster" {
  project            = "adamsproject"
  name               = "mycluster"
  zone               = "europe-west3-a"
  initial_node_count = 1

  # Pin the master and the nodes to the same Kubernetes version
  node_version       = "${var.node_version}"
  min_master_version = "${var.node_version}"

  node_config {
    machine_type = "g1-small"
    disk_size_gb = 50
  }
}
```

The Kubernetes provider is configured using outputs from the google_container_cluster resource, namely the cluster CA certificate, the client certificate and the client key. These are exported base64-encoded, so we have to decode them first.

```hcl
provider "kubernetes" {
  host = "${google_container_cluster.mycluster.endpoint}"

  # master_auth values are base64-encoded; the provider expects PEM
  client_certificate     = "${base64decode(google_container_cluster.mycluster.master_auth.0.client_certificate)}"
  client_key             = "${base64decode(google_container_cluster.mycluster.master_auth.0.client_key)}"
  cluster_ca_certificate = "${base64decode(google_container_cluster.mycluster.master_auth.0.cluster_ca_certificate)}"
}
```

Once the Kubernetes provider can access our new cluster, this resource definition will create a Secret with our database credentials:

```hcl
resource "kubernetes_secret" "cloudsql-db-credentials" {
  metadata {
    name = "cloudsql-db-credentials"
  }

  # data is a map attribute; the provider handles the base64 encoding
  data = {
    username = "${var.db_user}"
    password = "${var.db_password}"
  }
}
```
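The Secret above reads `var.db_user` and `var.db_password`, which are not declared in the snippets shown. A minimal sketch of the declarations could look like the following; the descriptions are my own wording, and how the values are supplied (a variables file, environment variables and so on) is an assumption left to your setup.

```hcl
# Assumed declarations for the variables used by the Secret.
# No defaults are set, so Terraform asks for the values
# unless they are supplied some other way.
variable "db_user" {
  description = "Cloud SQL user name for the application"
}

variable "db_password" {
  description = "Cloud SQL password; keep the value out of version control"
}
```

Keep in mind that values passed through Terraform this way also end up in the Terraform state, so the state itself needs to be stored somewhere safe.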

We can also create some ConfigMaps to hold other, less sensitive information. Here I'm creating a database connection string to be used by Google CloudSQL Proxy:

```hcl
resource "kubernetes_config_map" "dbconfig" {
  metadata {
    name = "dbconfig"
  }

  data = {
    # Instance connection string in the form project:region:instance
    dbconnection = "${var.project_id}:${var.region}:${google_sql_database_instance.master.name}"
  }
}
```
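The same pattern covers the other values mentioned at the start, the cluster name and the static IP address. This is only a sketch: the `google_compute_address` resource named `client` and the ConfigMap named `clusterinfo` are hypothetical names I made up for illustration, not part of the original configuration.

```hcl
# Hypothetical static address; the resource and its name are assumptions.
resource "google_compute_address" "client" {
  name = "client-ip"
}

# Hypothetical ConfigMap exposing values only Terraform knows about.
resource "kubernetes_config_map" "clusterinfo" {
  metadata {
    name = "clusterinfo"
  }

  data = {
    # Specified in Terraform on the cluster resource
    cluster_name = "${google_container_cluster.mycluster.name}"

    # Assigned by GCP when the address is created
    client_ip = "${google_compute_address.client.address}"
  }
}
```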

I'm not going into the details of how to get this configuration all the way to your application components as that is straightforward once you have ConfigMaps or Secrets created. Take a look at the Kubernetes documentation if you are not familiar with working with ConfigMaps or Secrets.
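For orientation only, here is a rough sketch of what the consuming side could look like, written as a Terraform `kubernetes_pod` resource so that it stays in the same language as the rest of the post. In practice you would more likely reference the ConfigMap and Secret from your usual Deployment manifests. The Pod name, the image and the environment variable names are all hypothetical.

```hcl
# Hypothetical Pod that reads the ConfigMap and Secret created above.
resource "kubernetes_pod" "myapp" {
  metadata {
    name = "myapp"
  }

  spec {
    container {
      name  = "myapp"
      image = "example/myapp:1.0" # hypothetical image

      # Connection string from the dbconfig ConfigMap
      env {
        name = "DB_CONNECTION"

        value_from {
          config_map_key_ref {
            name = "${kubernetes_config_map.dbconfig.metadata.0.name}"
            key  = "dbconnection"
          }
        }
      }

      # Password from the cloudsql-db-credentials Secret
      env {
        name = "DB_PASSWORD"

        value_from {
          secret_key_ref {
            name = "${kubernetes_secret.cloudsql-db-credentials.metadata.0.name}"
            key  = "password"
          }
        }
      }
    }
  }
}
```

Referencing the object names through the Terraform resources, rather than repeating the strings, also makes Terraform create the ConfigMap and Secret before the Pod.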

Summary

Terraform will inevitably become your primary source of truth for many variables in your environment. It will also know about values that are set by the cloud provider during resource creation, like a dynamically assigned IP address. Kubernetes Secrets and ConfigMaps are an elegant way to pass these values to the application components that need them, and the Kubernetes provider in Terraform is a convenient way to create these objects.
