Not so long ago, it was unusual for most employees to work remotely, especially full time. Then, in March 2020, came the global pandemic. Suddenly, kitchen tables, living rooms, and bedrooms were being repurposed as office space.

Slite, a remote-first company that helps distributed teams share ideas, pool their knowledge, and stay in tune across time and space, offers a cloud-based solution to store and connect docs and to build a knowledge base that is fun and engaging to use. The overnight boom in work-at-home posed a huge business opportunity for Slite. But it also brought an immediate challenge: How to scale up, fast, to meet a tsunami of consumer demand.

The solution had to be sustainable, too: all signs indicate that the wave of interest in remote work will not recede with the virus threat. More than 80% of employers in a survey by Gartner released in July say they plan to continue to allow remote work at least part of the time—and 42% say they plan to accommodate full-time remote work.

This case study explains how Slite worked with Container Solutions and Google Cloud to prepare itself for exponential growth.

Making the case for GitOps

Together with Container Solutions, Slite found the key to scaling up its delivery capabilities in a set of practices known as GitOps. It’s an entirely new kind of operational architecture that has found wide adoption among tech companies, especially in the Cloud Native space.

The core idea behind GitOps is to have the infrastructure setup of your application saved to a version-control system like Git in a way that lets it be deployed easily and automatically to any given environment.

By using Git for your deployments, you will have a history of changes with a message for each of them, and most Git providers like GitHub already have built-in user and access management. This will take care of some security issues and make it easy for non-engineers to audit your deployment history.

In practice, most organisations will use GitOps with Dockerised applications that are supposed to run on a Kubernetes cluster with deployment manifests (Kustomize or Helm). Still, in theory, this could work with any technology that allows for programmable infrastructure.

The Google Cloud Platform (GCP) offers various Cloud Native solutions, like Google Kubernetes Engine (GKE), user and role management, and many more tools to make it quick and easy to get started fully Cloud Native.

Situation summary

When Slite brought the Container Solutions team on, it had six engineers actively involved in developing the platform just as remote work during the pandemic was fueling a huge surge in demand. It needed to scale infrastructure effectively and to impress new customers with increased business value and powerful new features, fast.

The company’s product consisted of about 15 microservices, more or less independent from one another, running on two Kubernetes clusters, for staging and production. Slite had chosen Kubernetes clusters for their ease of use and availability. And the company chose GKE for user and role management and the simple integration of its deployment scripts because, as one Slite engineer said, it didn’t “get in the way.” With the infrastructure taken care of, the engineers could focus on increasing business value.

Additionally, Slite had a separate GCP project with two Kubernetes clusters running development environments for its engineers to experiment and work on new features.

At the start of our engagement, Slite had some automation in its deployment processes. It used GitHub Actions scripts to build a Docker image and store it in the Google Container Registry. To deploy the new image, another GitHub Actions script had to be started manually, so that someone could first confirm the image had been successfully built and published. The deployment itself was handled via Helm, making it easy to share global configurations and roll back to an older version. The team managed to deploy about four times a day, which was not enough to keep up with the new surge in demand for its services.

Slite uses different services for data storage, such as SQL, BigQuery, and Cloud Storage, depending on the use case.

Embracing GitOps

Since Slite had already split up its product into microservices, it was in a good place to leverage the advantages of having a fully automated, independent delivery process. It was already using GitHub Actions to build and deploy, so it felt natural to use the existing tech stack instead of extending it with more tooling, which would have required more time and effort.

Additionally, using Git, which was already housing all the source code, as the starting point allowed the team to standardise the release process through all services, to track changes through all environments, and to see at a glance which version and configuration were running on production at the time.

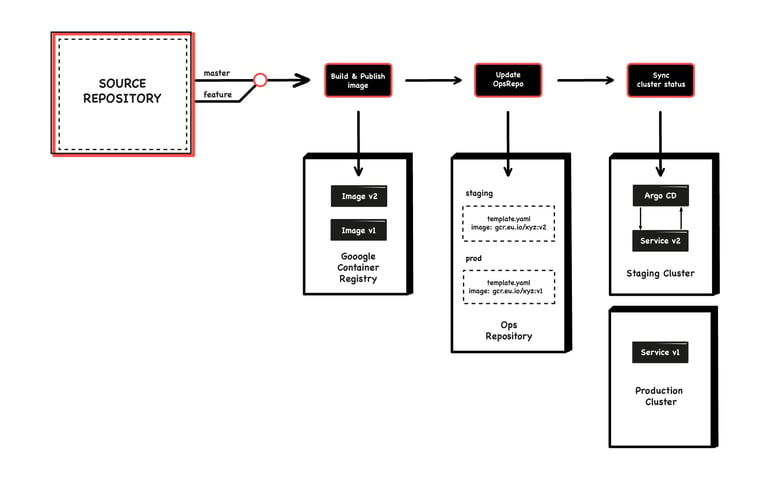

And finally, this setup could be easily replicated as a template for new projects and services when the company grows or when teams specialise. (The following diagram shows what the whole thing looks like.)

Google Cloud solutions

In the new GitOps workflow, the deployment process would be triggered by a change on the master branch to the source repository, usually through a merged pull request. That would then trigger the existing GitHub Actions to build a Docker image from the source code and publish it to the Google Container Registry.
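To make the build step more concrete, here is a minimal Python sketch of how a pipeline might derive a unique image reference from the commit that triggered it. The project ID, the service name, and the choice of a 12-character short SHA as the tag are illustrative assumptions, not details of Slite's actual setup; `gcr.io` is used because it is a Container Registry hostname.

```python
import re

# Accept abbreviated or full hexadecimal commit hashes (7 to 40 characters).
_SHA_RE = re.compile(r"^[0-9a-f]{7,40}$")


def image_ref(project: str, service: str, commit_sha: str,
              registry: str = "gcr.io") -> str:
    """Build an image reference tagged with a short commit SHA.

    All argument values are hypothetical; tagging by commit is one common
    convention, assumed here for illustration.
    """
    sha = commit_sha.strip().lower()
    if not _SHA_RE.match(sha):
        raise ValueError(f"not a valid commit SHA: {commit_sha!r}")
    # Truncate to 12 characters so the tag stays readable but still unique
    # enough to trace a running image back to a commit.
    return f"{registry}/{project}/{service}:{sha[:12]}"
```

Tagging each image with the commit it was built from means every deployed version can be traced back to a specific change on the master branch.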

With that done, the repository that houses the Helm charts would be updated. Changes to the source code would usually involve modifying the image tag and/or version number. For changes to the service's configuration, the Helm chart itself might need to be altered. In any case, these modifications would be easy to audit by checking the diff.
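The update to the Helm chart repository can be pictured as a small text change followed by a diff. The Python sketch below assumes a hypothetical values file with a single `tag:` line under an `image:` block; the file layout and the function names are assumptions for illustration, not Slite's actual charts or scripts.

```python
import difflib
import re

# Match the first "tag:" line, keeping its indentation and key intact.
_TAG_LINE = re.compile(r"^([ \t]*tag:[ \t]*).*$", re.MULTILINE)


def set_image_tag(values_text: str, new_tag: str) -> str:
    """Return the values text with the first image tag replaced."""
    updated, count = _TAG_LINE.subn(
        lambda m: f'{m.group(1)}"{new_tag}"', values_text, count=1
    )
    if count == 0:
        raise ValueError("no 'tag:' line found in values text")
    return updated


def change_summary(old: str, new: str, path: str = "values.yaml") -> str:
    """Render the change as a unified diff, as a reviewer would see it."""
    diff = difflib.unified_diff(
        old.splitlines(), new.splitlines(),
        fromfile=f"a/{path}", tofile=f"b/{path}", lineterm="",
    )
    return "\n".join(diff)
```

Calling `set_image_tag` on the current values text and passing both versions to `change_summary` yields a one-line removal and a one-line addition, which is the kind of small, reviewable change that makes a deployment easy to audit.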

On the GKE cluster itself, ArgoCD manages the lifecycle of Slite’s services. ArgoCD is the main tool facilitating GitOps in this setup, and it can be installed with just a Kubernetes manifest or with its own operator. For Slite, the operator was not necessary, as the installation on GKE was simple and straightforward.

ArgoCD takes a configuration source such as a Helm chart or a Kustomize overlay and applies it to a Kubernetes cluster. If there is an issue during deployment, ArgoCD will keep trying to sync the current cluster state to the desired state, as defined in the ops repository.
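The idea of syncing the current state to the desired state can be illustrated with a toy model. The Python sketch below is not how ArgoCD is implemented; it reduces each state to a hypothetical mapping of service name to image tag and shows the comparison that any reconciling tool has to make. Deletion is included for completeness; whether a real tool removes resources that are no longer declared depends on how it is configured.

```python
from typing import Dict, List, Tuple

State = Dict[str, str]    # service name -> image tag (simplified model)
Action = Tuple[str, str]  # (verb, service name)


def plan(desired: State, live: State) -> List[Action]:
    """List the actions needed to move the live state to the desired state."""
    actions: List[Action] = []
    for name in sorted(desired):
        if name not in live:
            actions.append(("create", name))
        elif live[name] != desired[name]:
            actions.append(("update", name))
    for name in sorted(live):
        if name not in desired:
            actions.append(("delete", name))
    return actions


def reconcile(desired: State, live: State) -> State:
    """Apply the planned actions to a copy of the live state."""
    result = dict(live)
    for verb, name in plan(desired, live):
        if verb == "delete":
            del result[name]
        else:
            result[name] = desired[name]
    return result
```

Once `reconcile` has run, calling `plan` again with the same desired state returns an empty list, which is the converged condition a GitOps tool keeps working toward.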

Additionally, ArgoCD brings an intuitive graphical user interface (GUI) that can be accessed through the browser to see and manage the current state of the cluster and the resources managed by ArgoCD. To ensure only people with the correct permissions can see the cluster states, Slite has integrated the GCP Identity-Aware Proxy to secure access to ArgoCD.

With the changes in place, Slite achieved a clean split between the image build and deployment processes. ArgoCD and especially its GUI have also lightened the cognitive load for engineers to monitor their applications' deployment status, allowing more focus on creating business value. A side benefit is that engineers are more confident about owning their services all the way to production, as they can now spot, mitigate, and fix problems in production quickly and independently.

Container Solutions’s role

Container Solutions helped Slite automate many routine tasks and equipped the growing company with the know-how, best practices, and hands-on training to establish GitOps practices. It worked hand-in-hand with Slite’s engineering team to find the optimal way to implement the GitOps workflow. The underlying Google Cloud technology solutions were fully implemented in just over six weeks, while Slite was hiring more engineers.

Results

The changes have helped Slite further accelerate deployment without putting more infrastructure workload on the engineering team. Based on this success, Slite is committed to the GitOps pattern and further improving its tooling.

“Container Solutions’s expertise got us up and running on GitOps. It eased team scaling and enabled much more frequent releases to our customers,” said Arnaud Rinquin, a senior product engineer at Slite.

The team is now so confident about the deployment process that they have fully automated delivery from the source code to pre-production, which allows them to deploy up to 20 times per day. Simultaneously, Slite has expanded its engineering team from six to 16 without losing momentum, thanks to adopting easy-to-use, transparent, and scalable CI/CD. A bug fix can now be in production as soon as 15 minutes after the bug was spotted.

Adopting GitOps for Google Cloud keeps Slite on the growth path and enables it to offer a SaaS product that amazes its growing user base.
