Working in Cloud Native consulting, I’m often asked about who should do various bits of ‘the platform work’.

I’m asked this in various forms and at various levels, but the title’s question (Who should write the Terraform?) is a fairly typical one. Consultants are often asked simple questions that invite simple answers, but it’s our job to frustrate our clients, so I invariably say “it depends”.

The reason it depends is that the answers to these seemingly simple questions are very context-dependent. Even if there is an ‘ideal’ answer, the world is not ideal, and the best thing for a client at that time might not be the best thing for the industry in general.

So here, I attempt to lay out the factors that help me answer those questions as honestly as possible. But before that, we need to lay out some background. Here’s an overview of the flow of the piece:

- What is a platform?

- How we got here

  - Coders and Sysadmins became…

  - Dev and Ops, but silos and slow time to market, so…

  - DevOps, but not practical, so…

  - SRE and Platforms

- The factors that matter

  - Non-negotiable standards

  - Developer capability

  - Management capability

  - Platform capability

  - Time to market

What is a Platform?

Those old enough to remember when the word ‘middleware’ was everywhere will know that many industry terms are so vague or generic as to be meaningless. However, for ‘platform’ work we have a handy definition, courtesy of Team Topologies:

The purpose of a platform team is to enable stream-aligned teams to deliver work with substantial autonomy.

The stream-aligned team maintains full ownership of building, running, and fixing their application in production.

The platform team provides internal services to reduce the cognitive load that would be required from stream-aligned teams to develop these underlying services.

Team Topologies, Matthew Skelton and Manuel Pais

A platform team, therefore (and putting it crudely), builds the stuff that lets others build and run their stuff.

So… is the Terraform written centrally, or by the stream-aligned teams?

To explain how I would answer that, I’m going to have to do a little history.

How We Got Here

Coders and Sysadmins

In simpler times–after the Unix epoch and before the dotcom boom–there were coders and there were sysadmins. These two groups speciated from the generic ‘computer person’ that companies found they had to have on the payroll (whether they liked it or not) in the 1970s and 80s.

As a rule, the coders liked to code and make computers do new stuff, and the sysadmins liked to make sure said computers worked smoothly. Coders would eagerly explain that with some easily acquired new kit, they could revolutionise things for the business, while sysadmins would roll their eyes and ask how this would affect user management, or interoperability, or stability, or account management, or some other boring subject no-one wanted to hear about anymore.

I mention this because this pattern has not changed. Not one bit. Let’s move on.

Dev and Ops

Time passed, and the Internet took over the world. Now, we had businesses running websites as well as their internal machines and internal networks. Those websites were initially given to the sysadmins to run. Over time, these websites became more and more important for the bottom line, so eventually, the sysadmins either remained sysadmins and looked after ‘IT’, or became ‘operations’ (Ops) staff and looked after the public-facing software systems.

Capable sysadmins had always liked writing scripts to automate manual tasks (hence the t-shirt below), and this tendency continued (sometimes) in Ops, with automation becoming the defining characteristic of modern Ops.

Eventually, a rich infrastructure emerged around the work. ‘Pipelines’ started to replace ‘release scripts’, and concepts like ‘continuous integration’ and ‘package management’ arose. But we’re jumping ahead a bit; this came in the DevOps era.

Coders, meanwhile, spent less and less time doing clever things with chip registers and more and more time wrangling different software systems and APIs to do their business’s bidding. They stopped being called ‘coders’ and started being called ‘developers’.

So ‘Devs’ dev’d, and ‘Ops’ ops’d.

These groups grew in size and proportion of the payroll as software started to ‘eat the world’.

In reality, of course, there was a lot of overlap between the two groups, and people would often move from one side of the fence to the other. But the distinction remained, and became organisational orthodoxy.

Dev and Ops Inefficiencies

As the Dev and Ops pattern became bedded into organisations, people noted some inefficiencies with this state of affairs:

- Release overhead

- Misplaced expertise

- Cost

First, there was a release overhead as Dev teams passed changes to Ops. Ops teams typically required instructions for how to do releases, and in a pre-automation age these were often prone to error without app- or even release-specific knowledge. About 15 years ago, I was present at a very fractious argument between a software supplier and its client’s Ops team after an outage. The Ops team had attempted to follow the release instructions, but did not follow them correctly, and an outage resulted. There was much swearing as the Ops team remonstrated that the instructions were not clear enough, while the Devs argued that if the instructions had been followed properly, then it would have worked. Fun.

Second, Ops teams didn’t know in detail what they were releasing, so couldn’t fix things if they went wrong. The best they could do was restart things and hope they worked.

Third, Ops teams looked expensive to management. They didn’t deliver ‘new value’, just farmed existing value, and appeared slow to respond and risk-averse.

I mention this because this pattern has not changed. Not one bit. Let’s move on.

These and other inefficiencies were characterised as ‘silos’–unhelpful and wasteful separations of teams for (apparently) no good purpose. Frictions increased as these mismatches were exacerbated by embedded organisational separation.

The solution was clearly to get rid of the separation: no more silos!

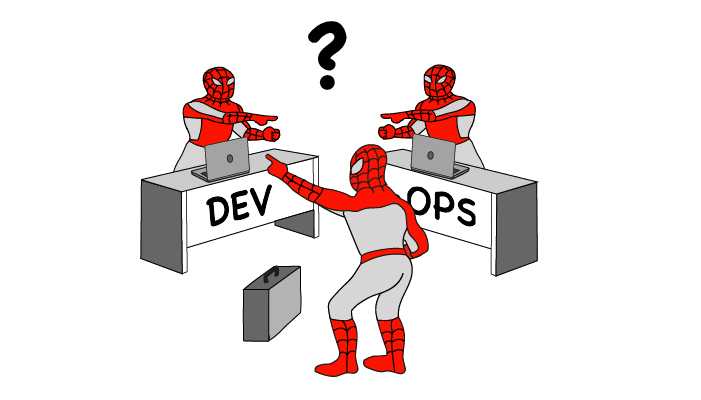

Enter DevOps

The ‘no more silos’ battle cry got a catchy name–DevOps. The phrase was usefully vague and argued over for years, just as Agile was and is (see here). DevOps is defined by Wikipedia as ‘a set of practices that combines software development (Dev) and IT operations (Ops)’.

At the purest extreme, DevOps is the movement of all infrastructure and operational work and responsibilities (ie ‘delivery dependencies’) into the development team.

This sounded great in theory. It would:

- Place the operational knowledge within the development team, where its members could more efficiently collaborate in tighter iterations.

- Deliver faster–no more waiting weeks for the Ops team to schedule a release, or waiting for Ops to provide some key functionality to the development team.

- Bring the costs of operations closer to the value (more exactly: the development team bore the cost of infrastructure and operations as part of the value stream), making P&L decisions closer to the ‘truth’.

DevOps Didn’t

But despite a lot of effort, the vast majority of organisations that tried couldn’t make this ideal work in practice. The reasons were systemic, and included:

- Absent an existential threat, the necessary organisational changes were more difficult to make. This constraint limited the willingness or capability to make any of the other necessary changes.

- The organisational roots of the Ops team were too deep. You couldn’t uproot the metaphorical tree of Ops without disrupting the business in all sorts of ways.

- There were regulatory reasons to centralise Ops work which made distribution very costly.

- The development team didn’t want to–or couldn’t–do the Ops work.

- It was more expensive. Because some work would necessarily be duplicated, you couldn’t simply distribute the existing Ops team members across the development teams. You’d have to hire more staff, increasing cost.

I said ‘the vast majority’ of organisations couldn’t move to DevOps, but there are exceptions. The exceptions I’ve seen in the wild implemented a purer form of DevOps when there existed:

- Strong engineering cultures where teams full of T-shaped engineers want to take control of all aspects of delivery

- No requirement for centralised control (eg regulatory/security constraints)

and/or

- A gradual (perhaps guided) evolution over time toward the breaking up of services and distribution of responsibility

and/or

- Strong management support and drive to enable

The most famous example of the ‘strong management support’ is Amazon, where so-called ‘two-pizza teams’ must deliver and support their products independently (I’ve never worked for Amazon, so I have no direct experience of the reality of this). This, notably, was the product of a management edict to ensure teams operated independently.

When I think of this DevOps ideal, I think of a company with multiple teams each independently maintaining their own discrete marketing websites in the cloud. Not many businesses have that kind of context and topology.

Enter SRE and Platforms

One of the reasons listed above for the failure of DevOps was the critical one: expense.

Centralisation, for all its bureaucratic and slow-moving faults, can result in vastly cheaper and more scalable delivery across the business. Any dollar spent at the centre can save n dollars across your teams, where n is the number of teams consuming the platform.
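To make that arithmetic concrete, here is a minimal sketch that compares building one shared capability in every team with building it once at the centre. The figures, the function names, and the per-team `adoption_cost` are all assumptions made up for illustration, not numbers from any real organisation.

```python
# Hypothetical figures throughout: nothing here comes from a real organisation.

def distributed_cost(build_cost: int, teams: int) -> int:
    """Total spend when every team builds the same capability for itself."""
    return build_cost * teams


def centralised_cost(build_cost: int, teams: int, adoption_cost: int = 0) -> int:
    """Total spend when the capability is built once at the centre.

    adoption_cost is an assumed per-team cost of consuming the central version.
    """
    return build_cost + adoption_cost * teams


teams = 20            # assumed number of teams consuming the platform
build_cost = 50_000   # assumed cost of building the capability once

spread = distributed_cost(build_cost, teams)
central = centralised_cost(build_cost, teams, adoption_cost=5_000)

print(f"distributed: {spread}")
print(f"centralised: {central}")
print(f"saved by centralising: {spread - central}")
```

The saving grows with the number of teams, but so does the total adoption cost. If the central offering is hard to consume, that second term can eat much of the benefit.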

The most notable example of this approach is Google, who have a few workloads to run, and built their own platform to run them on. Kubernetes is a descendant of that internal platform.

It’s no coincidence that Google came up with DevOps’s fraternal concept: SRE. SRE emphasised the importance of getting Dev skills into Ops rather than making Dev and Ops a single organisational unit. This worked well at Google, primarily because there was an engineering culture at the centre of the business, and an ability to understand the value of investing in the centre rather than chasing features. Banks (who might well benefit from a similar way of thinking) are dreadful at managing and investing in centralised platforms, because they are not fundamentally tech companies (they are defenders of banking monopoly licences, but that’s a post for another day, also see here).

So across the industry, those that might have been branded sysadmins first rebranded themselves as Ops, then as DevOps, and finally as SREs. Meanwhile, they’re mostly the same people doing similar work.

Why the History Lesson?

What’s the point of this long historical digression?

Well, it’s to explain that, with a few exceptions, the division between Dev and Ops, and between centralisation and distribution of responsibility has never been resolved. And the reasons the industry seems to see-saw are the same reasons that the answer to the original question is never simple.

Right now, thanks to the SRE movement (and Kubernetes, which is a trojan horse leading you away from cloud lock-in), there is a fashion-swing back to centralisation. But that might change again in a few years.

And it’s in this historical milieu that I get asked questions about who should be responsible for what, with respect to work that could be centralised.

The Factors

Here are the factors that play into the advice I might give in answer to these questions, in rough order of importance.

Factor One: Non-Negotiable Standards

If you have standards or practices that must be enforced on teams for legal, regulatory, or business reasons, then at least some work needs to be done at the centre.

Examples of this include:

- Demonstrable separation of duties between Dev and Ops

- User management and role-based access controls

Performing an audit on one team is obviously significantly cheaper than auditing a hundred teams. Further, with an audit, the majority of expense is not in the audit but the follow-on rework. The cost of that can be reduced significantly if a team is experienced at knowing from the start what’s required to get through an audit. For these reasons, the cost of an audit across your 100 dev teams can be more than 100x the cost of a single audit at the centre.
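A rough sketch of why the multiplier can exceed 100 follows. It assumes each audit has a fixed fee plus follow-on rework, and that an audit-experienced central team needs less rework than a typical dev team. Every number and name below is hypothetical.

```python
# Hypothetical costs: the fee and rework figures are illustrative assumptions.

def audit_cost(audit_fee: int, rework_cost: int, teams: int = 1) -> int:
    """Total cost of auditing `teams` teams, each paying the fee plus rework."""
    return teams * (audit_fee + rework_cost)


# One experienced central team: assumed to need relatively little rework.
central_audit = audit_cost(audit_fee=10_000, rework_cost=20_000)

# 100 dev teams: same fee each, but assumed to need more rework per team.
distributed_audit = audit_cost(audit_fee=10_000, rework_cost=60_000, teams=100)

ratio = distributed_audit / central_audit
print(f"central: {central_audit}")
print(f"distributed: {distributed_audit}")
print(f"ratio: {ratio:.0f}x")
```

With these made-up numbers the distributed figure comes out at well over 100 times the central one, and the gap is driven entirely by the difference in rework per team.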

Factor Two: Engineer Capability

Development teams vary significantly in their willingness to take on work and responsibilities outside their existing domain of expertise. This can have a significant effect on who does what.

Anecdote: I once worked for a business that had a centralised DBA team that managed databases for thousands of teams. There were endless complaints about the time taken to get ‘central IT’ to do their bidding, and frequent demands for more autonomy and freedom.

A cloud project was initiated by the centralised DBA team to enable that autonomy. It was explained that because teams could now provision their own database instances in response to their demands, they would no longer have a central DBA team to call on.

Cue howls of despair from the development teams that they needed a centralised DBA service, as they didn’t want to take this responsibility on and didn’t have the skills.

Another example is embedded in the title question about Terraform. Development teams often don’t want to learn the skills needed for a change of delivery approach. They just want to carry on writing in whatever language they were hired to write in.

This is where organisational structures like ‘cloud native centres of excellence’ (who just ‘advise’ on how to use new technologies), or ‘federated devops teams’ (where engineers are seconded to teams to spread knowledge and experience) come from. The idea with these ‘enabling teams’ is that once their job is done they are disbanded. Anyone who knows anything about political or organisational history knows that these plans to self-destruct often don’t pan out that way, and you’re either stuck with them forever, or some put-upon central team gets given responsibility for the code in perpetuity.

Factor Three: Management Capability

While the economic benefits of having a centralised team doing shared work may seem intuitively obvious, senior management in various businesses are often not able to understand its value, and manage it as a pure cost centre.

This is arguably a consequence of internal accounting assumptions. Put simply, the value gained from centralised work is not traced back to profit calculations, so is seen as pure cost. (I wrote a little about non-obvious business value here.)

In companies with competent technical management, centralised work is (implicitly, due to an understanding of the actual work involved) seen as valuable. This is why tech firms such as Google can successfully manage a large-scale platform, and why Google gave birth to SRE and Kubernetes, two icons of tech org centralisation. It’s interesting that Amazon–with its roots in retail, distribution, and logistics–takes a radically different distributed approach.

If your organisation is not capable of managing centralised platform work, then it may well be more effective to distribute the work across the feature teams, so that cost and value can be more easily measured and compared.

Factor Four: Platform Team Capability

Here we are back to the old-fashioned silo problem. One of the most common complaints about centralised teams is that they fail to deliver what teams actually need, or do so in a way that they can’t easily consume.

Often this is because of the ‘non-negotiable standards’ factor above resulting in security controls that stifle innovation. But it can also be because the platform team is not interested, incentivised, or capable enough to deliver what the teams need. In these latter cases, it can be very inefficient or even harmful to get them to do some of the platform work.

This factor can be mitigated with good management. I’ve seen great benefits from moving people around the business so they can see the constraints other people work under (a common principle in the DevOps movement) rather than just complain about their work. However, as we’ve seen, poor management is often already a problem, so this can be a non-starter.

Factor Five: Time to Market

Another significant factor is whether it’s important to keep the time to delivery low. Retail banks don’t care about time to delivery. They may say they do, but the reality is that they care far more about not risking their banking licence and not causing outages that attract the interest of regulators. Elsewhere in the financial sector, hedge funds, by contrast, might care very much about time to market, as they are far less heavily regulated and wish to take advantage of any edge they might have as quickly as possible. Retail banks tend towards centralised organisational architectures, while hedge funds devolve responsibility as close to the feature teams as possible.

So, Who Should Write the Terraform?

The original question of ‘who should write the Terraform?’ can now be more easily answered, or at least approached. Depending on the factors discussed above, it might make sense for that work to be either centralised or distributed.

More importantly, by not simply assuming that there is a ‘right’ answer, you can make decisions about where the work goes with your eyes open about what the risks, trade-offs, and systemic preferences of your business are.

Whichever way you go, make sure that you establish which entity will be responsible for maintaining the resulting code as well as producing it. Code, it is important to remember, is an asset that needs maintenance to remain useful, and if this is ignored there could be great confusion in the future.
