When managing Kubernetes clusters, cluster administrators need to ensure the overall stability of the system. To accomplish this, they must avoid disruptions to the control plane and remove any opportunity for users to escalate their privileges, which could cause further problems. With this in mind, protecting the kube-system namespace and enforcing pod security policies, so that workloads run with only the access they need, is a must.

To accomplish our objective we first looked at the Kubernetes-native way to do it, RBAC, but found that it does not cover some use cases. Ultimately we decided to use Gatekeeper because it lets us add “deny” rules for authorisation and also enforce pod security policies for workloads in the cluster. Gatekeeper really shines at enforcing best practices, making it a natural fit for what we wanted to achieve.

This post explains why Gatekeeper is a good option to work alongside RBAC, using validating admission webhooks to improve the Kubernetes authorisation process. We also show a couple of examples of how to protect the kube-system namespace and how to enforce some pod security policies.

The problem

When providing Kubernetes clusters, it is important to ensure stability. Often you want to grant users enough permissions to have freedom, but you also want to protect clusters from unexpected changes and outages. To achieve that, unnecessary interactions with the kube-system namespace should be blocked in order to protect that namespace from both user error and malicious actors.

It is also necessary to enforce pod security policies for the workloads running in the cluster.

Kubernetes provides RBAC to control access to clusters through Roles and ClusterRoles. The problem with that approach is that RBAC is purely additive: permissions can only be granted, never taken away by another rule, so cluster administrators need to define a detailed list of everything an end user is allowed to do. In our case we want to grant access to the whole cluster except the kube-system namespace. This isn't possible with RBAC because it has no “deny” option. The closest approximation is to grant access namespace by namespace, and that has to be repeated every time a new namespace is created.
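To make the "purely additive" point concrete, here is a minimal Python model of RBAC evaluation. It is a deliberate simplification: real RBAC also matches API groups, resource names and subresources, and the `Rule` type and function names are hypothetical. A request is allowed if any grant matches, and nothing can subtract a permission. As a result, "everything except kube-system" can only be expressed by enumerating every other namespace.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """A simplified, hypothetical RBAC grant; "*" matches anything."""

    namespace: str
    verb: str
    resource: str


def _matches(pattern: str, value: str) -> bool:
    return pattern == "*" or pattern == value


def rbac_allows(rules: list[Rule], namespace: str, verb: str, resource: str) -> bool:
    # RBAC is a union of grants: the request is allowed if ANY rule matches.
    # No rule can take away a permission that another rule granted.
    return any(
        _matches(r.namespace, namespace)
        and _matches(r.verb, verb)
        and _matches(r.resource, resource)
        for r in rules
    )


def grants_for_all_namespaces_except(excluded: str, namespaces: list[str]) -> list[Rule]:
    # Without a "deny" rule, "everything except kube-system" must be spelled out
    # namespace by namespace, and regenerated whenever a namespace is added.
    return [Rule(ns, "*", "*") for ns in namespaces if ns != excluded]


cluster_admin = [Rule("*", "*", "*")]
scoped = grants_for_all_namespaces_except("kube-system", ["default", "team-a", "kube-system"])

print(rbac_allows(cluster_admin, "kube-system", "delete", "pods"))  # True
print(rbac_allows(scoped, "kube-system", "delete", "pods"))  # False
print(rbac_allows(scoped, "team-b", "create", "pods"))  # False: namespace created later
```

The last call shows the maintenance cost: a namespace created after the grants were generated is silently unusable until someone adds another grant.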

Kubernetes used to provide Pod Security Policies to help enforce security best practices for pods in the cluster, but they were deprecated in v1.21. Fortunately there is Open Policy Agent (OPA), an open source, general-purpose policy engine with a Kubernetes-specific implementation called Gatekeeper, which works well as a replacement for Pod Security Policies.

Gatekeeper provides a library with common policies. The library helped us to avoid reinventing the wheel constantly, but if you have very specific requirements it is also possible to create custom policies.

OPA/Gatekeeper is a good option to overcome the access limitations inherent in RBAC. It can express access rules in a more configurable way, including denials, and it can enforce many kinds of pod security policies to prevent privilege escalation.

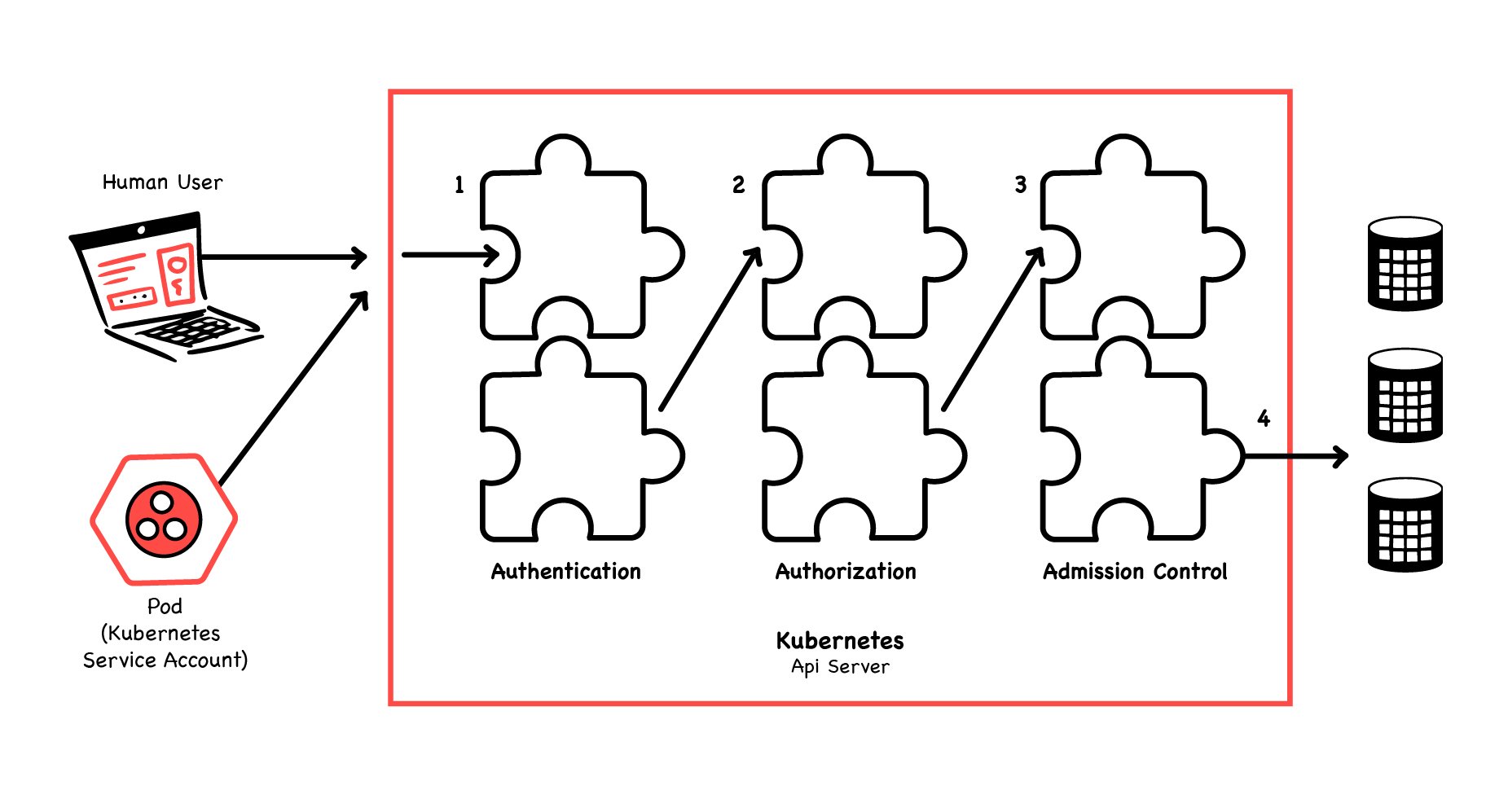

Authentication and authorisation

Whilst it is an important part of cluster administration, authentication is outside of the scope of this post. We assume that every request is authenticated from now on.

Providing access to users for different resources in the cluster is one of the main concerns for cluster administrators. In this case, users should have full access to the cluster and just be excluded from using the kube-system namespace. This is usually a complex task in practice because cluster administrators need to keep track of permissions for each user or group of users.

Using RBAC, users can be given cluster-admin access, which gives them enough flexibility to use the cluster. The problem is that this also grants access to the kube-system namespace, meaning they could break the cluster.

Protect the control plane

To avoid unnecessary disruptions to the control plane, access to the kube-system namespace should be blocked. Only users that are part of the system:* groups, or users that a cloud provider uses to manage control plane resources, should have access to the kube-system namespace and its resources.
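A minimal Python sketch of such an allow-list follows. It assumes the decision is based on the `userInfo` block of the admission request (`username` and `groups`). The group allow-list and the cloud-provider account name are hypothetical placeholders; replace them with the identities your control plane actually uses.

Matching on the `system:` prefix alone is broad. Every authenticated user belongs to the `system:authenticated` group, and every service account's username starts with `system:serviceaccount:`, including accounts in user namespaces. For that reason this sketch uses explicit allow-lists, whereas the Rego template later in the post checks only the username prefix.

```python
# Hypothetical allow-lists: adjust to the identities your control plane uses.
ALLOWED_GROUPS = {"system:masters", "system:nodes"}
CLOUD_PROVIDER_USERS = {"example-cloud:control-plane-operator"}  # hypothetical name


def may_write_kube_system(user_info: dict) -> bool:
    """Decide whether a requester may modify objects in kube-system.

    `user_info` mirrors AdmissionRequest.userInfo: {"username": ..., "groups": [...]}.
    """
    username = user_info.get("username", "")
    groups = set(user_info.get("groups") or [])
    # Components such as system:kube-controller-manager and system:kube-scheduler,
    # plus service accounts that live in kube-system itself.
    if username.startswith(("system:kube-", "system:serviceaccount:kube-system:")):
        return True
    # Do NOT match any "system:" group: system:authenticated contains everyone.
    if groups & ALLOWED_GROUPS:
        return True
    return username in CLOUD_PROVIDER_USERS
```

With this sketch, a service account such as `system:serviceaccount:team-a:builder` is rejected even though its name starts with `system:`.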

Restricting access to the kube-system namespace is a delicate task, because inadvertently blocking users that manage the control plane could cause unexpected failures in the cluster. If any of the control plane controllers loses access to that namespace, the restriction ends up breaking the cluster instead of protecting it.

Cluster users should be allowed to deploy anything on the Kubernetes cluster, but cluster administrators need to ensure they follow good practices by enforcing policies.

The solution — Validating Admission Webhooks

Admission controllers seem like a natural way to solve all the problems mentioned above, because authentication and authorisation have already been handled by the time a request reaches an admission webhook. When looking for a compatible implementation, the tool that stands out is, again, Gatekeeper.

Validating admission webhooks are part of the admission controllers implemented in Kubernetes. They intercept requests to the Kubernetes API server (kube-apiserver) and can allow or deny each request based on its content.

To have a working Validating Admission Webhook it is necessary to also have an Admission webhook server. In this case, the server is Gatekeeper, and Kubernetes is informed of it through the use of a ValidatingWebhookConfiguration object, which is created when installing Gatekeeper.
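To show what "allow or deny based on its content" means on the wire, here is a minimal Python sketch of the decision a webhook server such as Gatekeeper returns. It follows the `admission.k8s.io/v1` AdmissionReview shape: the response must echo `request.uid`, set `allowed`, and may carry a `status.message`. The HTTPS serving part is omitted. The example policy, rejecting anything in kube-system, is purely illustrative, and the function name is hypothetical.

```python
def handle_admission_review(admission_review: dict) -> dict:
    """Answer an admission.k8s.io/v1 AdmissionReview (decision logic only)."""
    request = admission_review["request"]
    namespace = request.get("namespace", "")
    # Illustrative policy: reject any write into kube-system.
    allowed = namespace != "kube-system"
    response = {"uid": request["uid"], "allowed": allowed}  # uid must be echoed back
    if not allowed:
        response["status"] = {"message": f"writes to namespace {namespace} are not allowed"}
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": response,
    }
```

The API server matches the response to its request through the echoed `uid`. The `message` is what the user eventually sees in the kubectl error.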

The ValidatingWebhookConfiguration object has a field called rules, which is used to define which requests should be validated with the webhook. These rules can match apiGroups, apiVersions, resources, or operations. Read more about ValidatingWebhookConfiguration to understand how to customise its behaviour.
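The matching logic of `rules` can be approximated as follows. This is a simplified Python sketch, not the API server's implementation. Each rule lists `operations`, `apiGroups`, `apiVersions` and `resources`, and `"*"` is a wildcard. A request is sent to the webhook if any rule matches all four fields. The sketch ignores the `scope` field and subresources, and the example rule is hypothetical.

```python
def _matches(values: list[str], value: str) -> bool:
    return "*" in values or value in values


def webhook_applies(rules: list[dict], request: dict) -> bool:
    """Approximate RuleWithOperations matching (ignores scope and subresources)."""
    return any(
        _matches(rule["operations"], request["operation"])
        and _matches(rule["apiGroups"], request["group"])
        and _matches(rule["apiVersions"], request["version"])
        and _matches(rule["resources"], request["resource"])
        for rule in rules
    )


# Hypothetical rule: all resources, but only CREATE and UPDATE operations.
rules = [{
    "operations": ["CREATE", "UPDATE"],
    "apiGroups": ["*"],
    "apiVersions": ["*"],
    "resources": ["*"],
}]

print(webhook_applies(rules, {"operation": "CREATE", "group": "", "version": "v1", "resource": "serviceaccounts"}))  # True
print(webhook_applies(rules, {"operation": "DELETE", "group": "", "version": "v1", "resource": "serviceaccounts"}))  # False
```

Note the consequence of the second call: an operation left out of the webhook's rules never reaches Gatekeeper, whatever the constraints say.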

To make sense of how validating webhooks interact with OPA, take a look at the architecture presented in this blog post.

Note that a request reaches the validating webhook only after it has been authenticated and authorised by RBAC.
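The ordering can be sketched as a chain of stages, where any stage can stop the request before the next one runs. This is a simplified Python model. The stage functions and the `granted` field standing in for RBAC bindings are hypothetical placeholders. Only the order reflects how the API server handles write requests: authentication, then authorisation, then admission, then persistence.

```python
from typing import Callable, Optional

# Each stage returns None to pass the request on, or a rejection reason.
Stage = Callable[[dict], Optional[str]]


def authenticate(req: dict) -> Optional[str]:
    return None if req.get("user") else "401 Unauthorized"


def authorize_rbac(req: dict) -> Optional[str]:
    # Hypothetical stand-in for RBAC: req["granted"] lists the users it allows.
    return None if req["user"] in req.get("granted", ()) else "403 Forbidden: RBAC"


def validating_webhook(req: dict) -> Optional[str]:
    # Stand-in for Gatekeeper: deny kube-system writes by non-system users.
    if req.get("namespace") == "kube-system" and not req["user"].startswith("system:"):
        return "403 Forbidden: admission webhook"
    return None


# Mutating admission and schema validation (which run between RBAC and the
# validating webhook in the real API server) are omitted for brevity.
PIPELINE: list[Stage] = [authenticate, authorize_rbac, validating_webhook]


def handle(req: dict) -> str:
    for stage in PIPELINE:
        reason = stage(req)
        if reason is not None:
            return reason  # later stages never see this request
    return "persisted to etcd"
```

Because RBAC runs first, Gatekeeper can only narrow what RBAC already allows. It can never grant extra access.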

Open Policy Agent

Open Policy Agent (OPA) is an open source, platform-agnostic policy engine that lets users write policies as code in OPA’s policy language, Rego. Combined with admission controllers in Kubernetes, OPA gives cluster administrators many options to create custom policies.

Gatekeeper is the OPA implementation that integrates with Kubernetes. It lets users create and enforce policies through a custom resource called ConstraintTemplate. With it, cluster administrators extend the Kubernetes API with new custom resources that enforce custom policies.

Constraint templates and Constraints

To enforce a new policy, first define it with a ConstraintTemplate containing the Rego code that validates the constraint.

Then create a custom resource of the kind defined by that ConstraintTemplate, known as a “Constraint”. Creating the Constraint tells Gatekeeper that the policy needs to be enforced.

The Constraint's match field determines which resources the policy applies to. It uses criteria such as kinds, namespaces, excludedNamespaces, labelSelector, and others.
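A rough Python model of how these criteria narrow down the objects a constraint applies to is shown below. It covers only `kinds`, `namespaces`, `excludedNamespaces` and the `matchLabels` part of `labelSelector`. Gatekeeper supports more (for example `namespaceSelector` and `matchExpressions`), and the field handling here is a simplification, not Gatekeeper's code.

```python
def _matches(values: list[str], value: str) -> bool:
    return "*" in values or value in values


def constraint_matches(match: dict, obj: dict) -> bool:
    """Approximate Gatekeeper `match` semantics for a namespaced object (sketch)."""
    api_version = obj.get("apiVersion", "")
    group = api_version.split("/")[0] if "/" in api_version else ""  # core group is ""
    metadata = obj.get("metadata", {})
    namespace = metadata.get("namespace", "")
    labels = metadata.get("labels", {})

    kinds = match.get("kinds", [])
    if kinds and not any(
        _matches(k.get("apiGroups", []), group) and _matches(k.get("kinds", []), obj.get("kind", ""))
        for k in kinds
    ):
        return False
    if "namespaces" in match and namespace not in match["namespaces"]:
        return False
    if namespace in match.get("excludedNamespaces", []):
        return False
    wanted = match.get("labelSelector", {}).get("matchLabels", {})
    return all(labels.get(key) == value for key, value in wanted.items())
```

Every criterion that is present must be satisfied. Omitting a criterion leaves that dimension unrestricted.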

Considerations

While the described scenario requires enforcing multiple policies on the kube-system namespace, usually Gatekeeper is used for other purposes and the general recommendation is to avoid adding restrictions to the kube-system namespace because these can cause control plane disruptions.

As a rule of thumb, always make sure the enforced policies don’t block the deployment of critical workloads or dependencies in the cluster. Similarly, if third-party controllers interact with the cluster, make sure they keep access to the resources they need to work as expected.

How to use Gatekeeper

Let’s go through an example of policy enforcement in Kubernetes. For this, the only requirement is a healthy Kubernetes cluster.

Install Gatekeeper

To deploy Gatekeeper 3.4, run:

```shell
kubectl apply -f https://raw.githubusercontent.com/open-policy-agent/gatekeeper/release-3.4/deploy/gatekeeper.yaml
```

Create a Constraint Template with Rego

```shell
cat <<EOF | kubectl create -f -
apiVersion: templates.gatekeeper.sh/v1beta1
kind: ConstraintTemplate
metadata:
  name: denyrestrictednamespaceaccess
spec:
  crd:
    spec:
      names:
        kind: DenyRestrictedNamespaceAccess
  targets:
    - target: admission.k8s.gatekeeper.sh
      rego: |
        package DenyNamespace

        violation[{"msg": msg}] {
          not startswith(input.review.userInfo.username, "system:")
          msg := sprintf("you cant use namespace: %v", [input.review.object.metadata.namespace])
        }
EOF
```

This creates a Custom Resource Definition (CRD) called DenyRestrictedNamespaceAccess, which we use to enforce the policy. The Rego reports a violation whenever the username of the user making the request doesn’t start with the system: prefix. Which namespaces it applies to is decided by the Constraint created in the next step.
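For readers less familiar with Rego, the rule in the template translates roughly into the Python below. It illustrates the logic only; it is not how Gatekeeper evaluates policies. `review` stands for the admission request that Gatekeeper exposes to Rego as `input.review`, and an empty list means "no violation".

One Rego subtlety is mirrored here. If `object.metadata.namespace` is absent, the `sprintf` is undefined and the rule produces nothing. Kubernetes sends the object being deleted in `oldObject` rather than `object`, so as written this rule would not report a violation for DELETE requests.

```python
def deny_namespace_violations(review: dict) -> list[dict]:
    """Python mirror of the DenyNamespace Rego rule (illustration only)."""
    username = (review.get("userInfo") or {}).get("username")
    obj = review.get("object") or {}
    namespace = (obj.get("metadata") or {}).get("namespace")
    # Rego `not startswith(username, "system:")` also succeeds when username is undefined.
    if isinstance(username, str) and username.startswith("system:"):
        return []
    # sprintf over an undefined namespace is undefined in Rego: the rule does not fire.
    if namespace is None:
        return []
    return [{"msg": f"you cant use namespace: {namespace}"}]
```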

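Gatekeeper passes the incoming admission request to the Rego code as input.review. If any matching constraint reports a violation, Gatekeeper answers the API server with an AdmissionReview whose “allowed” field is false; otherwise the request is allowed. Read more about admission controllers.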
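As a rough sketch of that last step (not Gatekeeper's actual code), the violations from every matching constraint can be folded into a single response. The `[constraint-name] message` format mirrors the error text shown in this post. The function name and the input shape, a dict from constraint name to messages, are hypothetical.

```python
def to_admission_response(uid: str, violations: dict[str, list[str]]) -> dict:
    """Fold per-constraint violation messages into an AdmissionResponse (sketch).

    `violations` maps a constraint name to the messages its Rego produced.
    """
    messages = [
        f"[{name}] {msg}"
        for name, msgs in sorted(violations.items())
        for msg in msgs
    ]
    # A single violation from any constraint is enough to deny the request.
    response = {"uid": uid, "allowed": not messages}
    if messages:
        response["status"] = {"message": "\n".join(messages)}
    return response
```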

Create a constraint

```shell
cat <<EOF | kubectl create -f -
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: DenyRestrictedNamespaceAccess
metadata:
  name: denyrestrictednamespaceaccess
spec:
  match:
    namespaces:
      - kube-system
  rules:
    - operations: ["*"]
EOF
```

The match field specifies that the kube-system namespace is the one on which to enforce this policy.

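The rules entry with operations: ["*"] is meant to cover every write operation on the namespace, i.e. CREATE, UPDATE and DELETE. Keep in mind, however, that Gatekeeper only receives the operations listed in the rules of its ValidatingWebhookConfiguration, so check that it includes every operation you want to block. Also remember that admission webhooks never see read requests (get, list, watch), so this approach cannot prevent users from reading resources in kube-system.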
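To see why reads cannot be blocked this way, here is a small Python mapping from API request verbs to the admission operation, if any, that the API server sends to webhooks. It is a simplified table. CONNECT, used for subresources such as exec, is left out, and the function name is hypothetical.

```python
from typing import Optional

# Admission operation per request verb (simplified). None: no admission step at all.
VERB_TO_OPERATION: dict[str, Optional[str]] = {
    "create": "CREATE",
    "update": "UPDATE",
    "patch": "UPDATE",
    "delete": "DELETE",
    "deletecollection": "DELETE",
    "get": None,
    "list": None,
    "watch": None,
}


def reaches_validating_webhook(verb: str, webhook_operations: set[str]) -> bool:
    """True if a request with `verb` can be intercepted by a webhook registered
    for `webhook_operations` (for example {"CREATE", "UPDATE"} or {"*"})."""
    operation = VERB_TO_OPERATION.get(verb)
    if operation is None:
        return False  # reads never go through admission
    return "*" in webhook_operations or operation in webhook_operations
```

With a webhook registered for CREATE and UPDATE only, a `patch` is still checked because it arrives as UPDATE, but a `delete` is not.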

To check that the policy is enforced, run the following command:

```shell
kubectl create serviceaccount testsa -n kube-system
```

The request should be denied with an error containing:

```text
[denyrestrictednamespaceaccess] you cant use namespace: kube-system
```

Create a Pod Security Policy Constraint Template with Rego

This example comes from Gatekeeper's library of commonly used policies.

```shell
cat <<EOF | kubectl create -f -
apiVersion: templates.gatekeeper.sh/v1beta1
kind: ConstraintTemplate
metadata:
  name: k8spspallowprivilegeescalationcontainer
  annotations:
    description: Controls restricting escalation to root privileges.
spec:
  crd:
    spec:
      names:
        kind: K8sPSPAllowPrivilegeEscalationContainer
  targets:
    - target: admission.k8s.gatekeeper.sh
      rego: |
        package k8spspallowprivilegeescalationcontainer

        violation[{"msg": msg, "details": {}}] {
          c := input_containers[_]
          input_allow_privilege_escalation(c)
          msg := sprintf("Privilege escalation container is not allowed: %v", [c.name])
        }

        input_allow_privilege_escalation(c) {
          not has_field(c, "securityContext")
        }

        input_allow_privilege_escalation(c) {
          not c.securityContext.allowPrivilegeEscalation == false
        }

        input_containers[c] {
          c := input.review.object.spec.containers[_]
        }

        input_containers[c] {
          c := input.review.object.spec.initContainers[_]
        }

        # has_field returns whether an object has a field
        has_field(object, field) = true {
          object[field]
        }
EOF
```

The policy inspects every container and init container in the pod. It reports a violation when a container either has no securityContext or does not explicitly set allowPrivilegeEscalation to false.
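The same logic, written as a Python illustration of what the Rego checks (not how Gatekeeper runs it), is shown below. `review` plays the role of `input.review`. The two `input_allow_privilege_escalation` rules collapse into a single condition: a container is flagged unless `allowPrivilegeEscalation` is exactly `false`.

```python
def privilege_escalation_violations(review: dict) -> list[str]:
    """Python mirror of the k8spspallowprivilegeescalationcontainer Rego.

    `review` plays the role of input.review; returns one message per offending container.
    """
    spec = (review.get("object") or {}).get("spec") or {}
    containers = (spec.get("containers") or []) + (spec.get("initContainers") or [])
    messages = []
    for c in containers:
        # A missing securityContext, a missing field, or `true` all count as a violation.
        allow = (c.get("securityContext") or {}).get("allowPrivilegeEscalation")
        if allow is not False:
            messages.append(f"Privilege escalation container is not allowed: {c.get('name')}")
    return messages
```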

Create a pod that violates the policy

```shell
cat <<EOF | kubectl create -f -
apiVersion: v1
kind: Pod
metadata:
  name: static-web-without-security-context-0
spec:
  containers:
    - name: web
      image: nginx
      ports:
        - name: web
          containerPort: 80
          protocol: TCP
EOF
```

Before actually enforcing the policy, we create a pod without a security context to illustrate how policies are applied and how the audit feature works.

Create Pod Security Policy (PSP) constraint

```shell
cat <<EOF | kubectl create -f -
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sPSPAllowPrivilegeEscalationContainer
metadata:
  name: psp-allow-privilege-escalation-container
spec:
  match:
    kinds:
      - apiGroups: [""]
        kinds: ["Pod"]
    excludedNamespaces: ["kube-system"]
EOF
```

The constraint applies the policy to all pods, except those running in the kube-system namespace.

Validating the policy

```shell
cat <<EOF | kubectl create -f -
apiVersion: v1
kind: Pod
metadata:
  name: static-web-without-security-context-1
spec:
  containers:
    - name: web
      image: nginx
      ports:
        - name: web
          containerPort: 80
          protocol: TCP
EOF
```

Trying to create the pod above should fail. It is identical to the first pod apart from its name, but this time the webhook blocks it with the following message:

```text
[psp-allow-privilege-escalation-container] Privilege escalation container is not allowed: web
```

To create the pod successfully, update the manifest to add a security context to the container, at the same level as name, image and ports:

```yaml
securityContext:
  allowPrivilegeEscalation: false
```
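If you generate manifests programmatically, the same fix can be applied to every container and init container of a pod. This is a minimal Python sketch that works on the manifest as a dict, for example one loaded from YAML. The function name is hypothetical, and the function keeps any other securityContext settings already present.

```python
import copy


def disallow_privilege_escalation(pod: dict) -> dict:
    """Return a copy of `pod` with allowPrivilegeEscalation: false on every container."""
    patched = copy.deepcopy(pod)  # leave the caller's manifest untouched
    spec = patched.setdefault("spec", {})
    for key in ("containers", "initContainers"):
        for container in spec.get(key) or []:
            ctx = container.get("securityContext") or {}
            ctx["allowPrivilegeEscalation"] = False
            container["securityContext"] = ctx
    return patched
```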

Audit

Gatekeeper's audit feature also periodically checks resources that already exist in the cluster against every constraint. This cluster was already running a pod without a securityContext before the constraint was created, so that violation is recorded in the constraint status. You can list it with the following command (jq is required):

```shell
kubectl get constraint -o json | jq '.items[].status.violations'
```

```json
[
  {
    "enforcementAction": "deny",
    "kind": "Pod",
    "message": "Privilege escalation container is not allowed: web",
    "name": "static-web-without-security-context-0",
    "namespace": "default"
  }
]
```
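The jq filter prints one array per constraint, or `null` for constraints without violations. The Python sketch below instead flattens the output of `kubectl get constraint -o json` into a single list and tags each entry with its constraint name, which can be easier to post-process or alert on. The function name is hypothetical.

```python
def collect_violations(constraint_list: dict) -> list[dict]:
    """Flatten status.violations from `kubectl get constraint -o json` output.

    Constraints without violations (no status yet, or no violations field)
    are skipped instead of producing null as the jq filter does.
    """
    rows = []
    for item in constraint_list.get("items", []):
        constraint = item.get("metadata", {}).get("name")
        for violation in (item.get("status") or {}).get("violations") or []:
            rows.append({"constraint": constraint, **violation})
    return rows
```

For example, call `collect_violations(json.load(sys.stdin))` in a small script and pipe the kubectl output into it.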

Conclusion

Providing a flexible, secure and highly available Kubernetes cluster is a hard task that involves many challenges. We’ve looked at how to protect control plane resources and how to prevent privilege escalation in pods.

In the process we ran into some limitations of Kubernetes RBAC and addressed them by using Gatekeeper to add “deny” rules based on custom requirements. Keep in mind that cluster administrators should review in detail which users should, or should not, have access to the kube-system namespace.

Limiting access to the kube-system namespace is a very specific requirement, and it is generally recommended to avoid adding constraints to that namespace. This post shows how to do it as a reference, but keep in mind that it could lead to control plane disruptions. Make sure every control plane component and user can still work as expected.

The two examples provided allow cluster administrators to deny access to a namespace and to prevent privilege escalation in containers. They are enough to illustrate the idea and should give cluster administrators a starting point for creating custom policies.

Keep in mind that when the AdmissionReview arrives at Gatekeeper, the request has already been authenticated and authorised, so those topics are out of scope for this post.

OPA/Gatekeeper is a great option for handling many kinds of policies in a Kubernetes cluster. With ConstraintTemplates and Constraints, cluster administrators can define policies and choose which resources each constraint applies to. The official Rego and Gatekeeper documentation is a great help for understanding and writing custom policies.
